Introduction

We often get asked, “How long will this take to build?” This is a pretty reasonable question!

However, the answer tends to be “it depends”, and the reason is inherent to how you build effectively with this technology: it really depends on which maturity stage you’re targeting for the solution.

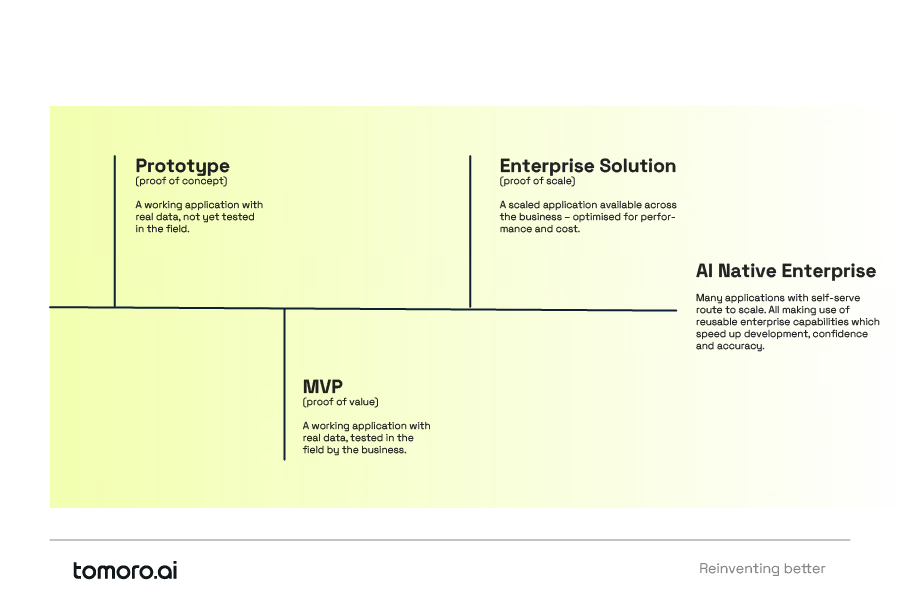

We think about AI applications in terms of three maturity stages:

- Proof of concept (a.k.a. prototype)

- Proof of value (a.k.a. minimum viable product, MVP)

- Proof of scale (a.k.a. enterprise solution)

This blog defines each stage in the context of a large enterprise: what trade-offs are made at each point, and what solutions tend to look and feel like when you get there.

As we’ll discuss, it’s a journey, not a menu. The fastest way to proof of scale is through proof of concept and proof of value.

Proof of concept (a.k.a. prototype)

A working application with real data, built with subject matter experts (SMEs), but not yet deployed in the field.

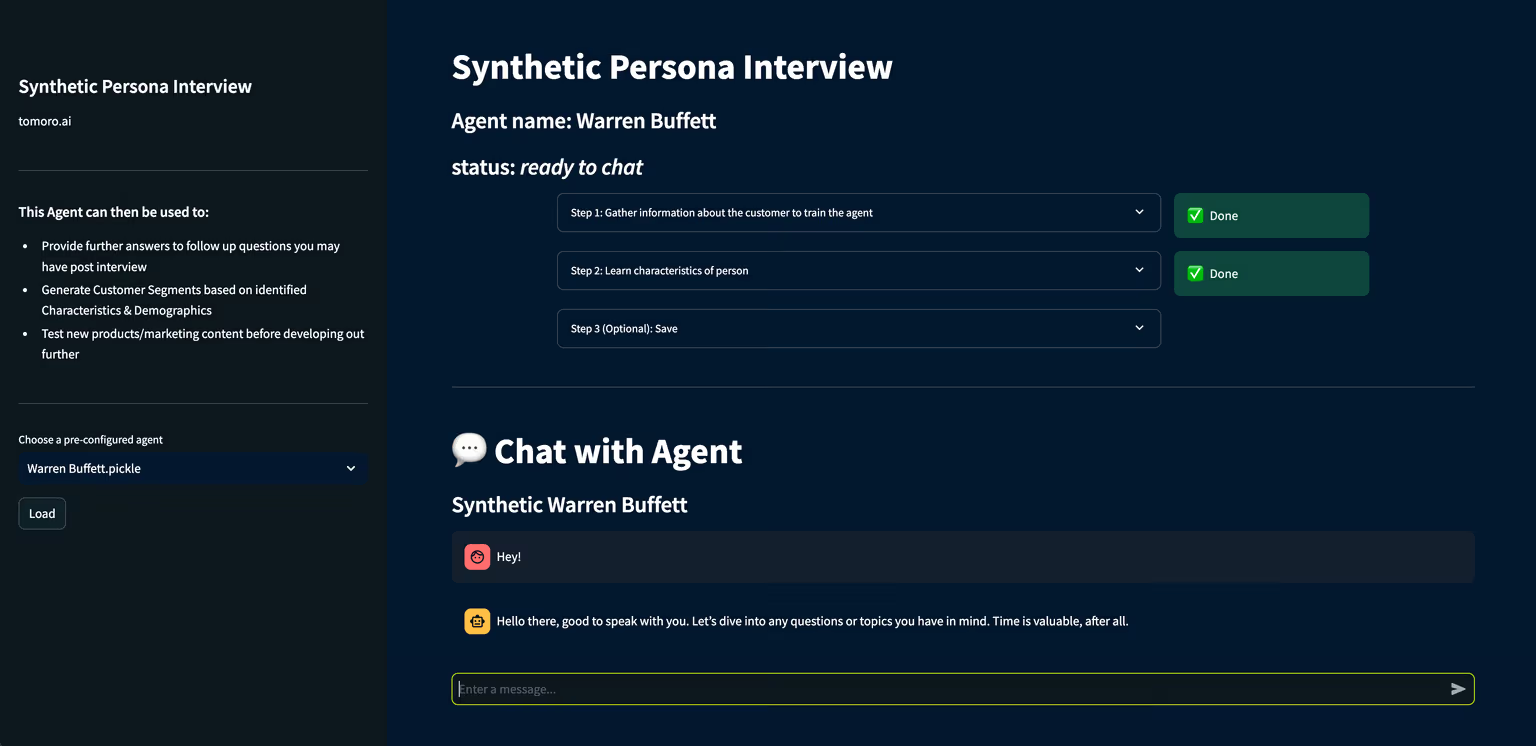

A proof of concept: Synthetic personas to better understand your customers

We’re looking to prove:

- The features the users want are feasible

- There is sufficient data and knowledge in the business to create the component parts of the V1 agent (see Anatomy of an AI agent)

- There is a viable plan to build the MVP

What we’re not looking to prove:

- How much this will cost to run at full scale

- The value delivered from using the solution in the field

- How we’ll rigorously evaluate performance and cost during operation

Why do it like this?

Proving what's important first

The two most important questions for any enterprise application are: will it be valuable, and will it work? “How will we scale it?” and “What will it cost?” are also good questions, but they only become relevant once you’ve cleared that first hurdle. A working prototype (emphasis on working) will teach you 100x more than the most beautiful business case document ever will.

Leaning into what this technology does well

Generative AI (the core of AI agent solutions) is by its nature a technology that requires fast iteration to develop. You can have your first demo of some functions within half a day. Development itself is a loop: generate responses, score the model’s attempts, then reinforce the successes and mitigate the failures.
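To make that loop concrete, here is a minimal sketch of an eval harness, assuming a very simple pass criterion (each response must contain some required phrases). The `EvalCase`, `keyword_score` and `run_evals` names and the `stub_model` stand-in are hypothetical; in practice the model would be a real API call, and scoring would often use richer checks such as human review or a model-graded rubric.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: list[str]  # assumed pass criterion: required phrases


def keyword_score(response: str, case: EvalCase) -> bool:
    """Pass if every required phrase appears in the response (case-insensitive)."""
    text = response.lower()
    return all(phrase.lower() in text for phrase in case.must_contain)


def run_evals(
    model: Callable[[str], str], cases: list[EvalCase]
) -> tuple[float, list[str]]:
    """Run every case through the model; return the pass rate and failing prompts."""
    if not cases:
        raise ValueError("need at least one eval case")
    failures = [c.prompt for c in cases if not keyword_score(model(c.prompt), c)]
    return 1 - len(failures) / len(cases), failures


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, used only for illustration."""
    if "refund" in prompt.lower():
        return "Our refund window is 30 days."
    return "I'm not sure."


cases = [
    EvalCase("What is the refund policy?", ["30 days"]),
    EvalCase("Do you ship abroad?", ["international"]),
]
pass_rate, failed = run_evals(stub_model, cases)
print(pass_rate, failed)  # 0.5 ['Do you ship abroad?']
```

Each failing prompt points at something to fix (the prompt, the retrieval, the data), and re-running the whole suite after every change shows whether a fix helped without breaking earlier successes.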

Minimising time (and cost) to decision point

Getting to this stage should take somewhere between 1 and 4 weeks, with 1-3 people.

Where you land in that range depends on the use case features, the data required, the number of stakeholders involved, and the technology and governance landscape of the business. Most of this time will be spent on solution tuning and stakeholder engagement.

If it takes longer than that, you are doing it wrong. It’s critical to get past this stage as fast as possible, as it will enable you to make real choices (i.e., not theoretical PowerPoint decisions) about whether this is a viable solution to move forward with, and to present a meaningful business case for what the route to an MVP will mean for the business.

Proof of value (a.k.a. MVP)

A working application with real data, deployed and tested in the field by a cohort of users.

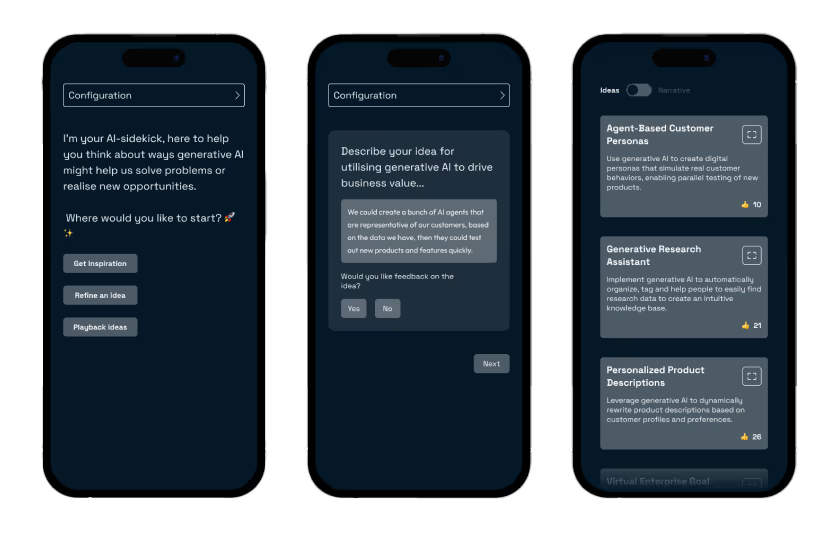

An MVP: AI Ideation Sidekick as a thinking partner and facilitator

We’re looking to prove:

- The solution delivers value for a meaningful section of the prospective user base

- We can manage the solution sufficiently to push it to live (performance, cost, accuracy evals (tests), etc.)

- We are capturing enough user data to give us options to optimise at proof of scale

What we’re not looking to prove:

- We can immediately deliver the scale required if it goes viral

- We’ve implemented every technique possible to minimise run costs (e.g., fine-tuning, model distillation, etc.)

Why do it like this?

Realise some value as fast as you can

Once you’ve got your stakeholders excited with a working proof of concept, you’ve bought yourself a few weeks to get something live. Weeks, not months. “Will users actually use this?” and “Can we do this safely?” become the next top questions on your to-do list, where “safely” has a broad definition, ranging from “Is it cost-controllable?” (financially safe) to “Will we get a Twitter storm?” (brand safe). A user-facing minimum viable product (MVP) is the fastest way to answer these questions.

Don’t solve problems you don’t have yet

Often, when MVPs are successful, people will immediately say, “But it’s going a bit slowly now,” or “But when will this feature come?” These questions can make you and the team feel like you’ve failed. You haven’t. This is what success feels like. MVPs that succeed immediately create momentum to scale them further, and that’s important. But think of the MVPs that don’t work: you don’t want to have committed six months to optimising them only to see them fail on launch.

Minimising time (and cost) to the next decision point

Getting to this stage should take a total of 8-14 weeks, with 3-5 people.

This includes the first 1-4 weeks you spent getting to the proof of concept. The same factors that apply to the proof of concept determine where exactly you fall in that range.

Similarly, if it takes longer than this, you’re doing it wrong: your carefully developed buy-in will start to crumble. Just as crucially, at about this point you need to go live to capture enough data to keep developing. As mentioned in the proof of concept stage, you need usage data to drive the solution forward, and by 8-14 weeks you’re going to need more volume than a small development team, working alongside business SMEs, can provide.

Proof of scale (a.k.a. enterprise solution)

A scaled application available across the full user base, optimised for performance and cost.

A scaled solution: A research assistant for analysts engaging with high-complexity, high-volume data sources

We’re looking to prove:

- The solution delivers value for the target user base

- The solution is cost, performance and safety optimised

- The solution is robust to future evolutions of models, knowledge, integrations, etc.

Why do it like this?

Prepare for volume

If your proof of value was a runaway success, it’s not unreasonable to expect the scaled solution to do well. The MVP’s results should give you a solid business case for deciding whether to move to the final phase.

The scaled solution is where you build on the controls and evals you implemented at MVP, based on the lessons you learned from the initial users. That covers everything from new few-shot prompts, to model fine-tuning and distillation to reduce cost, to more accurate evals at the different stages of your agent pipeline.

Create a self-learning loop

As has been a recurring theme in this blog, these solutions get better the more you use them (if you capture and use that data appropriately!). Therefore, factoring in how you’ll monitor usage and learn as you go is critical. This could range from capturing user prompts to discover previously unthought-of use cases, to monitoring agent responses to continuously steer them towards responsible, brand-safe behaviour.
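As a rough illustration of capturing usage, the sketch below logs each interaction as a JSON line and runs a naive brand-safety check over agent responses. The `Interaction` record, the `BLOCKLIST` terms and the helper functions are all hypothetical; a real system would write to durable storage and use far more robust response monitoring than a keyword list.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical brand-safety terms; real monitoring would be far more robust.
BLOCKLIST = {"guarantee", "lawsuit"}


@dataclass
class Interaction:
    user_prompt: str
    agent_response: str
    flagged: bool = False
    user_rating: Optional[int] = None  # optional explicit feedback, if collected
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def is_flagged(response: str) -> bool:
    """Naive check: flag any response containing a blocklisted term."""
    text = response.lower()
    return any(term in text for term in BLOCKLIST)


def log_interaction(log: list[str], prompt: str, response: str) -> Interaction:
    """Record one interaction as a JSON line (a list stands in for a log file)."""
    record = Interaction(prompt, response, flagged=is_flagged(response))
    log.append(json.dumps(asdict(record)))
    return record


def flagged_rate(log: list[str]) -> float:
    """Share of logged responses that tripped the safety check."""
    records = [json.loads(line) for line in log]
    if not records:
        return 0.0
    return sum(r["flagged"] for r in records) / len(records)


usage_log: list[str] = []
log_interaction(usage_log, "Summarise Q3 sales", "Q3 sales rose in Europe.")
log_interaction(usage_log, "Will this fund grow?", "I can guarantee strong returns.")
print(flagged_rate(usage_log))  # 0.5
```

Periodically reviewing the flagged records, alongside the raw prompts, is one way to feed what you learn back into your prompts and evals.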

Build modules for re-use and compatibility

You may have built your proof of concept and proof of value with a monolithic-style architecture, and that’s fine. In future you may want to move to a more composable approach; you can learn about that in The great, but tough, job of being an AI enablement function in a large enterprise (and what to do about it).

At the proof of scale phase, we’d advise against continuing with a monolith if possible, for two reasons. First, the AI ecosystem is moving ridiculously quickly, so it is highly likely you’ll need to switch aspects of the solution (e.g., the underlying foundation model) within a year. Without a systematic, controllable way to do this, it can be very challenging (talk to us if you want some tips on how to do this). Second, your solution will be made of many components (retrieval, fact extraction, orchestration, lineage citations, etc.) that will be incredibly useful for all your other solutions! You want to extract the ‘vanilla’ versions of these as soon as possible to speed up your future solutions.
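One common way to keep the foundation model swappable is to have every component depend on a small model interface rather than directly on a vendor SDK. The sketch below assumes hypothetical `ChatModel`, `VendorAModel` and `VendorBModel` names; the adapters return canned strings where a real implementation would call each vendor’s client library.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface that every foundation-model adapter must satisfy."""

    name: str

    def complete(self, prompt: str) -> str: ...


class VendorAModel:
    name = "vendor-a"

    def complete(self, prompt: str) -> str:
        # Placeholder: a real adapter would call vendor A's SDK here.
        return f"[vendor-a] {prompt}"


class VendorBModel:
    name = "vendor-b"

    def complete(self, prompt: str) -> str:
        # Placeholder: a real adapter would call vendor B's SDK here.
        return f"[vendor-b] {prompt}"


# Registry of available adapters, keyed by name so config can select one.
MODEL_REGISTRY: dict[str, ChatModel] = {
    m.name: m for m in (VendorAModel(), VendorBModel())
}


def summarise(text: str, model: ChatModel) -> str:
    """A reusable 'vanilla' component that depends only on the interface."""
    return model.complete(f"Summarise: {text}")


active_model = MODEL_REGISTRY["vendor-b"]  # e.g. chosen via deployment config
print(summarise("Q3 sales report", active_model))  # [vendor-b] Summarise: Q3 sales report
```

With this shape, switching vendors becomes a configuration change (a different registry key) rather than a rewrite of every component that calls the model.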

Make sure you’re in control

Most enterprises will have made this journey using a third-party foundation model via an API (e.g., GPT-4o from OpenAI or Azure OpenAI, or Claude 3.5 from Anthropic directly or via AWS or Google).

This is to be encouraged (as long as you understand the security implications of your model vendor). However, it does leave you at risk of not being in complete control of your end-to-end system. To account for this, you need to do one or both of the following:

- Have a robust CI/CD pipeline for your agent deployment that measures model effectiveness against a known baseline, plus the ability to automatically tune prompts and related configuration to optimise new deployments when model versions change.

- Use ‘your own’ model, which may be a fine-tuned version of a third-party (including open-source) model. You may have done this naturally to optimise cost and performance when moving from MVP to scale, but it can also be very useful for control purposes.
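The baseline comparison in the first option can be as simple as a gate that blocks a deployment when any eval metric drops below the current baseline. This is a minimal sketch, assuming higher-is-better metrics scored between 0 and 1 and an arbitrary tolerance of 0.02; the function names, metric names and scores are illustrative, not real results, and automatic prompt tuning is out of scope here.

```python
def regression_gate(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.02,
) -> list[str]:
    """Return metrics where the candidate is missing or more than `tolerance` below baseline."""
    regressions = []
    for metric, base_score in baseline.items():
        new_score = candidate.get(metric)
        if new_score is None or new_score < base_score - tolerance:
            regressions.append(metric)
    return regressions


def ci_exit_code(baseline: dict[str, float], candidate: dict[str, float]) -> int:
    """Map the gate result to a process exit code: 0 to deploy, 1 to block."""
    failed = regression_gate(baseline, candidate)
    if failed:
        print(f"Blocking deployment; regressions in: {failed}")
        return 1
    print("Candidate model meets the baseline; OK to deploy")
    return 0


# Illustrative scores from running the same eval suite on both model versions.
baseline_scores = {"accuracy": 0.91, "groundedness": 0.88, "tone": 0.95}
candidate_scores = {"accuracy": 0.92, "groundedness": 0.83, "tone": 0.94}

print(regression_gate(baseline_scores, candidate_scores))  # ['groundedness']
```

In a CI job, the script could end with `sys.exit(ci_exit_code(baseline, candidate))`, so that a non-zero exit code fails the pipeline before the new model version is deployed.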

Summary

Hopefully, I’ve convinced you that thinking about the process of building agents as proof of concept, proof of value, and proof of scale is a useful mental model for planning and execution purposes.

The main thing is to keep it simple and solve the problem in front of you right now—not the one that’s only relevant if you have success on your hands!

As always, if you have any comments, questions, or disagreements(!), please don’t hesitate to drop us a note.