This blog is for leaders who want to understand the technical building blocks of developing AI agents in their business. It covers:

- The components that make up an AI agent

- The functional features that these components enable

- How these components map to different technology vendors (as of April 2024)

If you’d first like to read a more business-orientated summary of what an AI agent is, go to: What is an AI Agent?

A simple definition of an AI agent

An AI agent is an application with a defined knowledge base, the capability to initiate several actions, and the intelligence to choose the appropriate one given a specific context.

It’s designed to be self-sufficient in understanding requests, gathering the right data, making decisions and performing, or initiating, action.

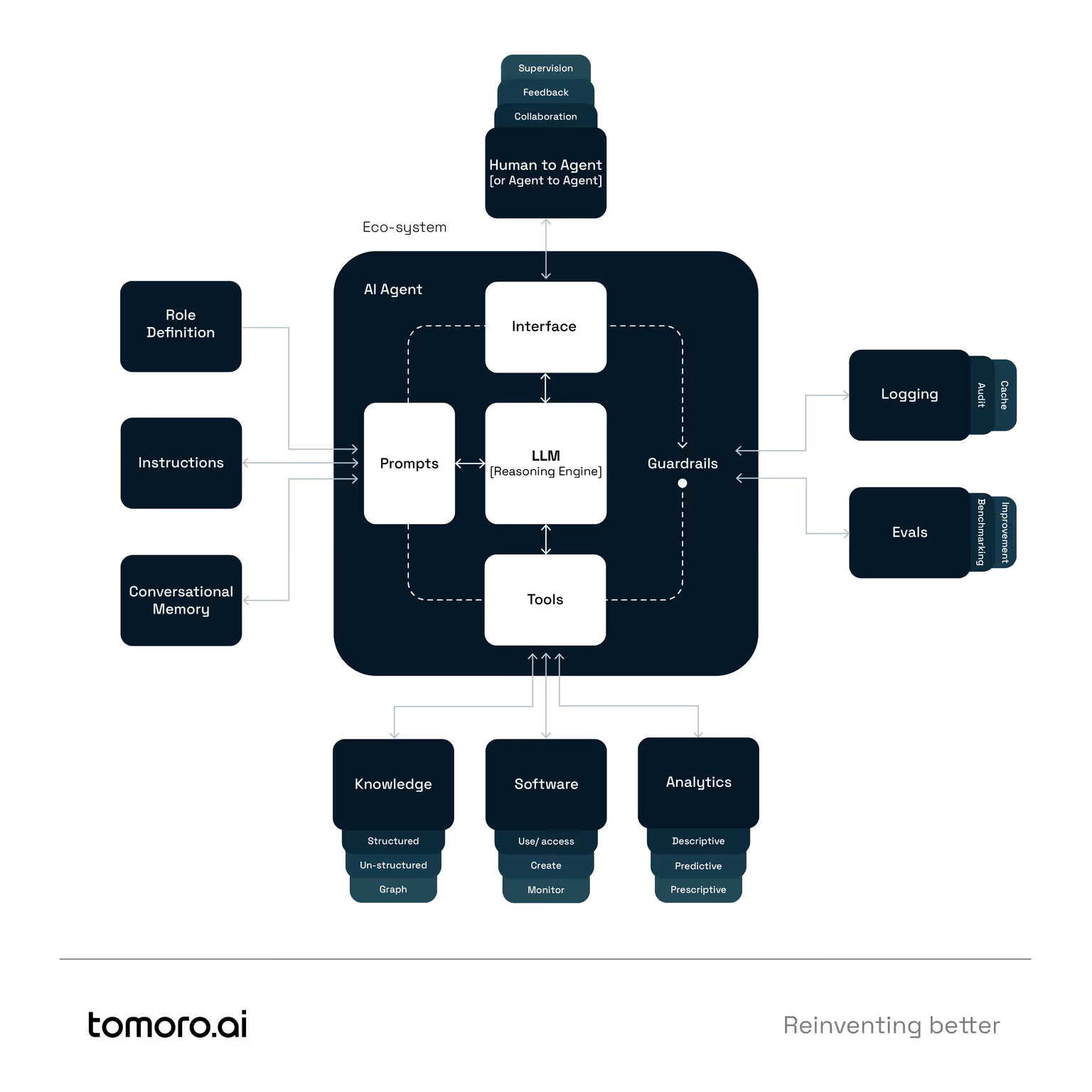

The components that make up an AI agent

Reasoning / decision engine (for example; GPT-4)

This is the anchor of an AI agent. The AI engine orchestrates the other components and provides the reasoning and decision-making capability to turn messy input into clear next steps or, conversely, to take generic next-step actions and personalise them for a specific customer or colleague.
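
As a rough illustration of that orchestration role, here is a minimal Python sketch. Everything in it is hypothetical: the action names, the `Action` class and the `choose_action` function are ours, and a simple keyword rule stands in for the LLM so the example runs without any external service.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Action:
    name: str
    description: str
    run: Callable[[str], str]


def lookup_order(request: str) -> str:
    # Hypothetical action: a real agent would query an order system here.
    return "Order status: dispatched"


def escalate_to_human(request: str) -> str:
    # Hypothetical fallback when no action clearly fits.
    return "Passed to a colleague for review"


ACTIONS: Dict[str, Action] = {
    "lookup_order": Action("lookup_order", "Check the status of an order", lookup_order),
    "escalate": Action("escalate", "Hand over to a human", escalate_to_human),
}


def choose_action(request: str) -> str:
    """Stand-in for the reasoning engine.

    In a real agent, the LLM would be given the request plus the action
    descriptions and asked to name one action. A keyword rule is used here
    only so that the sketch is self-contained.
    """
    if "order" in request.lower():
        return "lookup_order"
    return "escalate"


def run_agent(request: str) -> str:
    chosen = choose_action(request)
    # Never run something the engine invented: fall back if the name is unknown.
    action = ACTIONS.get(chosen, ACTIONS["escalate"])
    return f"[{action.name}] {action.run(request)}"


if __name__ == "__main__":
    print(run_agent("Where is my order?"))
    print(run_agent("I have a complaint"))
```

The design point is that the engine only ever selects from a fixed set of named actions; it does not execute anything itself.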

Tools: long-term, shared, knowledge (for example; a vector, graph or structured database)

One of the key factors that distinguishes AI agents from generative AI alone is disentangling reasoning from memory. Because LLMs’ recall capabilities are still somewhat unreliable, we need to augment LLMs with well-curated knowledge. This knowledge can be stored in structured, vector (unstructured) or graph databases. Graph databases in particular enhance the LLM’s ability to process and utilise complex datasets, improving performance in tasks that require deep contextual understanding.

This shared knowledge can be improved by pre-processing existing knowledge into a more AI-ready structure. This can be achieved in many ways, from the simplest (chunking and vector-embedding source documents) to approaches for more complicated content (such as LLM-enabled fact extraction and graph modelling).
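
To make the simplest of these approaches concrete, here is a toy Python sketch of chunking and vector-embedding a document, then retrieving the closest chunk for a question. It is an illustration under stated assumptions, not a production design: the `embed` function is a bag-of-words count standing in for a real embedding model, the index is an in-memory list rather than a vector database, and all function names are ours.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, max_words: int = 8) -> List[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(document: str, max_words: int = 8) -> List[Tuple[str, Counter]]:
    """Pre-process a document into (chunk, vector) pairs."""
    return [(chunk, embed(chunk)) for chunk in chunk_text(document, max_words)]


def search(index: List[Tuple[str, Counter]], query: str, top_k: int = 1) -> List[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


if __name__ == "__main__":
    doc = "Refunds are processed within five working days. Delivery takes two days in the UK."
    index = build_index(doc, max_words=7)
    print(search(index, "How long do refunds take?"))
```

Fixed-size word chunks are the crudest possible split; in practice chunk boundaries usually follow the document's own structure, such as sections or paragraphs.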

Learn more here: AI knowledge chatbots, easy right!?

Tools: software and BI (for example; a calculator, or an existing data algorithm)

There’s a reason we humans use a calculator when we do maths. It’s not because we can’t do it with pen and paper (or, for some of us, with just our brains); it’s because it’s far easier and less energy-intensive to use a tool designed for that specific job. The same logic applies to AI systems. It is possible to get a large language model to accurately compute a sum, but the preparation effort and compute cost are far higher than training an LLM to recognise when a calculator is needed and getting it to call one as a tool. This premise applies well beyond calculators: to using CRMs, other predictive AI models or even dedicated reasoning modules to augment the core LLM’s decision making.
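
The calculator case can be sketched in a few lines of Python. This is a simplified illustration: the `calculator` tool is a small, safe arithmetic evaluator, and a regular expression stands in for the LLM's decision about when to call it. The function names and the routing rule are our own assumptions, not any vendor's API.

```python
import ast
import operator
import re

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate +, -, *, / arithmetic without resorting to eval()."""
    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("Unsupported expression")

    return _eval(ast.parse(expression.strip(), mode="eval"))


# Matches simple arithmetic such as "12 * 3" (no brackets, for brevity).
_ARITHMETIC = re.compile(r"\d[\d\s.+\-*/]*[+\-*/][\d\s.+\-*/]*\d")


def answer(request: str) -> str:
    """Route to the calculator when the request contains arithmetic.

    The regular expression is a stand-in for the LLM recognising that a
    tool is needed; anything the tool cannot handle falls back to the LLM.
    """
    match = _ARITHMETIC.search(request)
    if match:
        try:
            return f"calculator: {calculator(match.group())}"
        except (ValueError, SyntaxError, ZeroDivisionError):
            pass
    return "llm: (free-text answer would be generated here)"


if __name__ == "__main__":
    print(answer("What is 1234 * 5678?"))
    print(answer("Tell me about our refund policy"))
```

Note the fallback: text that merely looks like arithmetic, such as a date, is handed back to the LLM rather than producing an error.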

Prompts: short term memory and role definition (for example; selection-inference prompt architecture & role definition of a marketeer)

Short-term memory, or agent-only memory, enables the agent to have a multi-message conversation with a user or with the system it’s communicating with. Because LLMs still struggle with multi-step reasoning out of the box, this tends to be where you deploy prompt engineering techniques to get the agent to mimic the skills of a particular role, or to get it to consistently break apart its reasoning with techniques such as selection-inference or chain-of-thought prompting.
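
A minimal Python sketch of how these pieces fit together is shown below. It assumes the role/content message shape commonly used by chat-style LLM APIs; the `ShortTermMemory` class, its parameters and the wording of the step-by-step instruction are all hypothetical choices of ours.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical chain-of-thought style instruction appended to every role definition.
REASONING_INSTRUCTION = (
    " Think step by step: list the facts you are relying on"
    " before giving a recommendation."
)


@dataclass
class ShortTermMemory:
    role_definition: str
    max_turns: int = 3  # user/assistant exchanges to keep; assumes max_turns >= 1
    turns: List[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record a message and drop the oldest ones beyond the window."""
        self.turns.append({"role": role, "content": content})
        self.turns = self.turns[-self.max_turns * 2:]

    def build_prompt(self, user_message: str) -> List[Message]:
        """Assemble role definition, recent history and the new message."""
        system = {
            "role": "system",
            "content": self.role_definition + REASONING_INSTRUCTION,
        }
        return [system, *self.turns, {"role": "user", "content": user_message}]


if __name__ == "__main__":
    memory = ShortTermMemory("You are an experienced marketeer.", max_turns=2)
    memory.add("user", "We are launching a new savings product.")
    memory.add("assistant", "Who is the target audience?")
    for message in memory.build_prompt("First-time savers aged 18 to 25."):
        print(message["role"], ":", message["content"])
```

Keeping only the most recent turns is the simplest way to bound the prompt size; summarising older turns is a common alternative.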

Human and machine interface (for example; a chatbot interface)

Agents need to communicate either with us directly, or with each other in more complex, multi-agent systems. Either way, there needs to be an interface layer that enables input / output with requests to execute the desired process flow.

Guardrails (for example; an accuracy eval test for truthful responses compared to a ground-truth database)

What we can’t measure, we can’t trust, especially in an enterprise setting. One of the critical factors holding AI back today is the complexity of monitoring outcomes in AI systems, especially in more complex LLM-based and end-to-end processes. This capability includes ‘evals’, or tests, which are used to define and monitor a system within a set of defined guardrails - critical both for system development and for the compliance approval needed to run these systems in live settings.
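
As a simple illustration of an accuracy eval, the Python sketch below scores an agent against a small ground-truth set and checks the result against a threshold. The questions, the stub agent, the substring-matching rule and the 0.9 threshold are all assumptions made for the example; real evals typically use richer scoring than a substring match.

```python
from typing import Callable, Dict


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so matching is less brittle."""
    return " ".join(text.lower().split())


def accuracy_eval(agent: Callable[[str], str], ground_truth: Dict[str, str]) -> float:
    """Fraction of questions where the agent's answer contains the expected fact."""
    if not ground_truth:
        raise ValueError("ground_truth must contain at least one case")
    passed = sum(
        1
        for question, expected in ground_truth.items()
        if normalise(expected) in normalise(agent(question))
    )
    return passed / len(ground_truth)


def within_guardrail(score: float, threshold: float = 0.9) -> bool:
    """True when the measured accuracy meets the agreed threshold."""
    return score >= threshold


# Hypothetical ground-truth set and agent, for illustration only.
GROUND_TRUTH = {
    "What is the refund window?": "30 days",
    "Which regions do we ship to?": "UK and EU",
}


def stub_agent(question: str) -> str:
    if "refund" in question.lower():
        return "Refunds are accepted within 30 days."
    return "I am not sure."


if __name__ == "__main__":
    score = accuracy_eval(stub_agent, GROUND_TRUTH)
    print(f"accuracy={score:.2f}, within guardrail: {within_guardrail(score)}")
```

Running the same eval during development and again in live monitoring gives one consistent measure to report for compliance approval.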

The top 3 perceived limitations of AI agents today

While the key components are outlined above, as of April 2024 there are still a number of technical challenges which create limits (temporary or otherwise) on what most businesses trust AI agents to do in their business.

Alongside that, there are many more perceived limitations that people assume are blocking factors, but which already have robust mitigations in place if these systems are developed and utilised appropriately.

01

Limitation: LLMs hallucinate - you can’t trust their output

Mitigation: Create a distinction between using an LLM to ‘reason’ (i.e. decide the right action) and as ‘memory’ (i.e. to know the answer to your question).

Gaining trust in the responses of an AI agent system means having a source (or ground-truth) dataset that you can independently trust and audit, and getting the LLM to return answers from this dataset with specific citations. This is why creating AI-optimised knowledge is so important for an AI agent system.
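
One way to picture this in Python: the agent may only answer from an audited fact store, every answer carries the identifier of the fact it came from, and it declines when nothing matches well enough. The sketch is deliberately simplified. Keyword overlap stands in for real retrieval, and the `Fact` and `Answer` types, the source identifiers and the `min_overlap` threshold are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set

STOP_WORDS = {"a", "an", "the", "is", "are", "of", "for", "to", "in", "how", "what", "who"}


@dataclass(frozen=True)
class Fact:
    source_id: str  # where an auditor can find the original statement
    text: str


@dataclass(frozen=True)
class Answer:
    text: str
    citation: Optional[str]  # None means the agent declined to answer


def key_words(text: str) -> Set[str]:
    """Lower-cased words with common filler words removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def grounded_answer(question: str, facts: List[Fact], min_overlap: int = 2) -> Answer:
    """Answer only from the fact store, always with a citation."""
    question_words = key_words(question)
    best, best_score = None, 0
    for fact in facts:
        score = len(question_words & key_words(fact.text))
        if score > best_score:
            best, best_score = fact, score
    if best is None or best_score < min_overlap:
        # Declining is preferable to letting the model answer from memory.
        return Answer("I can't find this in the approved knowledge base.", None)
    return Answer(best.text, best.source_id)


if __name__ == "__main__":
    facts = [
        Fact("policy-001#refunds", "Refunds are accepted within 30 days of purchase."),
        Fact("policy-002#shipping", "Standard shipping takes 2 working days."),
    ]
    print(grounded_answer("How many days are refunds accepted for?", facts))
    print(grounded_answer("Who is the CEO?", facts))
```

In a fuller system the LLM would rephrase the retrieved fact for the user, but the citation would still point back to the audited source.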

02

Limitation: LLMs aren’t smart enough (yet)

Mitigation: GPT-4 (and similar level models) are smart - but they certainly aren’t experts at everything. Our (very general) rule of thumb is that a well-prompted LLM is as smart as a very keen new graduate in your business.

For complex tasks, therefore, using multiple LLMs combined with more advanced prompt engineering techniques (see: Why compliance analysts make great AI prompt engineers) can radically improve the reasoning ability of an AI system. The other mitigation is shifting from a single agent to a multi-agent system for the most complex use cases.

03

Limitation: There are so many new security, monitoring and performance things to worry about!

Mitigation: This is true!

However, there are proven solutions emerging for these constantly. One of the biggest areas of R&D investment at Tomoro is in complex AI Agent benchmarking across accuracy, security and performance.

Good solution design to mitigate prompt injection and control potential attack vectors is vital. Testing is not only essential in production but also needs to be central to the development of the system itself, creating positive feedback loops for the right behaviour.

Technology provider choices

In terms of enterprise players in the AI agent space, the most mature and end-to-end offerings are available from:

- OpenAI (GPT-4 and Assistants API)

- NVIDIA (open-source models such as Llama 3, NIMs and the NeMo framework)

- Microsoft Azure (Azure OpenAI and Azure AI Studio)

- AWS (Claude 3 Opus and Amazon Bedrock)

- GCP (Gemini and Vertex AI Agent Builder)

Each provider has the components required to develop meaningful custom agents with sufficient access to intelligent models, monitoring guardrails and tool and function usage.

Please get in touch if you’d like an architectural blueprint for these providers, and we’ll aim to get this up on a blog soon.