Introduction

Having built a number of these (and rescued a few more) this blog is written for leaders and organisations who are:

- Considering building a GPT as an AI knowledge chatbot to help colleagues access their institutional knowledge

- Building an AI knowledge chatbot and have got stuck in optimising the accuracy, performance and/or range of data and query types that it can be utilised for

- Trying to go to the next level from knowledge Q&A to causal reasoning and introducing temporal (time-based) analysis

How do knowledge management solutions work

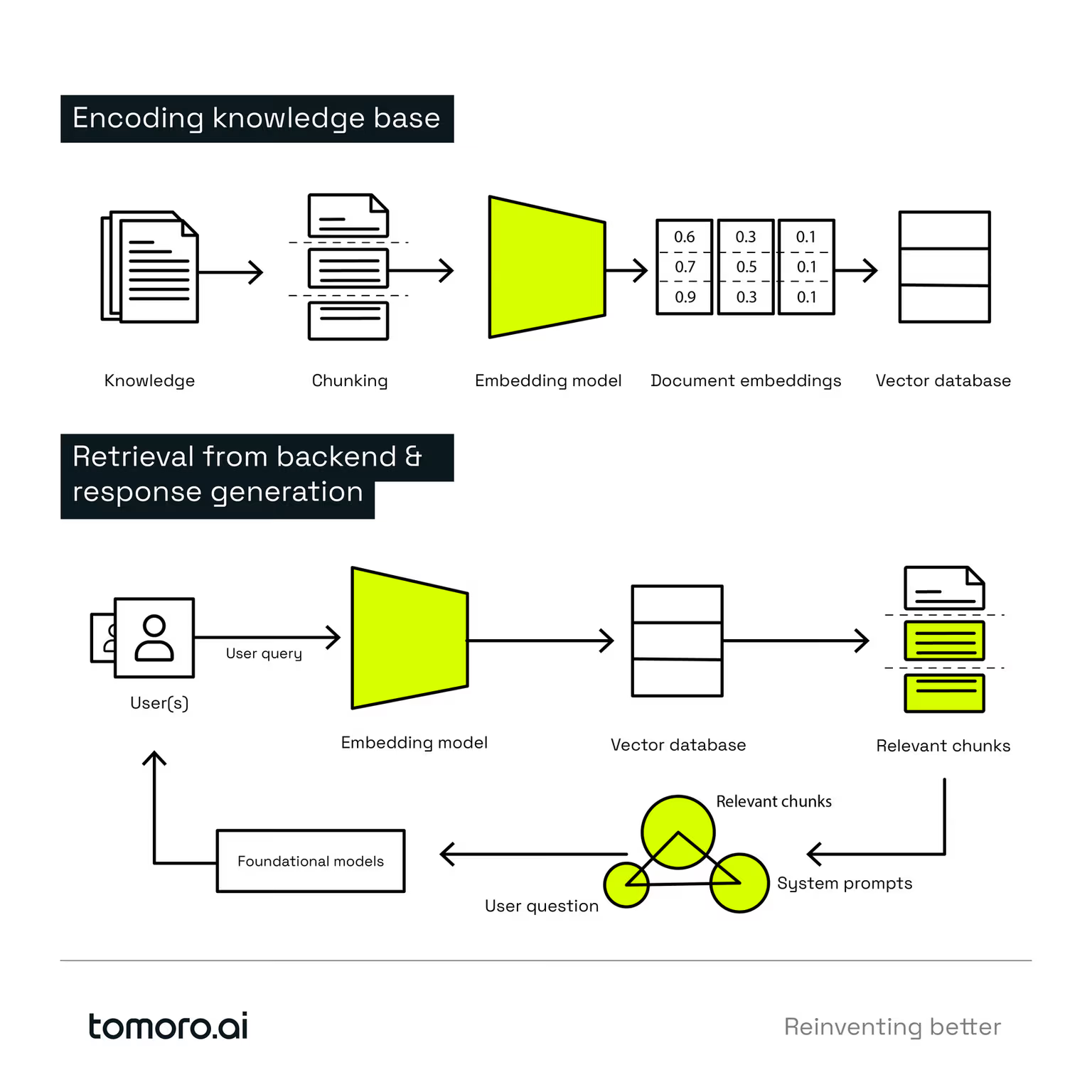

At the heart of knowledge management solutions is a concept called retrieval augmented generation (RAG). This diagram outlines the key concepts of how RAG works:

- Turning qualitative ('unstructured') documents from words into strings of numbers (embeddings)

- Storing these embeddings in a database known as a vector database

- Deploying a user-facing AI chatbot, which, firstly, converts user questions into strings of numbers (through the same embedding process)

- Matching the user’s question to the most similar text in your database

- Finally, returning the relevant content that the AI chatbot then summarises for the response to the user’s question

How to choose the right approach for your use case

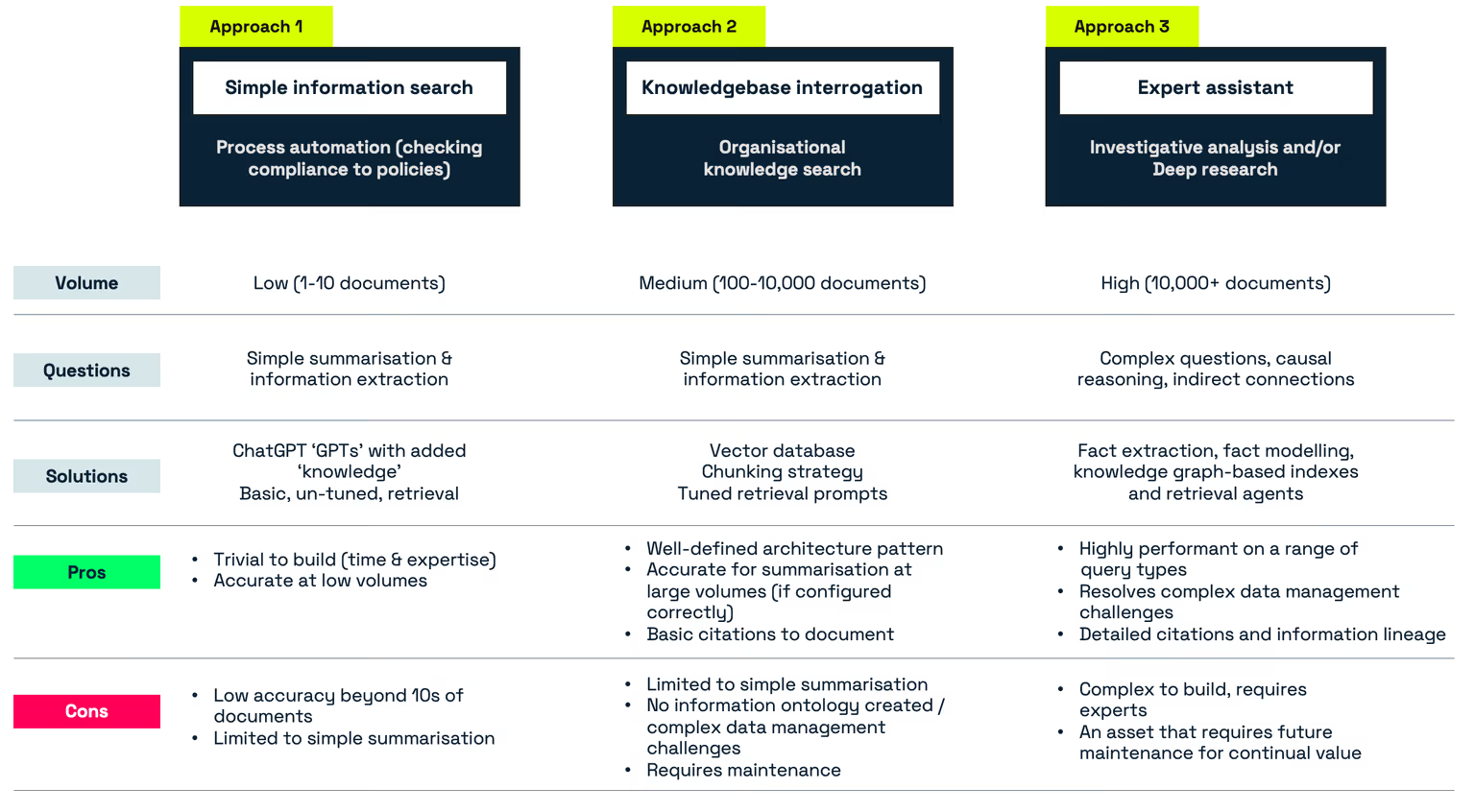

There is no 'one way' to do a knowledge management solution right.

We see 3 approaches that cover most if not all knowledge management use cases.

These approaches can be navigated by considering the following questions:

- Across how many documents do you expect to query?

If you are querying between 1-10 documents at a time, you are best off using a tool like Microsoft Copilot or OpenAI’s ChatGPT (Approach 1) due to the speed you can deploy it and the low cost (assuming you have one of these tools available already). These solutions are performant for ad-hoc queries across small volumes of knowledge. (for example, we use “NDABot” to help us review Non-Disclosure Agreements with our clients, the ChatGPT GPT helps us review these to find any terms we need to investigate further).

- Will the use case be limited to Q&A summary responses only, or will it involve more complex questions (What if?, How are these connected?, Has this changed over time? etc.)

If you are expecting to query lots of documents (100+) but the queries will always be variations upon “give me a summary answer to this question” then Approach 2 will be appropriate for your needs. This is more involved as it requires optimising how vast amounts of content are best embedded and stored but, with optimisation, this approach will provide suitable accuracy for these types of questions.

Note: we’ve worked with several customers who started out expecting only Q&A summary questions would be used in their knowledge chatbot, and then as trust built users quickly began to ask more complex questions. If you’ve followed Approach 2, this is where the possibility of ‘hallucinations’ or inappropriate answers starts to arise.

It is critical to have good ‘prompt monitoring’ in place to track how users are engaging with the system and continual measure the accuracy of responses against a designated benchmark

If queries are expected to be more complex (i.e. comparing concepts or inferring new information from existing information) then the kind of retrieval used in Approach 2 will break down. In this case, you need a more thorough approach to extract and index your fact-base from your knowledge, and different ways to join and parse this content which gives you confidence in the response. (You can learn more about how technically to do that here: Graph databases as RAG backends | Tomoro.ai ).

- How important is information lineage and traceability?

The final key question is the level of detail and confidence you require on source document traceability.

In our experience, the only method which provides high confidence of full traceability to source facts is Approach 3. In the other approaches, we often see missing, incorrect or hallucinated references, especially in complex, multi-document, queries.

How to test queries and performance

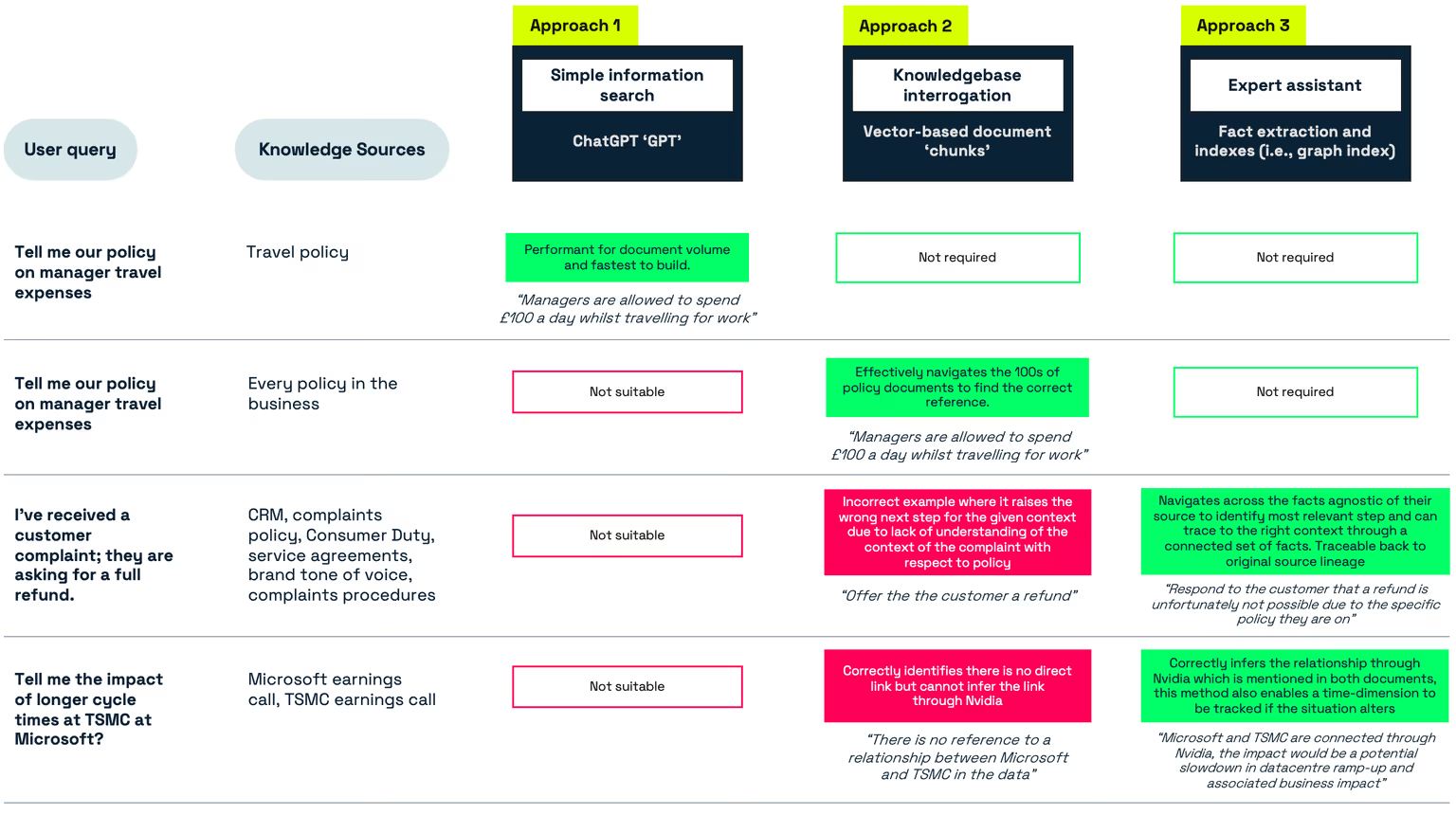

The best way to understand the relative performance of these approaches is with a series of examples.

- As the complexity of the query increases the need for a more nuanced method to traverse, interpret and infer an answer from the data is required - beyond summarisation

- As the volume of knowledge sources increases, the ability to effectively organise the institutional knowledge becomes critical to ensure the AI chatbot accesses the right information at the right time

How to choose the right model

There are many models to choose from:

- Open or closed (i.e. Meta’s Llama2 vs Google’s Gemini)

- Smaller to larger (i.e. Mistral’s Mistral7B to OpenAI’s GPT-4)

- Foundational or fine-tuned (i.e. Google's PalM-2 to Med-PalM-2)

In most situations, the best choice is to keep it simple and use the most powerful model (i.e. Gemini, GPT-4 etc.) that you can within the constraints of your IT strategy, especially at the start of your initiative.

However, there are some exception scenarios:

- A large volume of novel terminology specific to your context

If your organisation tends to use a significant volume of defined acronyms and terms which aren't present, or don't have the same definition, in standard English (or another language) then businesses should investigate fine-tuning as the method to embed this understanding into the knowledge AI chatbot.

- Highly sensitive deployments

If your business involves highly sensitive data, where you want the best possible understanding of the knowledge that is held within the LLM due to the nature of its training data it may be more appropriate to choose a model with greater transparency on the training process (i.e. Phi2b, Mixtral 8x7b etc.). These models are smaller (and hence trade capability for speed) but can be fine-tuned with relevant data to improve performance to reliable standards.

- Sub-second response times or in compute-constrained systems

For both of these using smaller models, which are fine-tuned to optimise for performance, will increase speed whilst being able to control for accuracy and performance. In this case, performance can be either/both the time to first token returned, or shorter and more accurate succinct results, instead of pages of text.

Fine-tuning is the process of using labelled examples (typically 100s) of ‘if you see A, respond with B’ to update the model so future results more closely match those labelled examples.

There are many flavours of fine-tuning, each of which can affect the underlying model in different ways, with different levels of permanence. This will be the topic for a future blog.

Fine-tuning can become expensive, potentially 10-100xing the cost of running your knowledge chatbot. Therefore using it when appropriate is important, as noted above.

Conclusion

In conclusion, AI knowledge chatbots start out easy, and then become a bit more tricky relatively quickly!

We often see companies, sensibly, evolving through these approaches step-by-step, unless there is a valuable reason why you’d jump straight to the end. This is to be encouraged as colleagues and businesses build the literacy and skills required to use this technology safely.

With that said, it can quickly become a problem if users have mis-set expectations on the performance of the AI knowledge solution. We have seen several organisations roll out their solution with approach one or two, and then as users become more dependent on it they ask more complex queries which either error, or worse, provide incorrect information based on the context. Ongoing monitoring of query prompts and performance is critical to control runaway issues.

If you’d like to learn more or explore the technical details behind these solutions, please feel free to reach out to us.