Introduction

LLM-based applications have taken the world of data-driven applications by storm. Almost every enterprise has been working on building a knowledge management application as an entry point to using LLMs.

While this is a great entry point to LLMs, the value of this class of applications truly comes from:

- Having a large corpus of information to power knowledge management solution

- Having the ability to retrieve relevant data given a context

To achieve this, most applications implement some form of RAG (Retrieval Augmented Generation) pattern. Using this pattern, it is possible to connect a data store to inform the generation of LLM-based responses in context and on a set of facts.

The most common implementation of the RAG pattern involves using vector databases as the backend.

However, in our testing, we found that when dealing with a large data source with complex unstructured documents graph databases tend to outperform vector database-based RAG in some surprising ways.

Observed Benefit

Reduced Hallucinations

Reduced hallucinations due to improved recall - Average Recall@5 on news articles was consistently 1.2% above vector-based retrieval in our experiments.

Reduced input token size

Using a graph database as a backend allows increased granularity and allows the capture data at fact-level (details below). We observed up to 20% reduced input tokens due to granular selection step.

This allowed us to better select for relevance and increasing the precision of the retrieval process.

Better Data Management

In this article we demonstrate methods for grouping facts related to specific entities, enabling "entity-level" data ownership.

This technique simplifies operational management and reduces complexity in data management processes. Additionally, it incorporates a time dimension and decay factor for recency metrics during the data retrieval phase.

Knowledge inference opportunities

Using graph databases as RAG backed allows opportunities to actively mine for inferences based on knowledge already held in the corpus. This boosts answer quality at the generation stage.

These benefits are most observed when the scale of the knowledge corpus used in RAG becomes large and approaches like map-reduce and in-context learning through context stuffing doesn’t work.

Additionally, we also observed benefits when the user questions required scanning a significant portion of the knowledge source (e.g. which reporter consistently provides a negative opinion on topic A).

The traditional approach to RAG

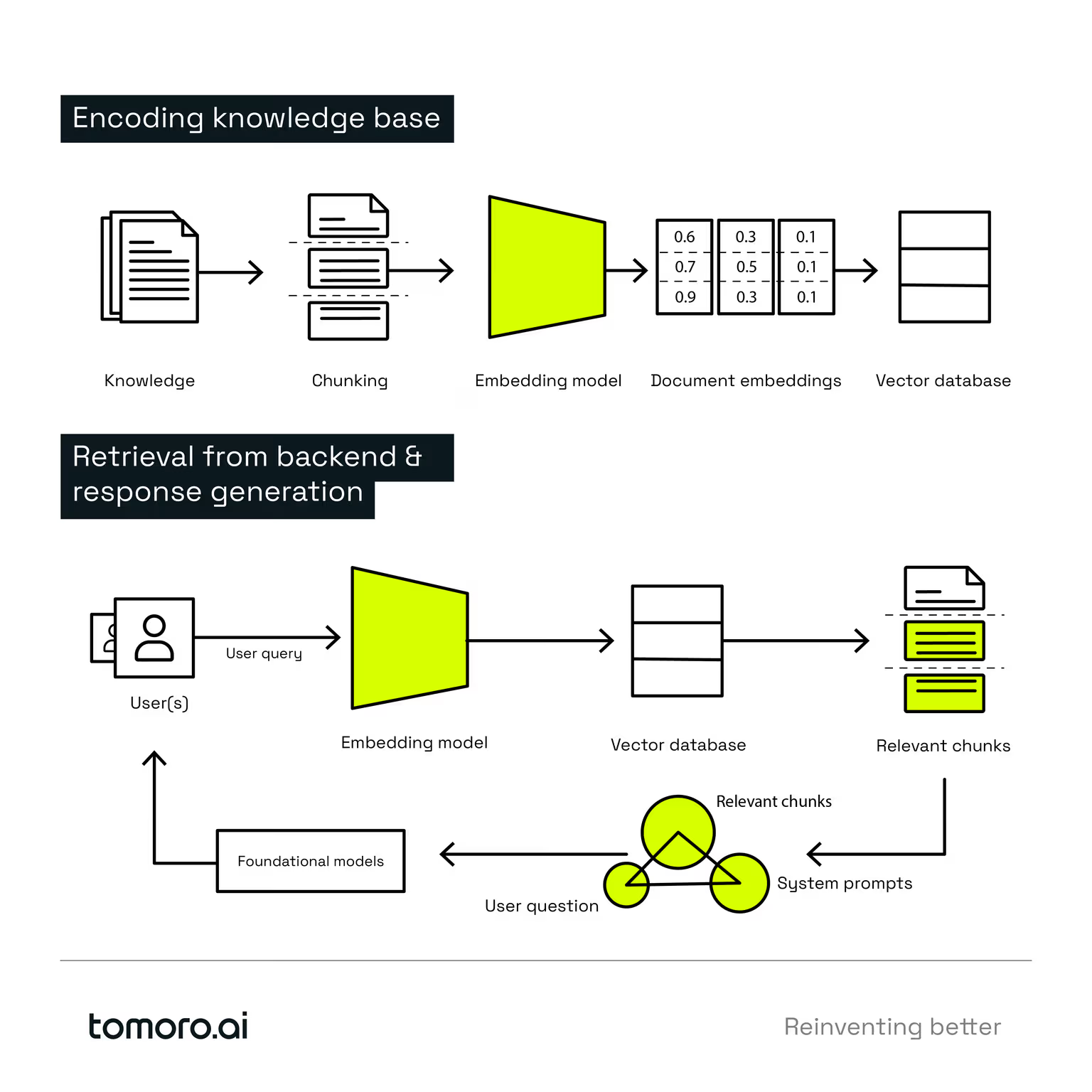

In traditional RAG implementations, the knowledge corpus is processed through an embedding model and the resulting output is stored in (typically) a vector store.

At retrieval time, the user question is processed through an embedding model and, using a similarity algorithm (e.g. cosine similarity), the “distance” between the user question and data chunks in vector databases is calculated. The chunks closest to the user question are deemed to be relevant to the question and retrieved. Then used as context to answer the question.

These knowledge chunks, along with the original question and accompanying context, are wrapped around a system prompt and sent to a foundational model to generate an appropriate response.

While this is the most popular implementation of the RAG pattern and it provides a good balance of functionality and complexity for small-scale applications, it tends to perform sub-optimally when solving knowledge management applications at scale.

This article describes some challenges faced with this approach and explores an alternative implementation of RAG using graph databases as the backend.

A case for using graph databases as RAG backend

Vector databases are designed from the ground up to store and perform operations on vectors (outputs from embedding models). This allows them to perform similarity calculations and retrieve relevant documents quickly and efficiently. Depending on the embedding model being used, the embeddings will match the user query semantically rather than specific keywords.

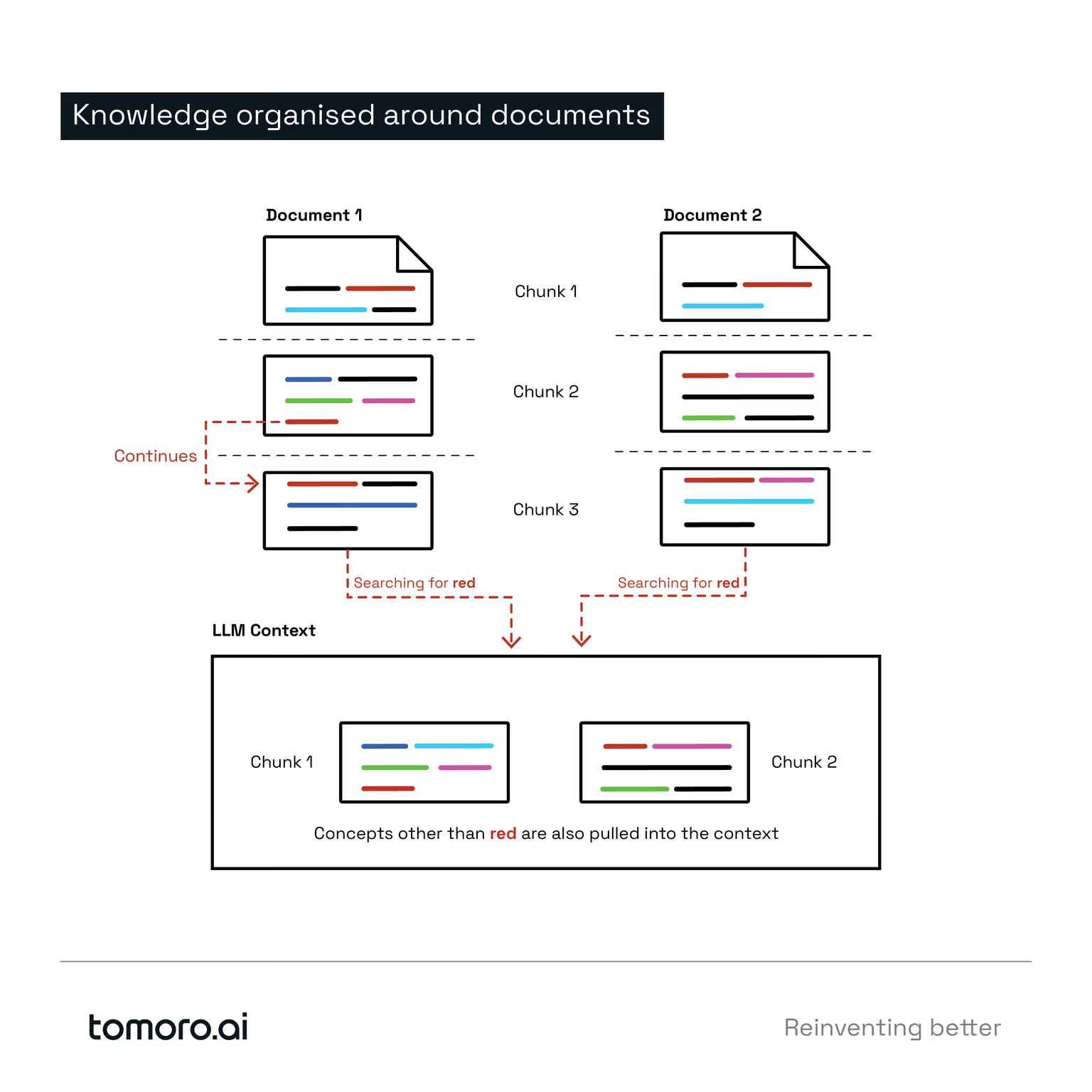

However, this still means that the search for relevant knowledge is restricted to the granularity of the document chunks. If the context relevant to a question is present across multiple chunks, then all such chunks must be retrieved and sent to the foundational model for a reasonable response.

In the real world, a knowledge corpus often consists of documents that discuss multiple topics and the relationships between them in a single document. This means that the information about an entity is often spread across multiple document chunks and across documents throughout the corpus.

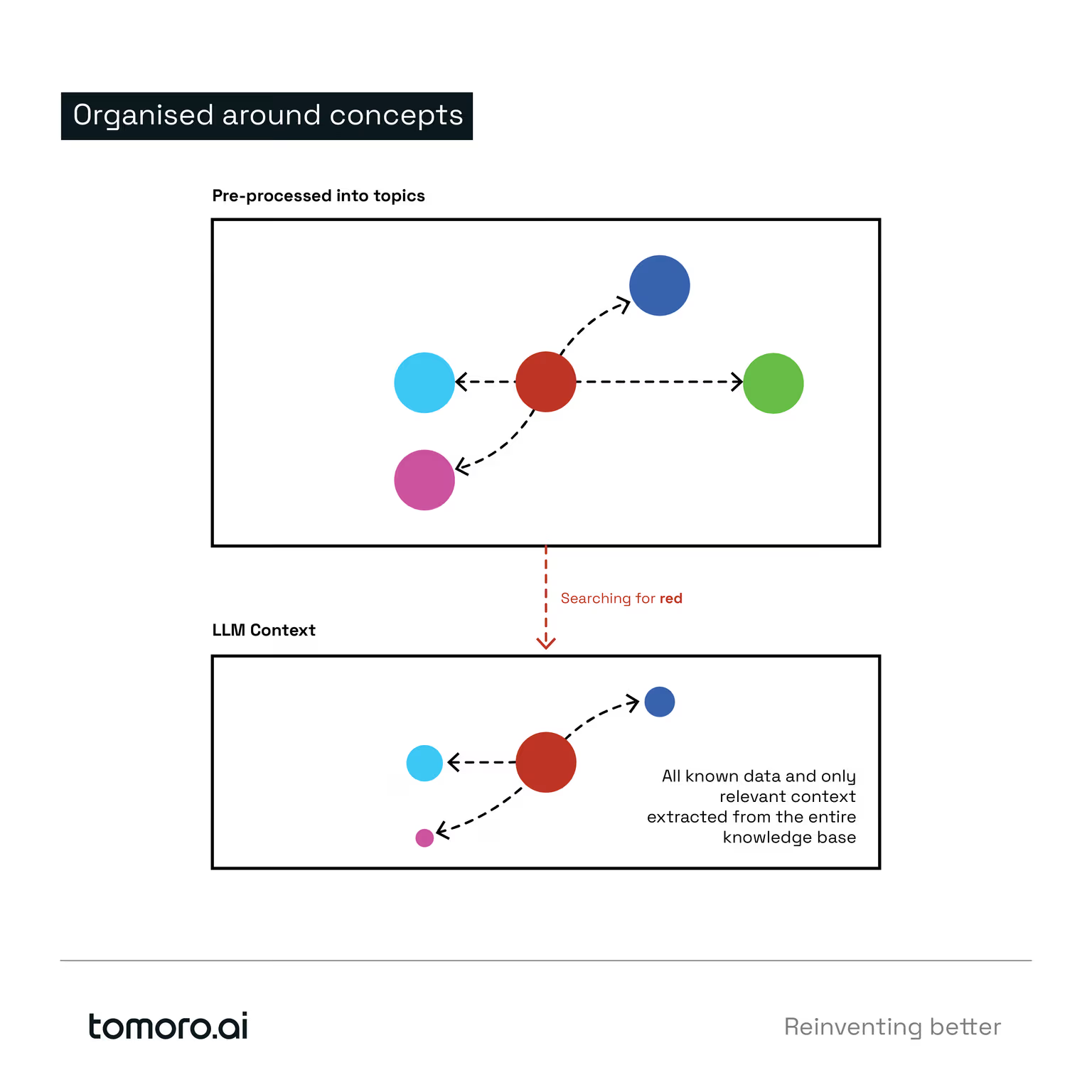

An alternative approach is to pre-process the knowledge corpus and, instead of keeping the information tightly connected with the document, re-organise information along core concepts.

In this approach, key entities are extracted from source documents and the facts mentioned in the document are represented as edges connecting the entities. These edges can also store operational metadata about the source document for easy citation of the facts.

At the retrieval stage, key entities are extracted from the user query, which can then be efficiently matched with the entities present in the graph database. All known facts about the given entity from across the knowledge corpus will be present in the neighbourhood of this node of the graph, implemented as edges.

Our retriever will selectively traverse outwards from the key entities to gather relevant context to satisfy the requirements of the user query and relevant context without being bound by the limitations of the original document chunks.

This approach provides many benefits over using purely vector-based document ‘chunks’:

- Increased Granularity

Using this approach the original document source is decomposed into independent entities and discreet facts connected to entities, the granularity of data is significantly increased.

Each edge connecting the nodes will encapsulate a pure fact presented in the document, along with the source and target-related entities. This approach is rooted in the implementation of Triples in RDF standards (Resource Description Framework).

Compared to document chunks, this approach with increased granularity allows us greater flexibility in selecting only the most relevant facts relevant to our query at the retrieval stage of the RAG implementation.

- Concept-based Indexing

Since all facts extracted about a given entity are organised as an edge connected to the node; everything known about an entity is only “one hop” away from the primary concept node.

This provides a way for the retriever to access all facts about an entity regardless of the source document. This higher density allows for retrieving context from a wide variety of data sources more efficiently.

For example, in a knowledge corpus of news articles; if the user was interested in finding the most “divergent view” on a subject; it would be possible for a retriever to traverse to core subject(s) edges and collect consensus view from edges within the graph databases. In this way, all news articles related to a subject, topic or concept can be scanned. This will allow a retrieval job to identify the news article with the most divergent view.

However, if the news articles were chunked as documents, this would likely be a complicated two-step process, to identify relevant documents that mention the subject and then identify the article with divergent views. Indeed, in some cases; this can be an impossible action to perform in complex cases.

- Data Updates and Management Options

Since facts are pre-processed and extracted from source documents; they are organised and managed as records in the knowledge corpus.

This allows flexibility in identifying conflicting facts and implementing data updates to core concepts at the point of ingestion. Facts can be updated and curated at the source, and controls can be applied to ensure that the index does not store conflicting facts.

This approach will also allow for implementing data ownership over the knowledge corpus. Independent nodes can be assigned to knowledge owners and data curators. Any major updates or new sources can be vetted and changes approved by the concept owners.

This significantly improves the citation quality returned to the user. The user will not only be able to audit the source of the fact but also get a time dimension of the fact as it changes over time.

Another popular use case is to apply “watch” on specific concept nodes. So when a key entity changes, a proactive message can be generated and pushed to interested users. This style of proactive recommendation use case is difficult to implement when data is organised as document chunks.

- Concept Traversal

This method of data organisation allows for making connections across documents even if the source documents do not explicitly discuss a direct relationship between concepts. Making these connections is a strong point of graph databases.

For example: In a corpus of news articles, if a user is interested in the “impact of oil futures on prices of orange juice” - It is possible that none of the documents directly discuss this specific impact. However when facts are organised in a graph database; a retrieval agent can traverse the shortest path(s) between two entities (oil futures and orange juice in this case) and collect relevant facts.

This retrieval approach tends to deliver a better-quality answer than a vector search on document chunks.

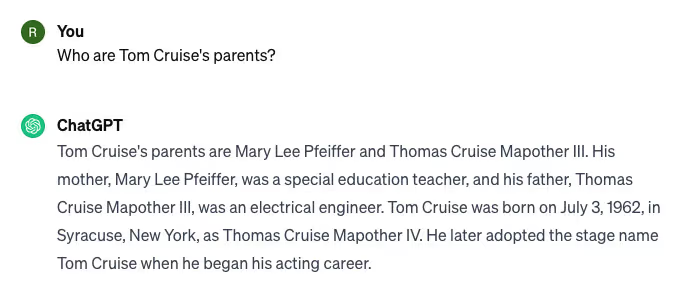

- Inference Mining

Facts often have a bidirectional relationship. And some LLMs on their own struggle to infer this on their own. A famous example of this is the often-cited example below (a.k.a reversal curse).

In a different session, when you ask for the same information, but in reverse:

Organising data as in a graph model opens up an opportunity to mine relationships to infer new information that may not have been explicitly mentioned in any one document.

This type of “If A is True; and B is True; can C be True?” style information mining can be performed offline and can significantly improve knowledge management solutions performance.

Putting the approach into practice

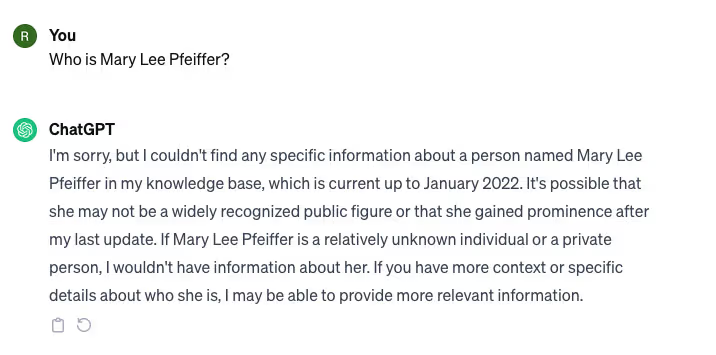

At Tomoro, we use an agent-based design pattern to implement our solutions. This allows us to deploy self-sufficient agents programmed with intentions and objectives to perform discrete actions.

In our RAG implementation using knowledge graphs, we modified our modeller agent with new skills of entity extraction and graph database modelling skills. This allows our modeller agent to automatically process incoming documents into constituent entities (modelled as nodes) and represent facts as edges connecting appropriate nodes.

The agent is intelligent and can recognise and resolve entities with different names (like JPMC and JP Morgan Chase) automatically and ensure that concepts are created at the right level of granularity for a given use case. This was possible thanks to excellent research by our in-house graph expert data scientist Mihai Ermaliuc and prompt engineer Albert Phelps.

During the online retrieval cycle, our retriever agent is programmed to identify the key entities in the user query, resolve them to graph entities and traverse the facts in connected edges to retrieve relevant context. This concept is then used for grounding an LLM to generate a response.

A separate offline knowledge management agent traverses the known facts and applies data management rules and inference algorithms to monitor and improve the quality of the index continuously.

These agents combined implement RAG pattern using a Graph Database and in our internal tests improve upon standard vector database patterns in the following ways:

- Provides a much better quality of response when a query relates to a concept spread across the knowledge corpus.

- Reduces the number of input tokens due to increased granularity and information density

- Provides opportunities to implement better data management policies including data ownership and a simpler authorisation model.

- Allows to actively mine for new inferences based on knowledge already held in the corpus. This allows for improved answer quality.

If you are interested in learning more about our research and more detailed technical benchmarks, please contact us at hello@tomoro.ai.

We also plan to publish a series of in-depth technical articles delving into specific designs of agents and related benchmarks.

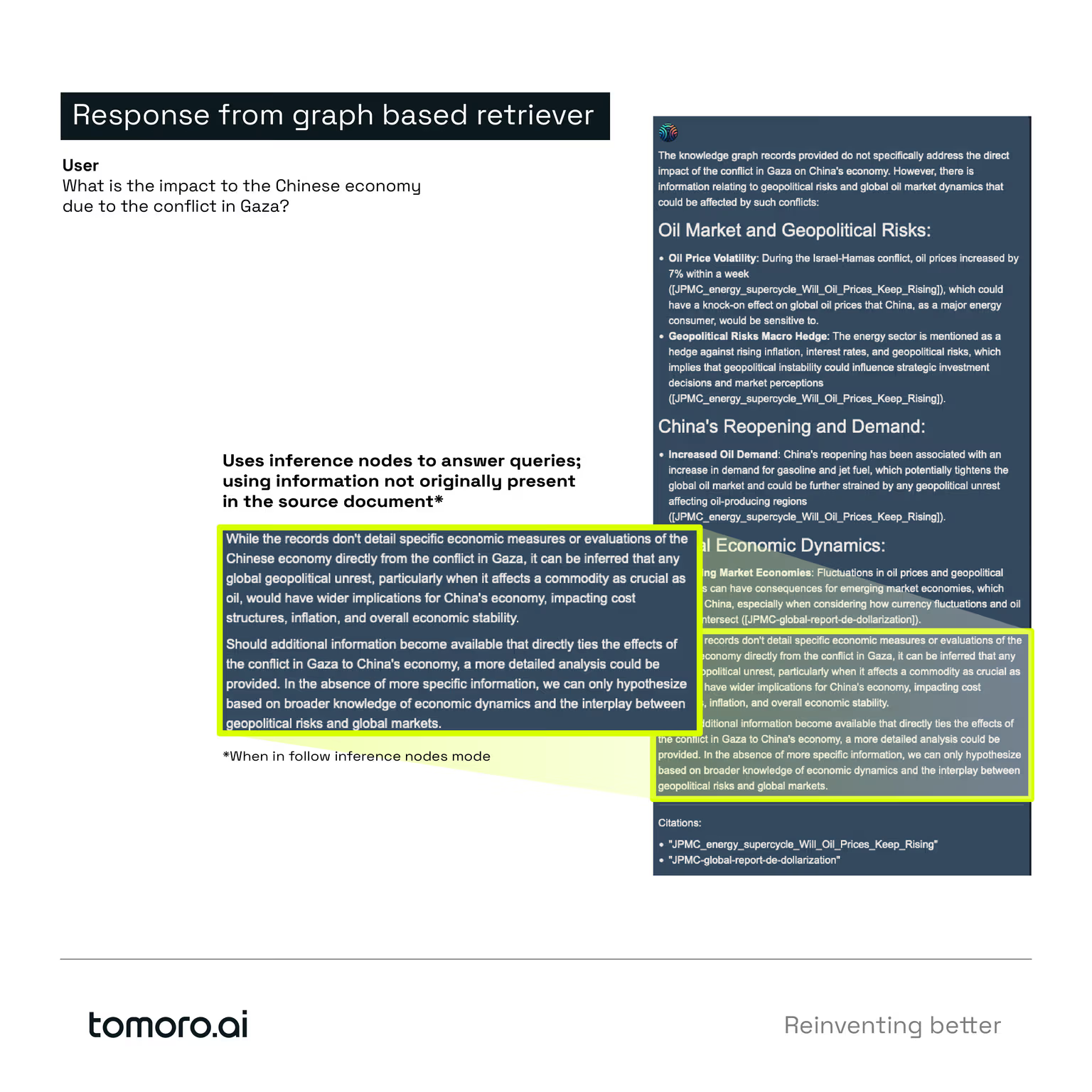

Meanwhile, here is an example of agent inferring details (when in “traverse inference edges node”) not originally present in the source documents. It can do this by traversing the inference edges that were created during the knowledge-mining process.