The great, but tough, job of being an AI enablement function in a large enterprise (and what to do about it)

This blog is for people grappling with the hard problem of building an enablement function for generative AI (and, more widely, AI agents) across a large enterprise.

Why is this tricky?

For a bunch of reasons! Being an enabler has always been tricky: someone else gets the value story, and you get the problem of getting them building quickly, safely and at scale.

Generative AI is, of course, an amazing new tool for building great solutions, but it brings enablement teams a mix of new and old problems.

The deceptively easy-to-build problem

Let’s start with a new problem: building compelling prototypes is just too easy! In the time I spent writing this blog, I could have built any number of initial solutions with a few prompts in the ChatGPT or Claude interface.

That’s so useful! But it doesn’t mean I’ve built something that could go into production at a large enterprise. It has great demo value, but once I use it for a while, I’ll realise that sometimes it hallucinates, gets confused by the knowledge I’ve given it, or has any number of other issues.

The problem with this technology, compared to other tech revolutions, is that the gap in difficulty between a compelling prototype and a trustworthy, at-scale production solution has never been bigger, and that’s naturally hard for people to understand!

The lack of enterprise patterns

This is a technology where academic papers are outpacing enterprise deployments. That is not normal!

The field is still very much emerging in terms of the ‘right’ patterns to enable enterprise capability, and any organisation that wants to be a leader needs to accept that a level of self-discovery is required to determine what works for them.

With that said, there are better answers out there (see some below), as well as some terrible advice! It can be hard to tell the two apart until you’ve got some development scars.

Everyone’s learning what is and isn’t possible

Compounding this, the age-old problem of people not really understanding the technology is rampant. Even if you have hundreds of use cases on your list, many of them are poor fits for what generative AI is actually good at! There are far too many chatbot-style use cases, which represent only a small part of the true enterprise value. Helping people shape great use cases that you can accelerate quickly is a hard education problem.

What do you need to focus on?

Ok, before you despair too much, there are absolutely some key areas to focus on here.

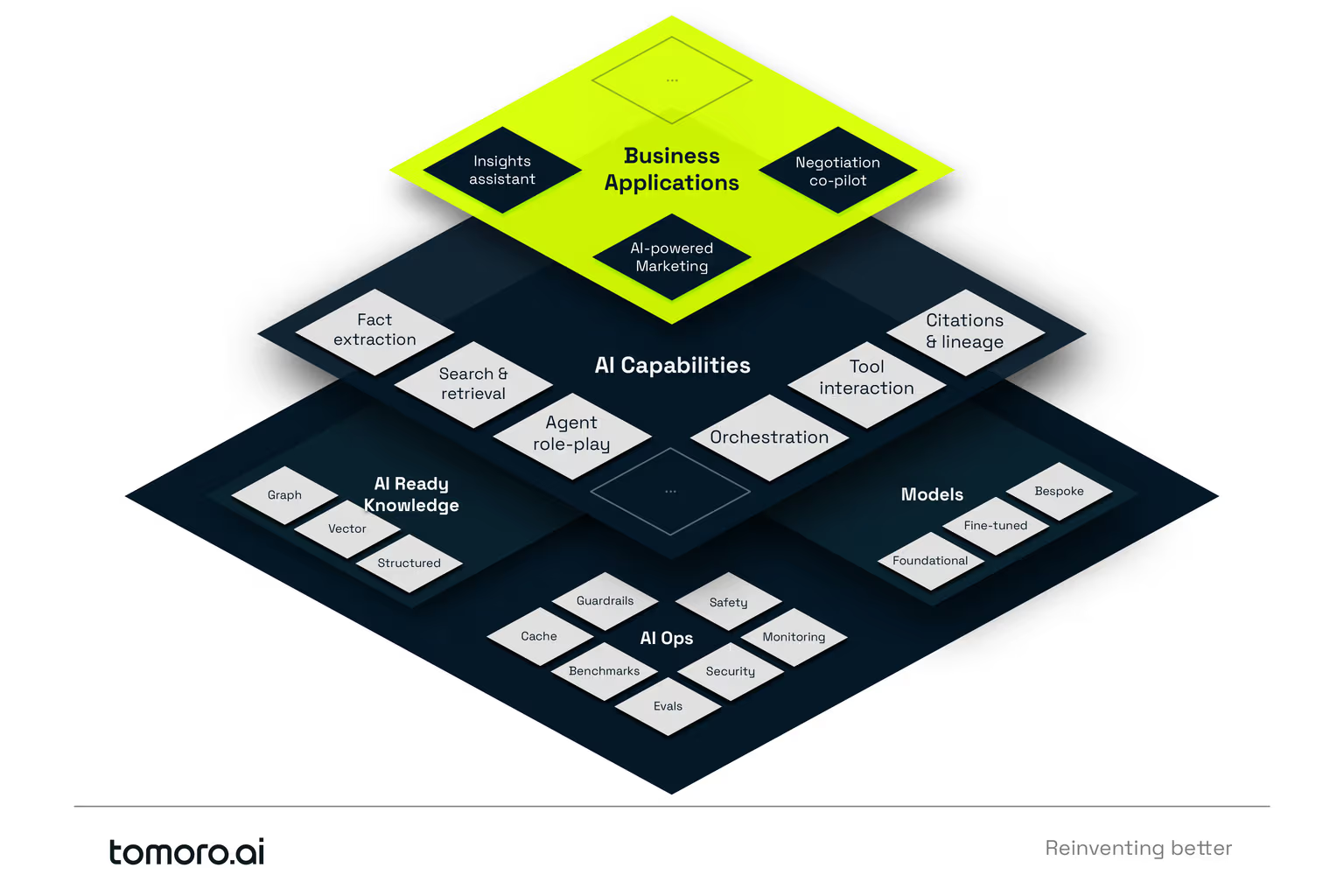

Distinguishing between AI business applications and AI enterprise capabilities

What are AI business applications?

These are your customer service chatbot ideas, your investment research copilot, your generative AI image marketing campaign, your supply chain negotiation copilot, your insights assistant for users etc. etc. etc.

They’re solutions that drop into a business function and deliver real value, helping the business accomplish the jobs it needs to do to make money or run the business.

What are AI enterprise capabilities?

These are your different accessible AI models (foundation, fine-tuned or unique models just for you), an AI-optimised knowledge base, your evals framework and evals, your fact-extraction solution, your agent orchestration framework, your retrieval capability etc. etc. etc.

They’re common ingredients that get combined into different recipes for particular use cases to drive business value - each with a route to scale and measurable confidence so people don’t reinvent the wheel with their ChatGPT prototype each time.

So once you’ve got your head around that, why does it matter?

It’s because all those business solutions are highly similar when it comes to the actual build! Almost every solution requires:

- AI-optimised knowledge to use as a reference ‘truth’ (and control hallucinations)

- End-to-end (E2E), comprehensive evals to monitor what good performance means for the specific application

- Flexible ways to retrieve information and context from the knowledge base

- A framework to orchestrate multiple uses of AI (e.g., multiple LLM calls) and/or combine AI with more deterministic functions (e.g., use a calculator, update the CRM).
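
To make the orchestration point more concrete, here is a minimal Python sketch of one LLM call choosing a deterministic tool, the tool doing the exact work, and a second LLM call phrasing the answer. Everything in it is hypothetical: `fake_llm` stands in for a real model client and simply returns canned text, and `calculator`, `TOOLS` and `orchestrate` are illustrative names, not any particular framework’s API. An enterprise orchestration framework would add validation, retries, logging and evals around each step.

```python
import json
import operator
from typing import Callable, Dict

# Hypothetical stand-in for a real LLM client: it returns canned text so the
# sketch runs offline. A real client would send the prompt to a hosted model.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("PLAN:"):
        # Pretend the model chose a tool and extracted its arguments as JSON.
        return json.dumps({"tool": "calculator", "args": {"a": 1200, "b": 3, "op": "mul"}})
    if prompt.startswith("SUMMARISE:"):
        # Pretend the model wrote a short answer from the text it was given.
        return "Summary: " + prompt[len("SUMMARISE:"):].strip()
    raise ValueError("unexpected prompt")

# Deterministic function: exact arithmetic instead of trusting the model's maths.
OPS: Dict[str, Callable[[float, float], float]] = {
    "add": operator.add,
    "sub": operator.sub,
    "mul": operator.mul,
}

def calculator(a: float, b: float, op: str) -> float:
    return OPS[op](a, b)

# Registry of tools the orchestrator is allowed to call (hypothetical).
TOOLS: Dict[str, Callable[..., float]] = {"calculator": calculator}

def orchestrate(question: str, llm: Callable[[str], str] = fake_llm) -> str:
    # Step 1: an LLM call decides which tool to use and with what arguments.
    plan = json.loads(llm("PLAN: " + question))
    # Step 2: the deterministic tool does the exact work.
    result = TOOLS[plan["tool"]](**plan["args"])
    # Step 3: a second LLM call turns the exact result into a user-facing answer.
    return llm(f"SUMMARISE: {question} -> {result}")

print(orchestrate("What is 1200 times 3?"))  # Summary: What is 1200 times 3? -> 3600
```

The design choice worth copying is that the number never comes from the model itself: the LLM only proposes which tool to call and with what arguments, and the deterministic function produces the result.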

Crucially, while a lot of these components can be prototyped with prompts or functionality inside tools like ChatGPT, Copilot, or Claude - they cannot be scaled without a meaningful enterprise implementation.

So the enablement team, guided by business priorities, needs to relentlessly focus on building these capabilities and then prove their value through business applications that use them and evolve alongside them. If you don't do this, you will be forever left with a list of use cases and a bunch of prototypes!

Highly aligned, loosely coupled

Once you draw this distinction, it becomes easier to see how you control the enablement function’s backlog and the relentless demand from the business.

If you focus on building (or buying) capabilities rather than applications, you can step out of the grind of the business application roadmap and shift it towards a more federated model, albeit one where business teams use your capabilities as their route to real production value.

Note - this isn’t to say tools like Enterprise ChatGPT or Microsoft Copilot aren’t useful. They absolutely are! But it’s about helping the business distinguish between where general-purpose assistants, or small-scale agents like GPTs, are useful and where a more enterprise-ready approach of thorough evals, hallucination control and complex function-calling is required.

The next-best first thing to do

This depends heavily on your unique context. We’re always up for a chat if that helps, but we’ve laid out a few common scenarios below.

If you have a long backlog but no idea what to do first

Analyse the backlog to determine the common components required to build each application. Answer the following questions for each use case you have:

- Do we need generative AI and LLMs to achieve this?

- Do we need an AI-optimised knowledge base for this to work - or are prompts / basic RAG enough?

- How complex is the retrieval - are we doing summaries or asking for multi-document comparisons?

- Is this a single LLM request problem - or do we need to orchestrate multiple LLM calls for this to work?

- What are the appropriate evals required to know this solution is working well and how complex are they?
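
One lightweight way to run this analysis is to record a yes/no answer to each question per use case and count how often each shared capability comes up. The sketch below assumes a hypothetical `UseCase` record and a hypothetical mapping from answers to capabilities; the example backlog entries are illustrative, not real data.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class UseCase:
    # One backlog row, with yes/no answers to the questions above (hypothetical schema).
    name: str
    needs_llm: bool
    ai_knowledge_base: bool = False  # False = prompts / basic RAG are enough
    complex_retrieval: bool = False  # e.g. multi-document comparisons, not just summaries
    orchestration: bool = False      # multiple LLM calls rather than a single request
    complex_evals: bool = False      # evals that are hard to define or automate

# Hypothetical mapping from answers to shared enterprise capabilities.
CAPABILITIES = {
    "ai_knowledge_base": "AI-optimised knowledge base",
    "complex_retrieval": "retrieval capability",
    "orchestration": "agent orchestration framework",
    "complex_evals": "evals framework",
}

def capability_demand(backlog: list[UseCase]) -> Counter:
    """Count how many generative AI use cases need each shared capability."""
    demand: Counter = Counter()
    for uc in backlog:
        if not uc.needs_llm:
            continue  # probably not a generative AI use case at all
        for field, capability in CAPABILITIES.items():
            if getattr(uc, field):
                demand[capability] += 1
    return demand

# Illustrative backlog entries only.
backlog = [
    UseCase("customer service chatbot", True, ai_knowledge_base=True, complex_evals=True),
    UseCase("investment research copilot", True, True, True, True, True),
    UseCase("invoice total checker", needs_llm=False),
]
print(capability_demand(backlog).most_common())
```

Capabilities near the top of the count are the ones where a single build benefits the most use cases, which is a useful lens when choosing the first use case to accelerate.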

If you can work through the answers to these questions (for both the first MVP and the likely scaled version of the solution), you’re on the right track. Then pick a valuable use case (the most important bit!) that will also accelerate the development of the common capabilities you’ll need for everything else on the list.

If you have a use case to build/scale asap

Perhaps you’ve already picked a use case and started building, or you have a prototype and are now stuck on how to get it into production.

First, work out how much of the code developed so far is reusable. If it’s all in-context prompts, that’s a great start, but it is very unlikely to be robust enough for a mass-scale enterprise deployment.

The critical questions remain similar but with a slightly different entry point:

- What is the acceptable rate of incorrect responses or missing data (50%, 20%, 0%)?

- What data sources does the use case use, and to what extent will other teams’ applications reuse those sources?

- How is performance intended (or already) measured for the solution? How are these evals reported on and interpreted by users?
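
Once you’ve agreed an acceptable rate, checking eval results against it can be as simple as the sketch below. It assumes each eval case has already been labelled `correct`, `incorrect` or `missing` (hypothetical labels, applied by a human reviewer or an automated grader), and that the thresholds are whatever the business signs off on; all names are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class EvalResult:
    case_id: str
    outcome: str  # hypothetical labels: "correct", "incorrect" or "missing"

def error_rates(results: list[EvalResult]) -> dict[str, float]:
    """Share of eval cases that were answered incorrectly or had missing data."""
    total = len(results)
    if total == 0:
        raise ValueError("no eval results to score")
    incorrect = sum(r.outcome == "incorrect" for r in results)
    missing = sum(r.outcome == "missing" for r in results)
    return {"incorrect": incorrect / total, "missing": missing / total}

def meets_threshold(results: list[EvalResult], max_incorrect: float, max_missing: float) -> bool:
    """True if both error rates are within the agreed acceptable limits."""
    rates = error_rates(results)
    return rates["incorrect"] <= max_incorrect and rates["missing"] <= max_missing

# Illustrative run: 8 correct, 1 incorrect, 1 missing out of 10 cases.
results = (
    [EvalResult(f"case-{i}", "correct") for i in range(8)]
    + [EvalResult("case-8", "incorrect"), EvalResult("case-9", "missing")]
)
print(error_rates(results))
print(meets_threshold(results, max_incorrect=0.2, max_missing=0.2))
print(meets_threshold(results, max_incorrect=0.0, max_missing=0.2))
```

The same 10% error rate passes a 20% bar and fails a 0% bar, which is why agreeing the acceptable rate up front matters as much as measuring it.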

Conclusion

Being on the (Gen)AI enablement team for a large enterprise is a huge privilege and a fantastic job: you get to sit in one of the driving seats for reinventing your business with AI.

Sadly though, that doesn’t mean it’s an easy gig! There are many unknowns to navigate, but with the right approach to delineating between applications and reusable capabilities, you can create a highly aligned and loosely coupled plan that will deliver value in the short-, medium- and long-term for the business.

We hope this blog has given you a few things to consider. If you have any more questions, feel free to contact us anytime.