5 reflections from our early access to OpenAI's new Realtime API

Introduction

By now, I’m sure most people have seen Advanced Voice Mode in ChatGPT.

A world where you can have a seamless, real-time conversation with an AI that sounds just like a human used to be pure science fiction, but it looks like that world has just become our reality.

However, the real power of this technology will be unlocked when it moves beyond a general-knowledge assistant on your phone and is customised and integrated into other real-world systems. This is where OpenAI's new Realtime API comes in. It lets developers stream audio to and from the model, supply their own system prompts, and use capabilities such as function calling.
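To make the idea of a customised session concrete, here is a minimal Python sketch of the kind of `session.update` client event used to set a system prompt, voice and tools on a Realtime API session. The event and field names reflect the beta API as we understand it and may have changed, so check them against the current API reference; the `lookup_shipment` tool and the prompt text are hypothetical examples. The sketch only builds the JSON payload; opening the WebSocket connection and sending it are left out.

```python
import json


def build_session_update(
    instructions: str,
    tools: list,
    voice: str = "alloy",
    temperature: float = 0.8,
) -> str:
    """Serialise a session.update client event.

    Field names follow the beta Realtime API as we understand it (an
    assumption; check the current API reference before relying on them).
    """
    event = {
        "type": "session.update",
        "session": {
            "modalities": ["text", "audio"],
            "instructions": instructions,  # the custom system prompt
            "voice": voice,
            "input_audio_format": "pcm16",
            "output_audio_format": "pcm16",
            "turn_detection": {"type": "server_vad"},  # server detects end of turn
            "tools": tools,
            "tool_choice": "auto",
            "temperature": temperature,
        },
    }
    return json.dumps(event)


# Hypothetical tool definition: look up a shipment's status in a logistics system.
LOOKUP_SHIPMENT_TOOL = {
    "type": "function",
    "name": "lookup_shipment",
    "description": "Look up the current status of a shipment by its reference number.",
    "parameters": {
        "type": "object",
        "properties": {"reference": {"type": "string"}},
        "required": ["reference"],
    },
}

payload = build_session_update(
    instructions="You are a friendly logistics account manager. Keep answers brief.",
    tools=[LOOKUP_SHIPMENT_TOOL],
)
```

Keeping the prompt, voice and tools in one payload makes it easy to swap scenarios without touching the audio-streaming code.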

At Tomoro AI, we've had early access to this API and have been putting it through its paces across various client scenarios. We're excited to share some of the standout features that genuinely blew us away, along with some challenges to watch out for if you're thinking of integrating this technology into your operations.

The model has exceeded our expectations, representing a significant leap forward from what was previously possible in AI voice interactions. With the right implementation approach and controls, it has the potential to revolutionise custom voice-based applications in many organisations. Despite some technical and human challenges to overcome, significant value is now clearly within reach.

Enabling user testing and feedback

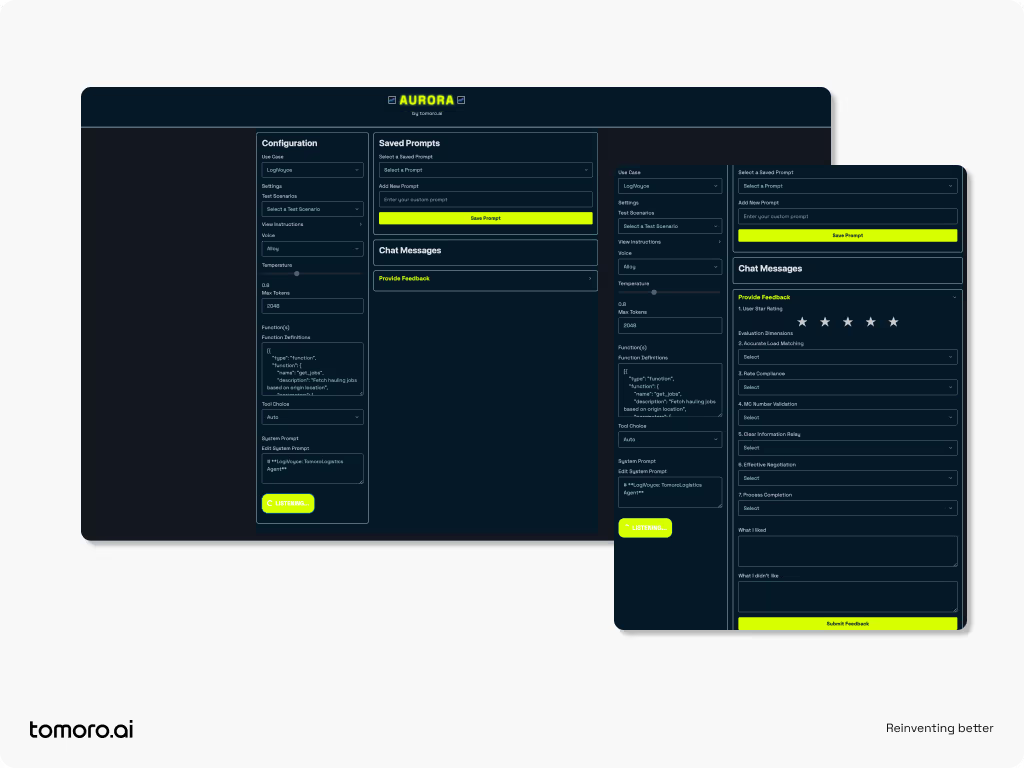

To test this new capability, we built a test harness (Aurora) that allows users to:

- input system prompts,

- tweak hyperparameters,

- load different business scenarios (e.g. B2B logistics, B2C customer support, strategic ideation) and

- capture quantitative and qualitative feedback from users (a structured scorecard and qualitative human ratings).

This was stood up in a matter of hours, thanks in no small part to the contemporaneous launch of o1-preview and o1-mini!

Screenshots of the Tomoro test harness "Aurora", showing configuration options and feedback collection.
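Aurora's internals aren't covered here, so the following is only a hypothetical Python sketch of how a harness like it might model scenarios and scorecard feedback: each scenario bundles a system prompt with hyperparameters, and structured ratings are averaged per criterion across testers. The scenario names mirror the examples above, but the prompts, the criteria and the 1 to 5 rating scale are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Scenario:
    """A business scenario: a system prompt plus hyperparameters (hypothetical)."""
    name: str
    system_prompt: str
    temperature: float = 0.8
    voice: str = "alloy"


@dataclass
class Scorecard:
    """One tester's structured ratings (1-5 per criterion) plus a free-text comment."""
    scenario: str
    ratings: Dict[str, int]
    comment: str = ""

    def __post_init__(self) -> None:
        # Reject out-of-range scores so the averages stay meaningful.
        for criterion, score in self.ratings.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{criterion} rating must be between 1 and 5, got {score}")


# Illustrative scenarios; the prompts are placeholders, not real client prompts.
SCENARIOS = {
    "b2b_logistics": Scenario("B2B logistics", "You negotiate delivery terms with a logistics client."),
    "b2c_support": Scenario("B2C customer support", "You help customers resolve order issues."),
    "strategic_ideation": Scenario("Strategic ideation", "You facilitate a strategy workshop.", temperature=1.0),
}


def summarise(cards: List[Scorecard], scenario: str) -> Dict[str, float]:
    """Average each criterion across all scorecards submitted for one scenario."""
    relevant = [card for card in cards if card.scenario == scenario]
    criteria = sorted({c for card in relevant for c in card.ratings})
    return {
        c: mean(card.ratings[c] for card in relevant if c in card.ratings)
        for c in criteria
    }
```

The free-text `comment` field keeps the qualitative feedback alongside the scores, so low averages can be traced back to specific observations.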

What impressed us about the new API


Impressive low latency unlocks real-time interactions

One of the first things we noticed was the model's lightning-fast response time. Low latency is crucial for real-time conversations, and this model delivers. It enables use cases we previously only dreamed of, such as dynamic customer service calls, live workshops, or even AI-powered team meetings.

Exceptional ability to follow instructions

The model shines when it comes to understanding and following complex instructions. In our tests simulating business-to-business negotiation, it consistently adhered to predefined negotiation limits and accurately processed operational data, whilst maintaining a warm, friendly, approachable tone. This reliability mirrors what we already see in text interactions with GPT-4o and gives us confidence that the models will be able to stay within policy guidelines and meet operational needs.

Natural and engaging conversations

User experience is everything, and this model doesn't disappoint. It maintains a friendly, professional tone, making interactions enjoyable and more human-like. It's not just about the words it uses but how it says them: intonation, pacing and flow all contribute to a better conversational experience. As the selection of voices to choose from grows, the experience should improve further across different scenarios.

Smooth integration with existing systems

We were pleased with how easily the model integrates with other systems through function calling. Whether it's querying databases or updating records in response to conversational cues, the model handles it seamlessly. This is a big win for businesses looking to connect the model to their current operations.
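The function-calling loop itself is short. Below is a minimal Python sketch, assuming the beta Realtime API event shapes as we understand them: a completed call surfaces as a `response.output_item.done` server event whose item has type `function_call` plus `name`, `call_id` and a JSON `arguments` string, and is answered with a `function_call_output` conversation item followed by `response.create`. Verify these names against the current API reference. The `lookup_shipment` function and its in-memory data are hypothetical stand-ins for a real database query.

```python
import json
from typing import Any, Callable, Dict, List


def lookup_shipment(reference: str) -> Dict[str, Any]:
    """Hypothetical stand-in for a database query."""
    fake_db = {"SH-1001": {"status": "in transit", "eta_days": 2}}
    return fake_db.get(reference, {"error": f"unknown reference {reference}"})


# Map tool names (as declared to the model) to local Python functions.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {"lookup_shipment": lookup_shipment}


def handle_server_event(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the client events to send back for a completed function call.

    Any other server event yields an empty list.
    """
    if event.get("type") != "response.output_item.done":
        return []
    item = event.get("item", {})
    if item.get("type") != "function_call":
        return []

    fn = TOOLS.get(item.get("name", ""))
    if fn is None:
        result: Dict[str, Any] = {"error": f"unknown tool {item.get('name')}"}
    else:
        try:
            result = fn(**json.loads(item.get("arguments") or "{}"))
        except (json.JSONDecodeError, TypeError) as exc:
            # Malformed or unexpected arguments: report back instead of crashing.
            result = {"error": str(exc)}

    return [
        {
            "type": "conversation.item.create",
            "item": {
                "type": "function_call_output",
                "call_id": item["call_id"],
                "output": json.dumps(result),
            },
        },
        # Ask the model to continue the conversation now that it has the result.
        {"type": "response.create"},
    ]
```

Returning errors to the model as tool output, rather than raising, lets it apologise or ask the user to repeat a reference instead of stalling mid-call.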

Handles multi-participant conversations with ease

In scenarios involving multiple participants, such as team ideation sessions or strategy workshops, the model held its own. It managed the flow of conversation smoothly, adapting to different speakers and providing relevant input without missing a beat.

Challenges and how to overcome them

While we're excited about the possibilities, it's important to be aware of some challenges that come with implementing this technology. Here's a rundown of what we found, and some high-level suggestions on how best to tackle them:

Substitution errors and misquotations

Challenge:

The model occasionally misquotes numbers or mispronounces terms, which can lead to confusion. This is especially critical in contexts like financial transactions.

Mitigation Strategy:

Optimise prompts to be shorter and clearer. Wherever possible, rely on external capabilities such as function calling and structured outputs rather than complex prompts. Limit the model's responses to verified data sources, and implement moderation and validation for critical steps.
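One simple validation step for numbers is to compare every figure in the model's response (for example, its text transcript, where available) against the figures your systems actually supplied, and flag anything unexpected, for instance before a quoted figure triggers an action or is confirmed in writing. The Python sketch below is a hypothetical illustration of that check; it only catches digits, so numbers spoken as words ("five") would need an extra normalisation step.

```python
import re
from decimal import Decimal
from typing import List, Set

# Digits with optional thousands separators and decimal part, e.g. "1,250.00".
NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?")


def extract_numbers(text: str) -> List[Decimal]:
    """Pull numeric values out of free text, ignoring thousands separators."""
    return [Decimal(match.replace(",", "")) for match in NUMBER.findall(text)]


def unverified_numbers(transcript: str, verified: Set[Decimal]) -> List[Decimal]:
    """Return numbers mentioned in the transcript that no trusted source supplied."""
    # Decimal compares and hashes by value, so Decimal("1250.00") matches Decimal("1250").
    return [n for n in extract_numbers(transcript) if n not in verified]
```

Any non-empty result is a cue to pause, re-query the source system, or ask the user to confirm, rather than proceeding on a misquoted figure.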

Audio quality and artifacts

Challenge:

Users sometimes experienced inconsistent audio quality, including artifacts such as unintended sound effects, which affected call clarity.

Mitigation Strategy:

Consider post-processing the audio output to smooth out artifacts, bearing in mind that this will add latency. Custom voice tuning, if it becomes available in future, may also improve clarity and consistency.
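As a rough illustration of what post-processing could look like, the Python sketch below decodes a base64 audio chunk into 16-bit samples and applies a centred moving average, a crude low-pass filter that softens clicks and other high-frequency artifacts. It assumes little-endian 16-bit PCM (the `pcm16` output format), and the window size is an illustrative choice, not a tuned value. A filter like this will not remove artifacts the model generates as content, such as stray sound effects.

```python
import base64
import sys
from array import array


def decode_pcm16(b64_audio: str) -> array:
    """Decode a base64 chunk of little-endian 16-bit PCM into signed samples."""
    samples = array("h", base64.b64decode(b64_audio))
    if sys.byteorder == "big":
        samples.byteswap()  # array uses native byte order
    return samples


def smooth_pcm16(samples: array, window: int = 5) -> array:
    """Centred moving average over 16-bit samples (a crude low-pass filter).

    Edge samples use a truncated window. The average of int16 values always
    fits back into int16, so no clipping is needed.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    n = len(samples)
    out = array("h", bytes(2 * n))  # n zero-valued samples
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        out[i] = round(sum(samples[lo:hi]) / (hi - lo))
    return out


def encode_pcm16(samples: array) -> str:
    """Re-encode samples as base64 little-endian 16-bit PCM."""
    if sys.byteorder == "big":
        samples = array("h", samples)  # copy before swapping
        samples.byteswap()
    return base64.b64encode(samples.tobytes()).decode("ascii")
```

Processing each chunk independently, as here, treats chunk edges with a truncated window; a streaming version would carry a few samples across chunk boundaries, and that lookahead is one source of the extra latency mentioned above.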

Accuracy of information capture

Challenge:

Occasionally, the model struggled to capture key details such as names accurately, which reduced the accuracy of the information gathered from users.

Mitigation Strategy:

Double-check any key information captured by confirming it with users. Depending on the context, this could be done via audio or via on-screen text.
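A lightweight way to double-check captured details is to read them back in an unambiguous form, such as spelling a name letter by letter, and only write them to a record once the user has confirmed. The Python sketch below is a hypothetical illustration of that pattern; the phrasing and the simple yes-word check are assumptions, and in practice you might let the model judge the user's reply or show the spelling on screen instead.

```python
from dataclasses import dataclass
from typing import Dict

# Simple set of replies treated as confirmation (an illustrative assumption).
AFFIRMATIVE = {"yes", "yeah", "yep", "correct", "that's right", "that is right"}


def spell_out(value: str) -> str:
    """Spell each word letter by letter, e.g. 'Anna Smith' -> 'A-N-N-A, S-M-I-T-H'."""
    return ", ".join("-".join(word.upper()) for word in value.split())


def is_affirmative(reply: str) -> bool:
    """True if the reply, ignoring case and trailing punctuation, is a plain yes."""
    return reply.strip().lower().rstrip(".!") in AFFIRMATIVE


@dataclass
class CapturedField:
    name: str
    value: str
    confirmed: bool = False

    def read_back(self) -> str:
        """Text to speak (or display) so the user can check the captured value."""
        return f"I have your {self.name} as {spell_out(self.value)}. Is that right?"

    def apply_reply(self, reply: str) -> None:
        self.confirmed = is_affirmative(reply)


def commit(record: Dict[str, str], captured: CapturedField) -> None:
    """Write a field to the record only after the user has confirmed it."""
    if not captured.confirmed:
        raise ValueError(f"{captured.name} has not been confirmed by the user")
    record[captured.name] = captured.value
```

Refusing to commit unconfirmed values makes the check hard to skip by accident, which matters most for details like names that are easy to mishear.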

The human side of AI conversations

As AI models become more human-like in conversation, it's not just about the technology; it's also about how people feel using it. Will customers or employees be comfortable talking to an AI? How should the AI handle nuances like humour, empathy, or frustration?

It felt surprisingly good when the model complimented us on our negotiation skills during one interaction. Moments like this highlight how the technology opens up interesting new ways to enhance user experience and build customer relationships.

Example transcript of part of a conversation with the voice agent

Questions like these matter because the etiquette for interacting with AI isn't fully established yet. Companies need to consider user experience and set clear expectations. It's also crucial to design AI interactions that respect privacy and build trust.

Conclusion

Integrating advanced AI models into your business isn't a plug-and-play situation. The challenges we've highlighted show that while the technology is powerful, it requires careful implementation. That's where experience makes a big difference.

We’re all on a journey learning how best to design systems that incorporate this powerful new paradigm into our interactions and workflows. The prizes are clear for those who achieve it successfully.

Thanks to our colleagues at OpenAI for the opportunity to collaborate on the alpha for this exciting new capability!