Introduction

Over the last year, weʼve helped companies unlock the full potential of their data. Equipped with a foundation of quicker and more actionable insights, they are defining the frontiers of whatʼs possible with AI in their respective industries — and achieving market-leading positions as a result.

Retrieval-Augmented Generation (RAG) is at the heart of this.

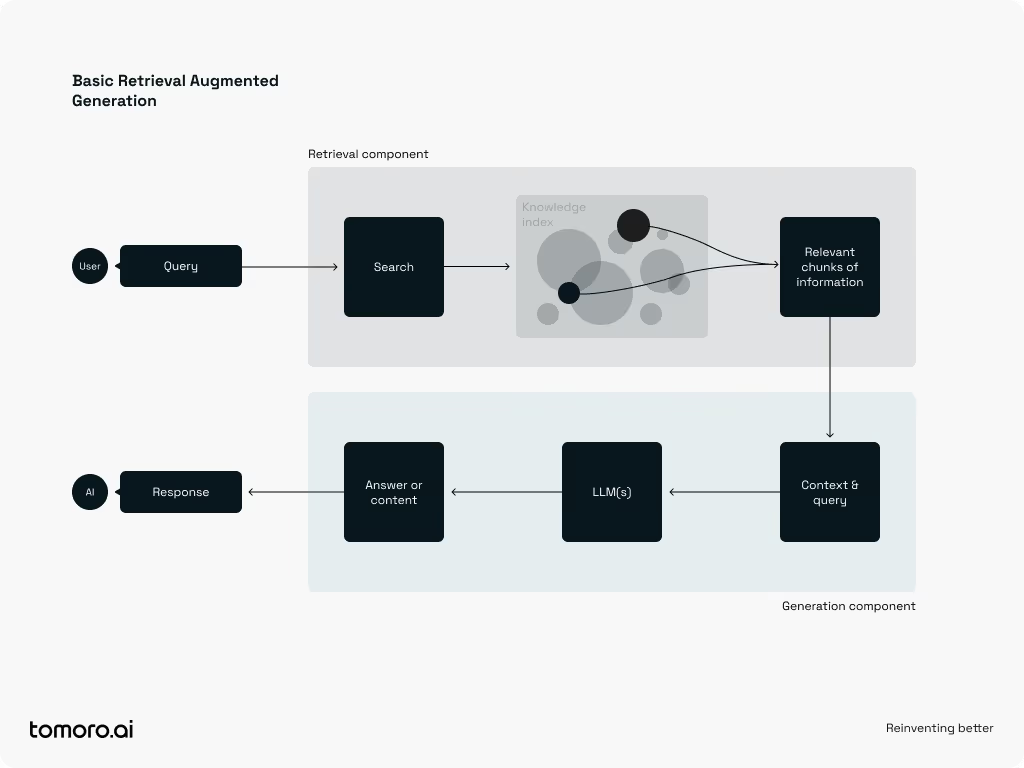

RAG leverages your data to enhance AI responses by retrieving and integrating relevant data from your sources during generation. Here's how it works:

-

Retrieval component

Identifies and fetches the most relevant pieces of information from your databases for any given question or task. -

Generation component

Once the key information is retrieved, a Generative AI model such as GPT-4o uses that data to answer queries, create summaries, or even create new content.

Weʼve noticed businesses exploring RAG are often confronted with a labyrinth of options and complexities. This slows their progress. This guide aims to help you navigate these challenges and set your projects up for success.

1. Garbage in, garbage out: The importance of getting your data right

The success of any RAG system hinges on the quality of your data. Itʼs a simple principle, but one that can be surprisingly difficult to get right.

Data quality is key

Take time to ensure that your data is accurate, up-to-date, and well-structured. If you do this right, you will only have to do it once.

We worked with a leading investment management company to create a unified knowledge base across terabytes of data, forming a strong foundation for RAG systems that deliver value across their organisation.

Large Language Models (LLMs) play a critical role here too. The days of spending countless hours categorising files manually are a thing of the past. You can quickly configure LLMs to clean and label your data, helping you to transform chaos into clarity.

Maintaining data privacy

For many organisations, making data more accessible across teams is new territory, often raising questions about who should see what. It may even uncover situations where employees have had access to data they shouldnʼt.

These are critical challenges. But they are solvable.

RAG systems can be built in a way that addresses these concerns, even down to managing access at a single document level. This ensures that users only see what they are authorised to see, helping your organisation remain compliant with regulations and internal policies whilst empowering teams to collaborate more effectively without the fear of data leaks or privacy breaches.

2. Build something people need

For any project to succeed, it must address real challenges. Engage your users early to uncover their pain points and try to understand how you can solve them.

In many cases, RAG can be an effective tool to help alleviate these challenges. Across industries, RAG often lies at the heart of larger solutions. Weʼve leveraged RAG to build cutting-edge applications for clients ranging from customer service chatbots handling millions of interactions to better-than-human data cleansing.

By building small prototypes that incorporate RAG and sharing them with users for feedback, you can refine your systems to be both intuitive and user-friendly. Keeping users at the centre of your development process ensures youʼre creating technology that teams truly value — and actually use. This is critical for translating your AI investment into competitive advantage.

We worked with a global drinks company to run a global marketing campaign focused on helping students develop start-up ideas ideas that would make a positive impact to people, community and the planet. The ambitious goal was to increase applications by over 20 times compared to 2023. To achieve this, we integrated GenAI into every aspect of the user experience. From generating personalised starter ideas to simulating expert advice on crafting compelling communications, GenAI played a pivotal role in driving engagement and delivering exceptional results.

3. The dark art of RAG: Retrieval

Context management is king in RAG systems. Itʼs all about getting the right data to the right model at the right time.

There are many ways to optimise your systemsʼ performance, but we often see the most gains by focusing on improving the retrieval step - the search for the data to answer a userʼs question.

What works best will depend on your specific use case, but we can share a few best practices that will help improve your systemsʼ performance across many domains.

Step 1: Getting your data structure right

Once youʼve cleaned and labelled your data, there are a few different options for storing it.

-

Vector database

-

A vector database is ideal for quickly locating relevant chunks of information based on meaning rather than exact keywords. This works best when you have primarily unstructured data and your dataset has a relatively narrow domain.

-

Example use case: Customer service chatbot.

-

-

Mixed vector-keyword database

-

This approach combines vector search with metadata (e.g., topic tags) attached to chunks of data, allowing the system to focus on specific themes that the user can choose. It is particularly beneficial as datasets grow larger or span multiple distinct topics.

-

Example use case: Company- or division-wide knowledge base.

-

-

Graph database

-

A graph database encodes relationships between pieces of information. This makes them invaluable for very large datasets or those where understanding the connections between data is crucial.

-

Example use case: A tool for searching over medical literature.

-

Step 2: Enhancing your database search

-

Rephrase usersʼ questions

Users may not provide the clearest starting point. Rewriting their queries — incorporating prior messages or user context — can offer a better path to the answer. -

Expand the question

On knowledge-intensive tasks, broadening the user query to related topics can help to uncover overlooked insights. Subtle nudges to explore adjoining areas can reveal connections that users might miss.

Step 3: Enhancing the data you retrieve

-

Re-rank your content

Much like people, LLMs benefit from seeing the most important details first or just before they answer. By prioritising key information, you reduce the risk of critical insights being missed. -

Iterate and fill in the gaps

Sometimes retrieval doesnʼt find everything in one go. Pausing to check if anythingʼs missing before returning a response will ensure a more complete answer — you donʼt want to leave vital details behind.

When embedding models donʼt quite work

For more nuanced data, such as technical or legal data, common embedding models can struggle to capture subtle but pivotal distinctions. In these cases, you can instead use an LLM to label relevant pieces of information more precisely.

These designs deliver significantly improved retrieval accuracy with trade-offs in latency and cost. However, as the cost of AI has dropped by over 99% in the past two years, the development of bespoke systems like this more accessible than ever.

4. Evaluating your RAG system

Once youʼve built your RAG system, you face one last hurdle - getting your team to trust it.

Define clear metrics

Start by identifying the key metrics that reflect your systemʼs performance. Common metrics include accuracy, relevance, speed, and user satisfaction. Tailor these metrics to align with your organisationʼs priorities. For instance, a customer support bot might prioritise safety and speed, whilst an investment knowledge base may emphasise accuracy and relevance.

Leverage synthetic data

If youʼre building a RAG system for a workflow without a lot of historic process data, it can be difficult to evaluate the performance of your system. In this case, synthetic data generation can be useful to get a benchmark. Using LLMs, you can quickly generate example user inputs or underlying data to test how your system performs. This helps you tune the system before pilot testing.

Put it in front of real users

Synthetic benchmarks are useful, but nothing beats real-world testing. As soon as you can, deploy your system for a pilot with your most engaged end users to:

-

Highlight gaps in functionality

-

Uncover unexpected user needs

-

Gather insights into adoption barriers and areas for improvement

Build trust through transparency

Build explainability into your system. Transparency is key for building user trust, particularly in regulated or high-stakes industries. Build explainability into your system by:

-

Showing users the sources of retrieved data — you can keep this high-level and link to source documents or even reference down at a sentence level

-

Providing insights into how the system generated its responses

-

Allowing users to flag answers they find unclear or incorrect

5. Building for the future

RAG was the star of the enterprise AI show in 2024. But, forward-looking organisations are already asking: whatʼs next?

Future-proof your system

Build a flexible RAG system. New AI models and tools are emerging every day - this way you will be able to quickly integrate them without major overhauls.

Embrace fine-tuning

Organisations at the forefront of enterprise AI are turning to fine-tuning to unlock more complex workflows and higher-value automation. Fine-tuning involves retraining a general purpose model to perform more effectively within a specific task or domain.

At a high level, the enterprise use cases for fine-tuning can be thought of as falling into two categories:

- Driving higher performance:

When general LLMs fail to meet desired performance standards, fine-tuning on relevant, high-quality data can bridge that gap. This typically happens when you're working with specialised data, such as medical, legal, or technical information, that isn't well-represented in the public datasets used to train LLMs. Fine-tuning allows you to create a bespoke model that surpasses general models in accuracy, specificity, and reliability for your particular use case.

We developed a “Prompt Guard” for an international transportation firm’s customer-facing chatbot to block inappropriate content and protect their reputation. Initially, we implemented a prompt-engineered GPT-4o-mini solution, achieving c.92% accuracy in rejecting unsuitable messages. By prototyping and collecting data from this solution, we built a dataset to fine-tune GPT-4o-mini, ultimately surpassing 99% accuracy in detecting illicit messages while significantly reducing false positives.

- Saving cost:

By distilling knowledge from a larger LLM into a smaller one—such as using responses from o1 to fine-tune GPT-4o-mini—you can maintain comparable levels of performance in a narrow domain while significantly lowering operational expenses. This is particularly powerful for use cases which process a lot of data or have large numbers of users.

We worked with a leading international insurance firm to migrate an internal productivity copilot from GPT-4o to a fine-tuned GPT-4o-mini, achieving a 50x cost saving whilst maintaining performance.

There are several ways to fine-tune LLMs. A common entry point is Supervised Fine-Tuning (SFT), where you train the model to produce correct, domain-specific responses based on a curated set of input-output examples.

OpenAI have recently introduced two new fine-tuning methods which can be used to push models to the next level.

-

Preference Fine-Tuning: leverages a technique known as Direct Preference Optimisation (DPO). DPO is a powerful method for aligning your model to a specific style or use case. By pairing examples of a good and bad answer to a given question, you can fine-tune the models to align with your usersʼ preferences. This is particularly powerful when combined with SFT.

-

Reinforcement Fine-Tuning: enables you to fine-tune OpenAIʼs o-series models. By scoring the modelsʼ outputs with a reward model, these models can learn the correct reasoning pathways to solve your most complex problems. Notably, with Reinforcement Fine-Tuning you do not need to spend time creating long or complex sample answers. You only need to provide questions and final outputs, and this fine-tuning approach will do the rest.

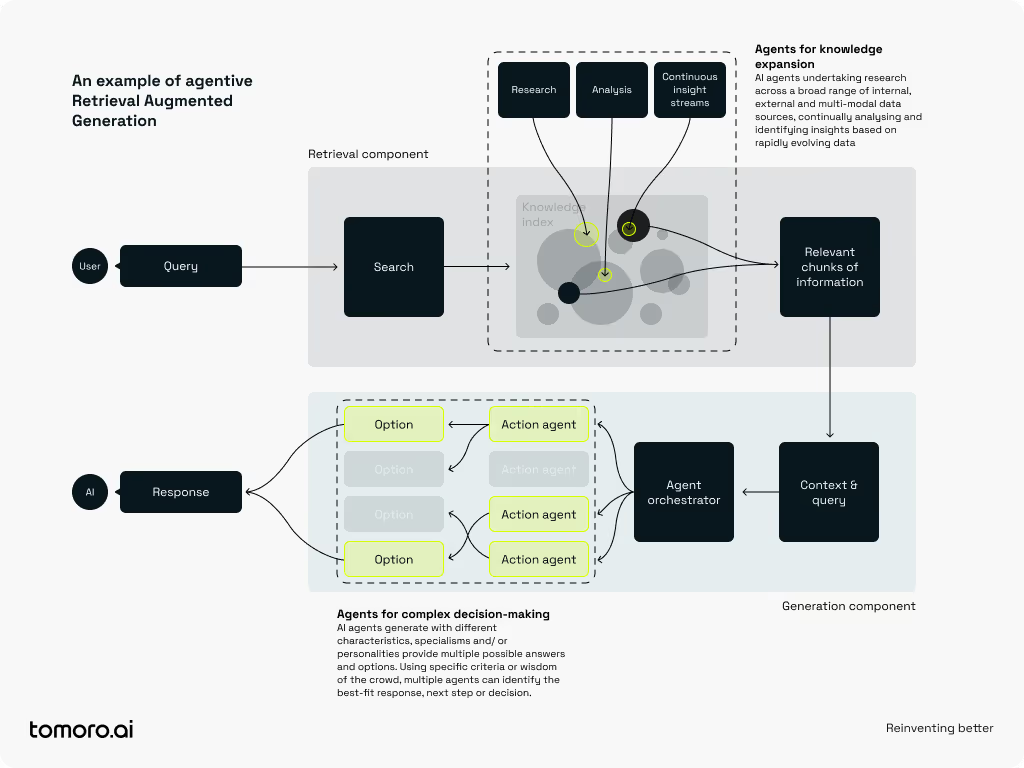

Prepare for agents

The future of RAG doesnʼt end with improved retrieval and cost reductions - itʼs about orchestrating advanced AI components to enable entirely new classes of solutions.

What does this look like in practice?

-

Knowledge expansion:

In this article, weʼve outlined how your organisation can get the most out of yesterdayʼs data. With todayʼs LLMs, you can do much more than that. AI can be used to unlock and manage entirely new data streams that have been out of reach before -

Integrating decision-making agents:

By combining RAG with agents that can reason, plan, and act on your behalf, you can automate complex workflows that were previously manual or reactive. Instead of simply retrieving information, these agents continuously analyse conditions, evaluate options, and initiate actions to achieve your desired outcomes

Maybe this all sounds like science fiction? Itʼs not. Itʼs happening now.

We recently partnered with a major sports league to deploy an AI agent that leverages RAG to analyse fresh game insights in the context of historical data, autonomously generates tailored content for fans, and decides when to publish based on its own criteria for whatʼs timely and exciting.

This system isnʼt just delivering information — itʼs making editorial judgments and taking action on them.

The Path Forward

Deploying a successful RAG project isnʼt just about adopting the latest technology — itʼs about delivering measurable business value. At Tomoro, we specialise in aligning advanced AI solutions with your strategic priorities to achieve real impact. Whether youʼre launching your first RAG initiative or looking to take your production-ready system to the next level, our team is here to guide you every step of the way.

Are you ready to lead the charge into 2025?

Connect with our specialists today and start transforming your vision into reality.