Introduction

Large Language Models and AI agents are reshaping how we interact with technology. We're increasingly collaborating with AI in dynamic, conversational environments. In this blog, we explore six emerging patterns in AI Experience Design (AI XD).

We've all experienced disappointing interactions with AI, or know people who "hate chatbots", often because the 'vanilla' experience isn't enough to make it feel useful, valuable or joyful. Very often, the disappointment we feel during the interaction has less to do with the capabilities of the model and more to do with the experience: the interaction between a person (or people), an AI agent and a desired outcome. It's a significant barrier to adoption, especially in an enterprise setting where the design of interactions between humans and AI can contribute to overwhelm, ignore real-time context or even disregard user intent and behaviour entirely.

From this perspective, we've set out the benefits each pattern offers, the challenges we face from a design perspective, and some practical guidance on how to leverage it. These patterns aim to be agnostic of interaction type or modality, offering opportunities within external, consumer-facing experiences or internal AI solutions that support knowledge workers in making better decisions.

We want to share the emerging patterns we're seeing - from both building and playing with many generative AI solutions - to help design better human-AI relationships.

Why do we feel these emerging design patterns are important?

There are hallmarks of our interactions with technology today, both as knowledge workers and consumers, that make getting things done more difficult than it should be. They limit our productivity, effectiveness and value.

For example, knowledge workers and consumers alike are overwhelmed by the sheer volume of information, choices, and tools we have. This constant barrage of messages, often contradictory, prevents us from making clear decisions or achieving the focus we need. In fact, studies show that employees spend up to 40% of their workday just searching for the information they need [McKinsey, Reference]. This information overload hinders not only productivity, but also happiness.

Additionally, we all face a limited capacity for attention. Even if we could find it, we simply can't process or analyse all of the knowledge required to make informed decisions. We can only focus on a small subset of available data at any given time, often clinging to existing beliefs or ideas, which reduces our ability to consider alternative solutions or outlying patterns.

Then we have the tools themselves, which often fail to recognise these limitations. Most technology is designed for "averages": one-size-fits-all solutions that don't adapt to individual contexts. Whether it's the software we use for work or consumer-facing apps, these tools don't account for our specific needs, constraints or the unique circumstances in which we're working. As a result, technology ends up being inefficient or counterproductive. In this sense, generative AI offers a unique opportunity to enable more adaptive, personal interactions.

Finally, there is an inherent misery in many work environments. Increasing volumes of 'busywork', the likelihood of needing to be 'always-on' and the need to repetitively perform non-value-adding tasks contribute to disengagement and chronic stress. While generative AI and AI agents hold the potential to remove this misery by reducing or even eliminating these types of experience, there is a mistrust of AI's ability to improve work overall. People often resist the idea of "AI taking over", perhaps due to a fear of displacement, but also because we feel a sense of ownership over our own work - bull**it work included.

The application of AI alone isn't a solution. Instead of improving experiences, poorly integrated AI creates new tasks and anxieties, especially for knowledge workers. These types of challenge create the need for human-AI interactions that are not only efficient, but aligned with our cognitive capabilities, individual circumstances and the constraints we're under as people. The six patterns set out below represent emerging approaches to designing interactions that acknowledge people, fostering a more adaptive, transparent, and meaningful relationship with AI.

Six emerging design patterns for human-AI interaction

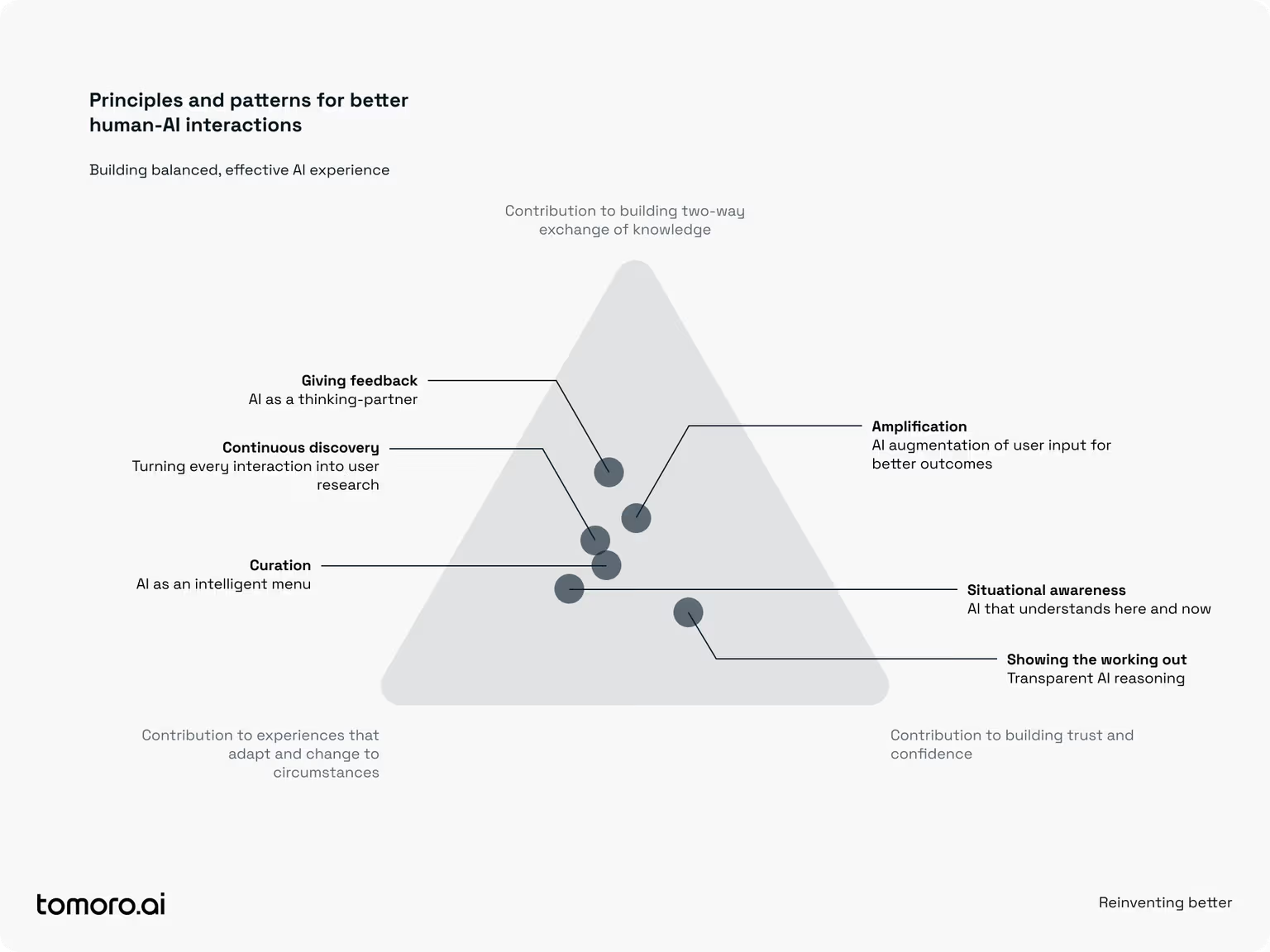

[The relationship between emerging design patterns and principles to guide effective, human-centred AI experiences]

- Curation: AI as an Intelligent Menu - AI curates and organises information, reducing overwhelm by helping us navigate vast datasets.

- Giving Feedback: AI as a Thinking Partner - AI provides real-time, thought-provoking feedback that challenges assumptions and offers alternative perspectives to improve work.

- Situational Awareness: AI that Understands Here and Now - AI adapts to real-time context, making interactions more relevant by continuously processing environmental and user needs.

- Continuous Discovery: Turning Every Interaction into User Research - AI uses every user interaction to uncover insights and refine its understanding, ensuring ongoing learning and improvement.

- Amplification: AI Augmentation of User Input for Better Outcomes - AI enhances user input behind the scenes to produce better results while preserving the user's original intent.

- Showing the Working Out: Transparent AI Reasoning - AI makes its decision-making process visible and useful, fostering trust and enabling better, more informed decision-making by users.

These six patterns highlight the fact that designing effective AI solutions is about much more than the user interface. It is about shaping and coordinating the behaviour of both people and AI agents. Ultimately, we are aiming to facilitate the overlaps between decisions, humans and AI agents within complex systems of knowledge. These patterns allow us to improve interactions between people and AI systems by:

- Prioritising two-way knowledge exchange: enabling a dialogue where both AI and humans contribute ideas, context, and insights. This will be the key to developing more capable AI systems, equipped with expert knowledge and intuition that isn't codified today.

- Creating experiences that adapt and change across circumstances: AI tools and applications can become obsolete or irrelevant very quickly. AI systems that have situational awareness, self-learn, and adapt to changing context and the unique circumstances of a user will remain far more useful over time.

- Improving trust and confidence: transparent, collaborative interactions lower barriers to usage, drive higher engagement and enhance both human and AI decision-making. Design helps people remain confident in the system's decisions and outputs over time.

Summary: Moving Beyond the Bot

The design of AI experiences is not trivial, but there are battle-tested approaches to navigating the challenges. At a fundamental level, similar principles of understanding users and designing great experiences apply, but there are new factors that we have to account for. We're now designing for AI with agency, as well as for people. Great AI experiences focus on meaningful interactions between people and AI systems. Experiences that curate information effectively, give useful feedback, and learn and adapt transparently can reduce overload, improve decision-making, and build trust.

These patterns help overcome common hurdles to adoption, making AI genuinely helpful, adaptable, and more valuable for everyone involved.

If you would like to dive deeper, the guide below provides more detail on each pattern, including the different ways we can leverage the pattern as part of a broader AI experience.

1. Curation: AI as an intelligent menu

What it is: Instead of users manually sifting through endless search results or documents, AI agents can curate information from vast datasets and present the most relevant insights. In essence, the AI becomes a librarian, organising knowledge to fit the user's context.

Why it's useful: Put simply, it reduces information overload. Consider that the average knowledge worker spends 1.8 hours per day searching for information, contributing to burnout for nearly half of employees [McKinsey, Reference]. AI-driven curation tackles this by indexing, finding, and combining information from a vast array of sources, often helping a person to find information, options or insights they might not have found independently through traditional search.

The challenge: Moving from a traditional GUI-based search to an agent-assisted approach requires a shift in user expectations. People are accustomed to search engines showing a list of links they can manually verify or buttons they can press to filter a data set. An AI curator, by contrast, synthesises answers from sources the user may not even know exist. The trade-off is that users perceive less direct control over filtering and must trust the agent's choices. We need to help users adapt to this new paradigm by blending AI-driven curation and collaborative design with more familiar mechanisms of searching for and finding information.

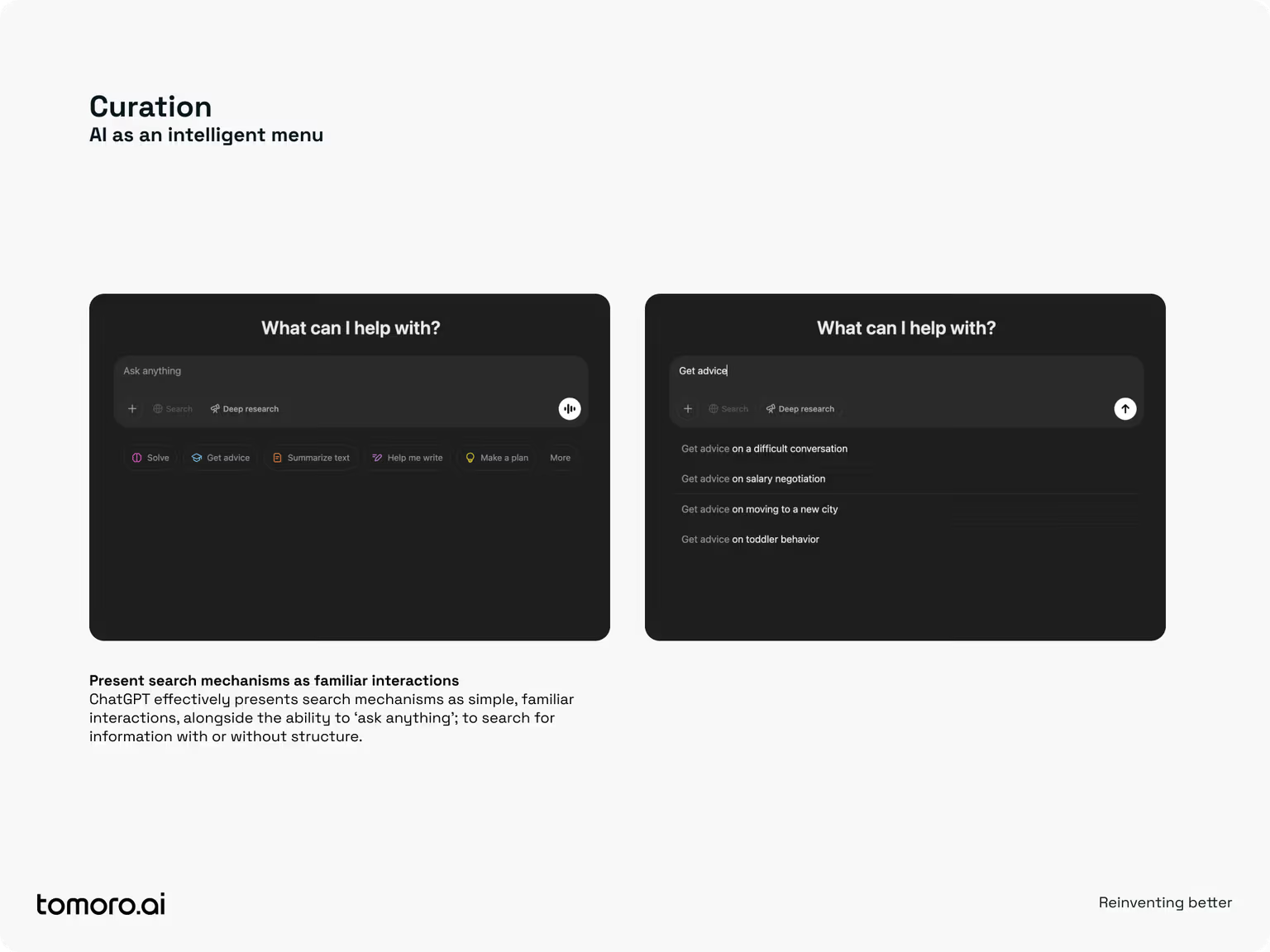

[How OpenAI utilise simple, familiar interaction patterns to enable structured and unstructured discovery of information]

Useful approaches to AI curation:

Present search mechanisms as familiar interactions:

To ease the transition from traditional search, present search mechanisms as familiar interactions, rather than blank canvases. If users feel like the experience is an evolution of what they know - presenting options, questionnaires or contextual suggestions - they'll be more comfortable.

Use buttons as prompts instead of requiring open-ended input:

Rather than relying solely on free-text input, which can be intimidating or time-consuming, pre-set or auto-generated buttons make curation more accessible. For example, "Filter by most recent", "Compare pricing", or "Include global sources" can instantly guide the AI to refine its curation. This lowers the barrier to user input, encourages exploration, and shapes the AI's recommendations by clarifying user intent.
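As a rough illustration of the idea, each button can be treated as a small, predictable change to a structured query that is handed to the curator. This is only a sketch under our own assumptions: `CurationQuery`, its fields and `apply_button` are hypothetical names, not part of any particular product or library.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class CurationQuery:
    """Hypothetical structured parameters passed to an AI curator."""
    text: str
    sort_by: str = "relevance"
    compare_field: Optional[str] = None
    regions: Tuple[str, ...] = ("local",)


# Each button label maps to one small refinement of the query.
BUTTON_ACTIONS = {
    "Filter by most recent": lambda q: replace(q, sort_by="date_desc"),
    "Compare pricing": lambda q: replace(q, compare_field="price"),
    "Include global sources": lambda q: replace(q, regions=q.regions + ("global",)),
}


def apply_button(query: CurationQuery, label: str) -> CurationQuery:
    """Return a refined copy of the query; unknown labels leave it unchanged."""
    action = BUTTON_ACTIONS.get(label)
    return action(query) if action else query


query = CurationQuery(text="standing desks")
query = apply_button(query, "Filter by most recent")
query = apply_button(query, "Include global sources")
print(query)
```

Because every press produces an explicit, inspectable query, the same structure could also be shown back to the user as a record of how their intent has been interpreted.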

Let users tell a story to refine search results:

Sometimes, the best way for users to communicate what they need is through storytelling rather than direct queries or filters. Especially in complex scenarios, like navigating dense dashboards or searching for the perfect product, people feel overwhelmed by traditional search tools or uncertain about exactly which parameters to adjust. If you let them "tell their story", whether it's a specific pain point, a personal goal, or a type of product they're looking for, the AI can translate that narrative into structured search parameters. For example, someone explaining "I just moved into a smaller home and need versatile, budget-friendly storage solutions" could receive curated product sets, design hacks, and best-fit recommendations. By embracing storytelling, you not only reduce the cognitive burden of knowing what filters to apply but also foster more confidence and clarity in the final curated results.

Use memes and cultural references to personalise discovery:

Utilising memes can aid AI curation in two ways: they enrich how the agent understands the user, and they bring search results to life in a more human, relatable way. First, by selecting or referencing memes that illustrate their goals, mood, or style ("I'm feeling the 'Treat Yo Self' vibe today!"), users are effectively sharing their "theory of mind" with the AI. This helps the agent fine-tune curation by inferring context that might be lost in a standard GUI or text query. Second, the agent can "play back" results in thematic or pop-culture-inspired "starter packs", making search results like e-commerce recommendations feel more relevant and personalised. For instance, if a user is revamping their home office, the AI might create a "WFH Starter Pack" meme that bundles desk accessories, tech tools, and inspiration.

Create clarity for the user through next best actions:

Whether or not the results an AI agent finds are hitting the mark, users should be able to easily refine the parameters for exploration. For example, "Show me more like item 2" or "Exclude sources older than 2020". However, it can be hard for users (especially new users) to understand the next steps. Suggesting next best actions can simplify the process, offering guidance that steers the AI curator without overburdening the user.

2. Giving Feedback: AI as a thinking partner

What it is: A lot is written today about the delegation of typically human work to AI. We talk less about the remarkable ability of agents to act as real-time collaborators. Rather than just following instructions or producing content, an agent can actively contribute to tasks by asking thought-provoking questions, challenging assumptions, or offering alternative perspectives. This turns AI into a thinking partner that can help the user improve their own work in the moment.

Why it's useful: We all benefit from in-the-moment feedback; we just don't often get it. Our thinking benefits from both dissent and advocacy, helping us to quickly identify coherent patterns before we become too attached to our ideas. We can use AI agents effectively to challenge our thinking, generate ideas or suggest alternative interpretations of our data, none of which replaces human judgment. In fact, we've found that humans often respond extremely well to this type of agentic feedback. AI agents are surprisingly effective at generating counterarguments that expose gaps in reasoning or reveal alternative perspectives.

The challenge: For AI feedback to be effective, it must be intuitive and actionable – otherwise it risks becoming a distraction. Users don't welcome a meddling AI that interrupts with irrelevant suggestions, or one that nitpicks every minor detail. Striking the right balance is tough: the AI should interject at the right moments and with the right tone. If it's too passive, opportunities for improvement are lost; too aggressive, and users feel second-guessed by a machine. The challenge lies in how to surface the AI's feedback: should it appear as hints, as an optional review, or as a two-way dialogue? Getting the interaction right will determine whether users embrace the AI as a partner or simply see Clippy 2.0.

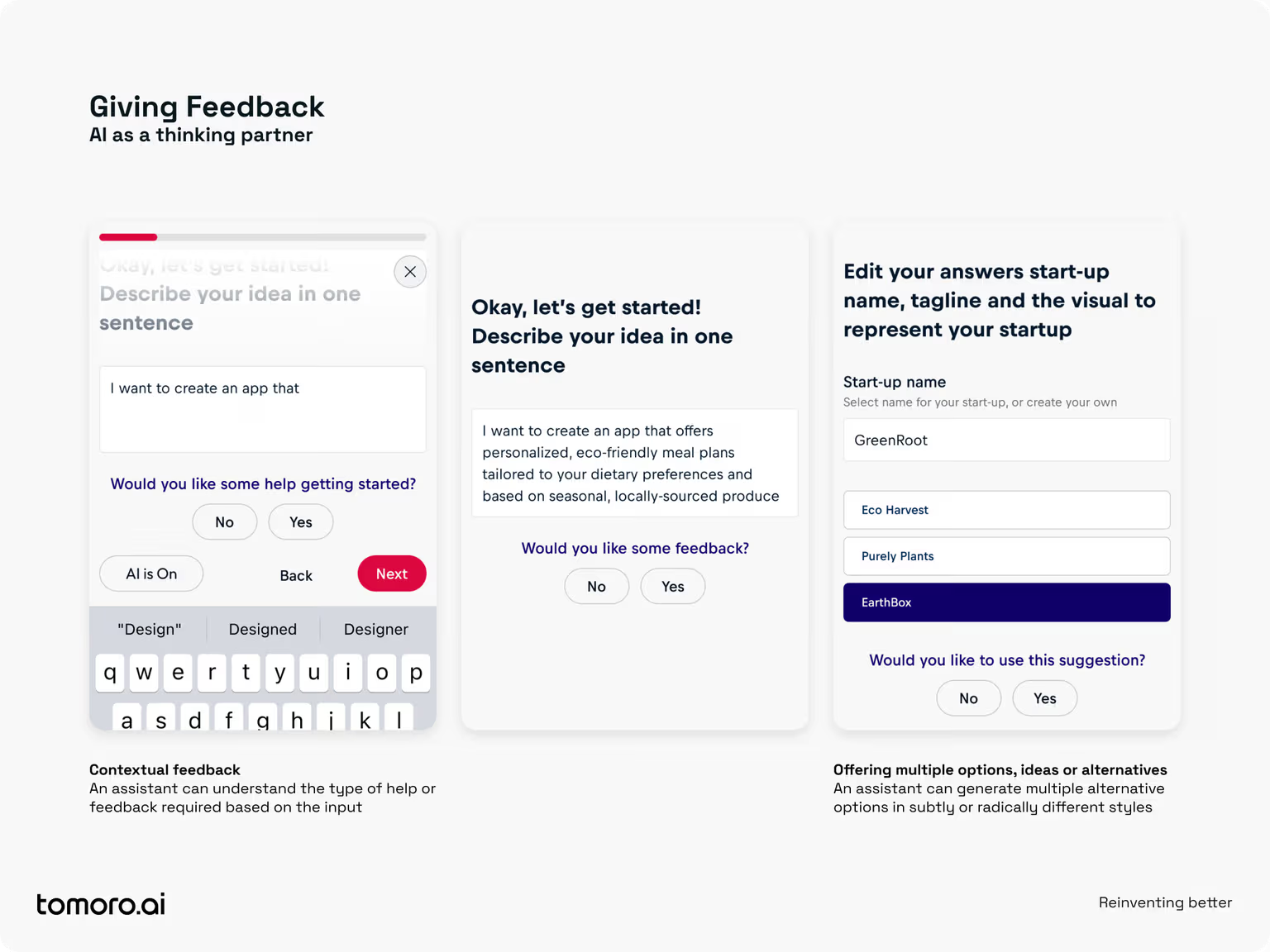

[Examples of ways AI can nudge, feedback and make suggestions]

Useful approaches to making AI feedback intuitive and actionable:

Ensure that feedback is proactive but not annoying:

Allow the AI to offer suggestions at natural breakpoints. For instance, in a document editor, the AI might wait until a sentence or paragraph is finished before offering a rewrite suggestion. We've experimented with the agent waiting a second or two once a text area is selected but blank before dropping in. Tone matters too; phrasing suggestions as "Here's an idea to consider…" is much more acceptable than blunt assertions.
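One way to picture the "natural breakpoint" rule is as a small check that runs whenever the editor reports a pause. The sketch below is illustrative only: the function names, the 1.5 second threshold and the punctuation-based definition of a finished sentence are all our assumptions, and a real editor would likely use richer signals.

```python
SENTENCE_ENDINGS = (".", "!", "?")


def should_offer_suggestion(text: str, idle_seconds: float, min_idle: float = 1.5) -> bool:
    """Decide whether this moment looks like a natural breakpoint."""
    if idle_seconds < min_idle:
        return False  # the user is still active, so stay quiet
    stripped = text.rstrip()
    if not stripped:
        return True  # a blank field plus a pause: a gentle prompt may help
    # Only step in once a sentence (or paragraph) looks finished.
    return stripped.endswith(SENTENCE_ENDINGS)


def phrase_suggestion(idea: str) -> str:
    """Wrap a suggestion in an invitational tone rather than a blunt assertion."""
    return f"Here's an idea to consider: {idea}"


print(should_offer_suggestion("", idle_seconds=2.0))
print(should_offer_suggestion("Still typing this sent", idle_seconds=5.0))
print(phrase_suggestion("open with the customer's problem"))
```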

Turn feedback into an exploratory dialogue:

If the AI's feedback is unclear or if the user wants to know why it suggested something, make it easy to ask for clarification (e.g., "Why do you suggest changing this?"). A simple way to make AI feedback feel more constructive is to give the AI a curious persona, much as a good therapist asks questions at the right time or a good facilitator asks thought-provoking questions. This turns feedback into a conversation, helping the user learn. It also lets the AI gather context – meaning both the user and the agent become better equipped to allow meaning to emerge from the exchange.

Offer multiple options, ideas or alternatives:

Instead of a single idea, solution or challenge, an agent can provide a range of perspectives. For example, an AI agent might generate three alternate layouts; a writing AI could suggest multiple versions of a tricky sentence for different audience types. Presenting options empowers the user to choose and reinforces that the human is ultimately in control.

Make sure that feedback is context-aware:

The agent should adapt its "thinking partner" role based on the user's current context. For example, if a user pauses at a question or text box without typing, the AI might gently nudge them by asking a thought-provoking question, rather than jumping in with a fully-formed answer. On the other hand, if a user is nearly done and just needs a rephrase, a concise suggestion they can accept or reject ensures the user maintains ownership. Crucially, the AI must know when to step back: prolonged back-and-forth can lead to a "doom loop" of endless edits, undermining the user's confidence. By staying attuned to context and user actions, the AI agent can tailor its feedback to be genuinely helpful without overstepping.

3. Situational Awareness: AI that understands here and now

What it is: Situational awareness refers to an agent's ability to perceive and interpret contextual information in real time, and adjust its behaviour accordingly. Rather than operating in the past, in a vacuum or based on predetermined inputs, AI agents can continually take in data about the environment, the user's state, and other real-world factors. This could mean an AI that adapts a user interface based on user need in the moment, or an agent that understands the current context of a market or industry in order to increase relevance.

Why it's useful: The real world is not static – conditions change from moment to moment. AI systems with situational awareness can be far more effective and helpful because they account for the here and now. In the context of AI agents, situational awareness is the key to both personalisation and usefulness. When an AI grasps context, interactions feel significantly more natural and relevant. The more backstory and real-time context an AI has, the more grounded and useful its outputs can be.

The challenge: Building AI with genuine situational awareness is easier said than done. There are obvious challenges around data access and integration – which can be technically complex and difficult to do.

When it comes to human-AI interactions, there's a fine line between helpful context-awareness and annoying overreach. We've found that users deeply appreciate an AI agent that "gets it" but feel annoyed or uncomfortable if the AI requires too much information from them or is perceived as too persistent. Maintaining situational models can be challenging – contexts can change rapidly, and human expertise and intuition can be invaluable. Ultimately, this means that AI needs a user to help it continuously maintain its "world model" in order to be useful.

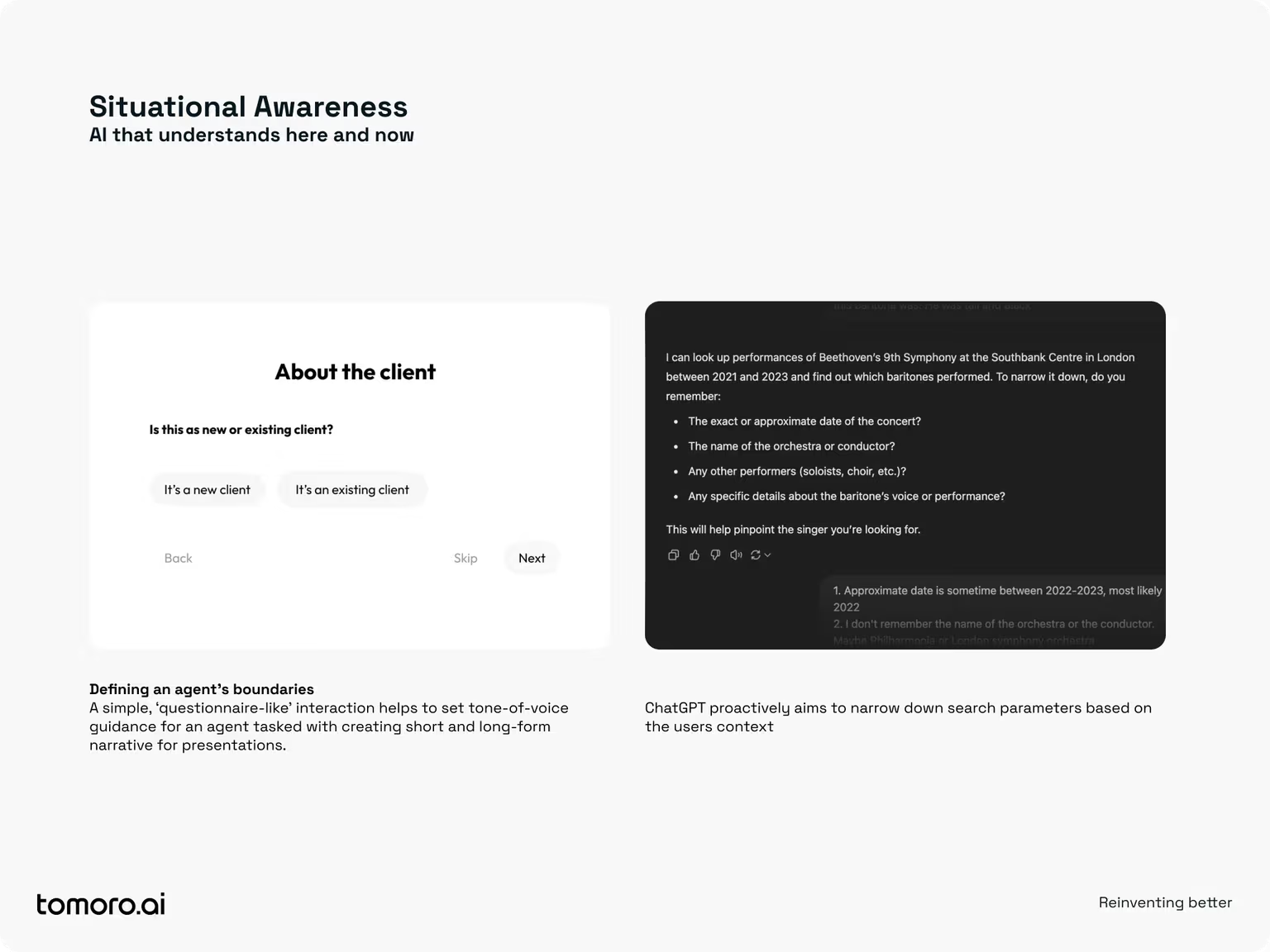

[Simple ways we can help users to collaborate with AI agents to develop situational awareness]

Useful approaches to helping AI adapt to a situation:

Show users what good context looks like:

The recent emergence of reasoning models (like OpenAI's o1) has changed the way people provide context to a Large Language Model. Regardless of the model, rich descriptions of context are vital to being situationally aware. However, users aren't always aware of what good context looks like, or what they need to provide. We can show them, through small acts of guidance (placeholder text, hints, or expandable tips) that provide sample context entries and briefly explain why they matter. Explicitly showing the user what "good context" looks like can remove the guesswork that otherwise stops people from specifying meaningful details.

Make it easy to define the agent's boundaries:

No matter how much context the AI has, the user's immediate needs are paramount, but often opaque. In the context of a situationally aware AI agent, we can enable the user and agent to collaboratively shape the topics, domains or tools an AI agent might explore or use. Deep Research (within ChatGPT) does this extremely well by asking the user relevant clarifying questions before it starts its research.

Offer a “visible context" for quick edits and additions:

Sometimes users need a snapshot of what the AI currently assumes (focus, goals, data sources). For example, a dedicated panel that displays the AI's current knowledge cutoff, its assumptions or the relevant data it's using can let users know what, when and how context is being used. We might show a small icon when location is actively being used for AI decisions, or display how many records are being analysed to form an insight. It's incredibly helpful when users can see at a glance what the AI "knows" and easily add, remove, or update context (topics, goals, constraints) with minimal friction.

Let the AI select UI features based on usefulness:

AI agents can selectively "turn on" or "turn off" certain capabilities based on situational cues. For example, if someone's drafting a white paper, the AI might automatically activate advanced citation features; if they are editing speaker notes, it may surface editing features. This can allow us to integrate broad functions and capabilities within experiences that feel streamlined and hyper-relevant.

4. Continuous Discovery: turning every interaction into an opportunity for user research

What it is: Experience design and product development often treat user research and discovery as a phase – something you do at the start of a project or at periodic intervals. Continuous discovery is the idea that understanding user behaviour, needs and preferences is an ongoing process, and AI can help turn every user interaction into an opportunity to learn. Each conversation, click, or piece of feedback can be analysed to uncover insights, even those that users don't explicitly articulate. In essence, the AI is always learning, helping to refine and adapt to the problem space in real time.

Why it's useful: It can be extremely difficult to capture feedback from experts, practitioners or general users about how effective, coherent or usable an AI output actually is. What's valuable is allowing agents to help us gather reactions, feedback and intuition in context, in a meaningful situation, rather than trying to do so removed from the experience of work.

The challenge: Capturing feedback can be difficult. We often capture aspects of a user interaction that set out what happened, or what the user liked or disliked. Getting to why, continuously over time, is more of a challenge. It can feel like we're asking a lot of people to commit their thought process to memory and provide feedback to shape the system's future capabilities. This is particularly important in tightly bounded AI systems, where a free-form, conversational exchange or iteration with an AI agent is neither desirable nor possible.

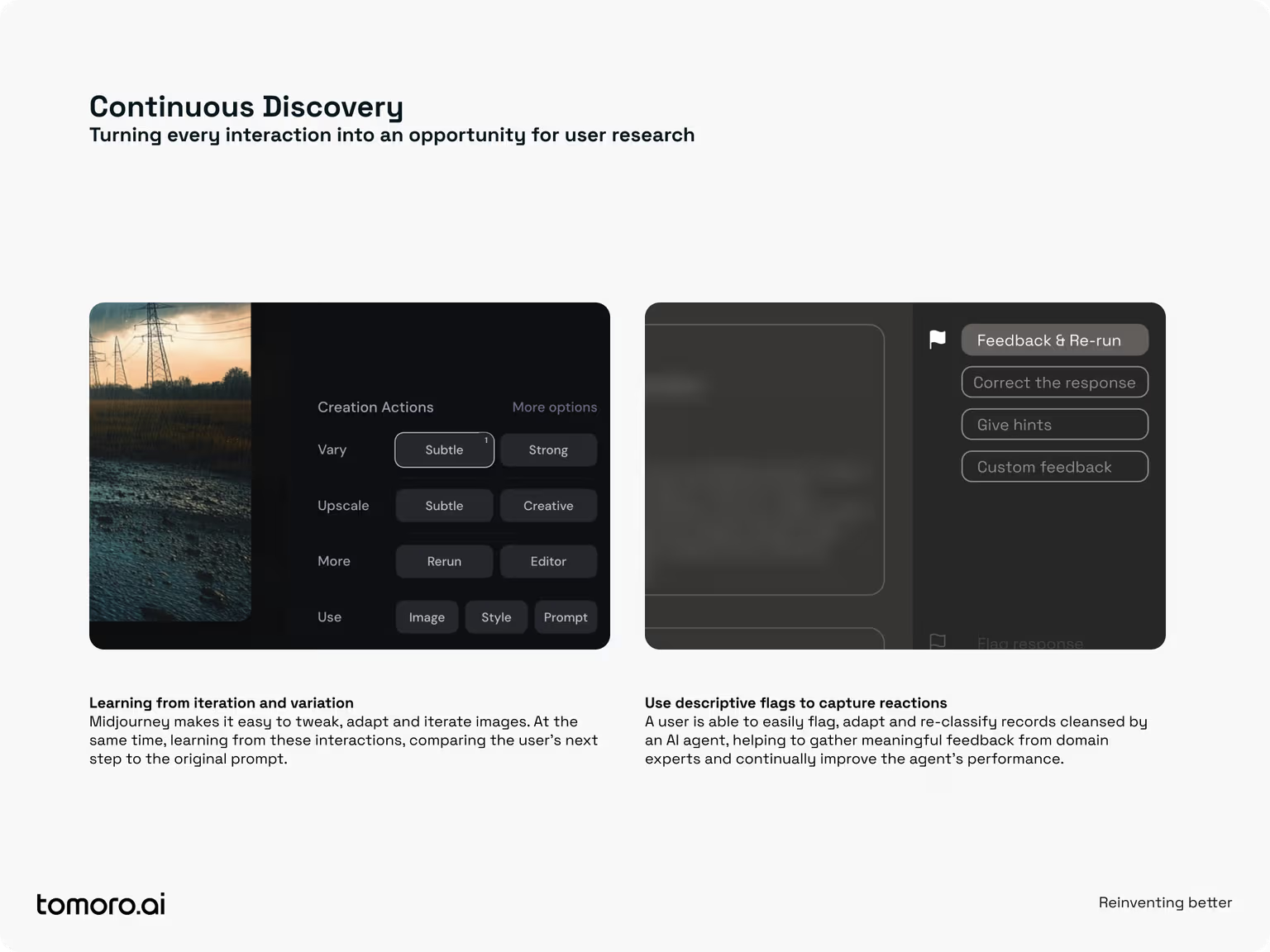

[AI systems can continuously learn from users' choices, iterations and conversations]

Useful approaches to embed continuous discovery with AI:

Use descriptive flags to capture reactions:

A simple "thumbs up/down" rarely conveys the reason behind a user's reaction. Instead, allowing people to "flag" the output with context (e.g., "helpful for budget planning" or "missing regulatory info") can help us analyse outputs more meaningfully. Flags can be generated based on the context of the interaction or simply allow the user to share a more meaningful perspective at a point in time. This adds a quick rationale that the AI can learn from, ensuring feedback isn't just a rating but a micro-story.
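To make the difference from a bare rating concrete, here is a minimal sketch of what a descriptive flag might look like as data, and how reasons could be tallied afterwards. The `Flag` record and `summarise_flags` helper are hypothetical names we've chosen for illustration, and the sample flags are made up.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Flag:
    """A reaction plus the reason behind it (hypothetical structure)."""
    output_id: str
    helpful: bool
    reason: str


def summarise_flags(flags):
    """Count reasons separately for helpful and unhelpful reactions."""
    summary = {"helpful": Counter(), "unhelpful": Counter()}
    for flag in flags:
        key = "helpful" if flag.helpful else "unhelpful"
        summary[key][flag.reason] += 1
    return summary


flags = [
    Flag("out-1", True, "helpful for budget planning"),
    Flag("out-2", False, "missing regulatory info"),
    Flag("out-3", False, "missing regulatory info"),
]
summary = summarise_flags(flags)
print(summary["unhelpful"].most_common(1))  # [('missing regulatory info', 2)]
```

Even this simple tally tells us why outputs are falling short, which a count of thumbs-down alone would not.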

Steer AI agents with ‘flashcards':

When the AI gets something wrong (or even partly right), give users a frictionless way to correct or "teach" it on the spot. Think of it like creating flashcards the agent can learn from: a prompt appears ("How would you phrase it?" or "What detail did we miss?"), and the user supplies the fix. Flashcards can be committed to the agent's memory, either short-term for the immediate context or long-term for repeated use.
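The short-term versus long-term distinction can be sketched as two lists that are treated differently when a session ends. Everything here is an assumption made for illustration: `Flashcard`, `AgentMemory` and the idea of rendering cards as plain-text instructions are not taken from any specific agent framework.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Flashcard:
    prompt: str       # the question shown to the user
    correction: str   # the fix the user supplied


@dataclass
class AgentMemory:
    short_term: List[Flashcard] = field(default_factory=list)  # this session only
    long_term: List[Flashcard] = field(default_factory=list)   # kept for reuse

    def teach(self, card: Flashcard, remember: bool = False) -> None:
        """Store a correction; `remember=True` keeps it beyond the session."""
        (self.long_term if remember else self.short_term).append(card)

    def end_session(self) -> None:
        """Forget short-term cards while keeping long-term ones."""
        self.short_term.clear()

    def as_instructions(self) -> str:
        """Render all active cards as text an agent could be given as guidance."""
        cards = self.long_term + self.short_term
        return "\n".join(f"- {c.prompt} -> {c.correction}" for c in cards)


memory = AgentMemory()
memory.teach(Flashcard("How would you phrase it?", "Use 'clients', not 'customers'"), remember=True)
memory.teach(Flashcard("What detail did we miss?", "The Q3 budget cap"))
memory.end_session()
print(memory.as_instructions())
```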

Learn from iteration and variation:

Sometimes the best feedback is just trying again. Providing clear and simple ways to regenerate outputs helps users to quickly cycle through fresh options or ideas, identifying those they prefer. This encourages both exploration and learning, helping us to connect an input to a desirable outcome via the different options a user generates. Done well, it simplifies iteration where experimentation is the most valuable tool for self-improvement.

Observe interactions:

AI agents can work together to learn across multiple interactions. For example, imagine an agent is tasked with creating a research summary. A parallel agent might be responsible for understanding each interaction and exchange to make inferences about the effectiveness of the experience. In this sense, agents can act as ethnographers, observing interactions and identifying differences between a person's intent and their perceptions of the quality of the output. These observations can be used as part of continuous adaptation and improvement of a system - continually surfacing learning or suggesting and implementing improvements.

5. Amplification: invisible augmentation of user input for better outcomes

What it is: Amplification refers to the adaptation of a user's input, prompt or instruction to produce a better result – all while preserving the user's original intent. In other words, the AI does extra behind-the-scenes thinking beyond the user's explicit ask. For example, 'shadow prompting' involves the AI agent reformulating or enriching a user's query invisibly to get a more useful answer. The user provides a spark and the agent takes that (often) minimal input and adapts it where it believes a better outcome can be achieved.

Why it's useful: Users often (quite reasonably) give minimal or high-level instructions, but the optimal answer might require a more involved set of steps. AI agents can bridge that gap without requiring the user to spell everything out. For instance, if you ask an AI, "brainstorm marketing ideas for my new coffee product", a straightforward single-thread answer might give you a fairly uninspiring list. An amplified approach could have AI agents run multiple, increasingly ambitious brainstorms, ultimately combining the best ideas. The end result is richer and more diverse than a single-pass response.

The challenge: The flip side of doing things behind the scenes is the risk of losing the user's sense of ownership or transparency (which is why the next pattern relating to transparency is so important). If an AI modifies a user's request without telling them, does it risk doing something the user feels disconnected from or didn't want? There's a trade-off between helpful amplification and unwanted manipulation. If amplification is implemented poorly, it could actually distort the user's intent. From a design perspective, figuring out how to let users guide the amplification (if they want to) is tricky.

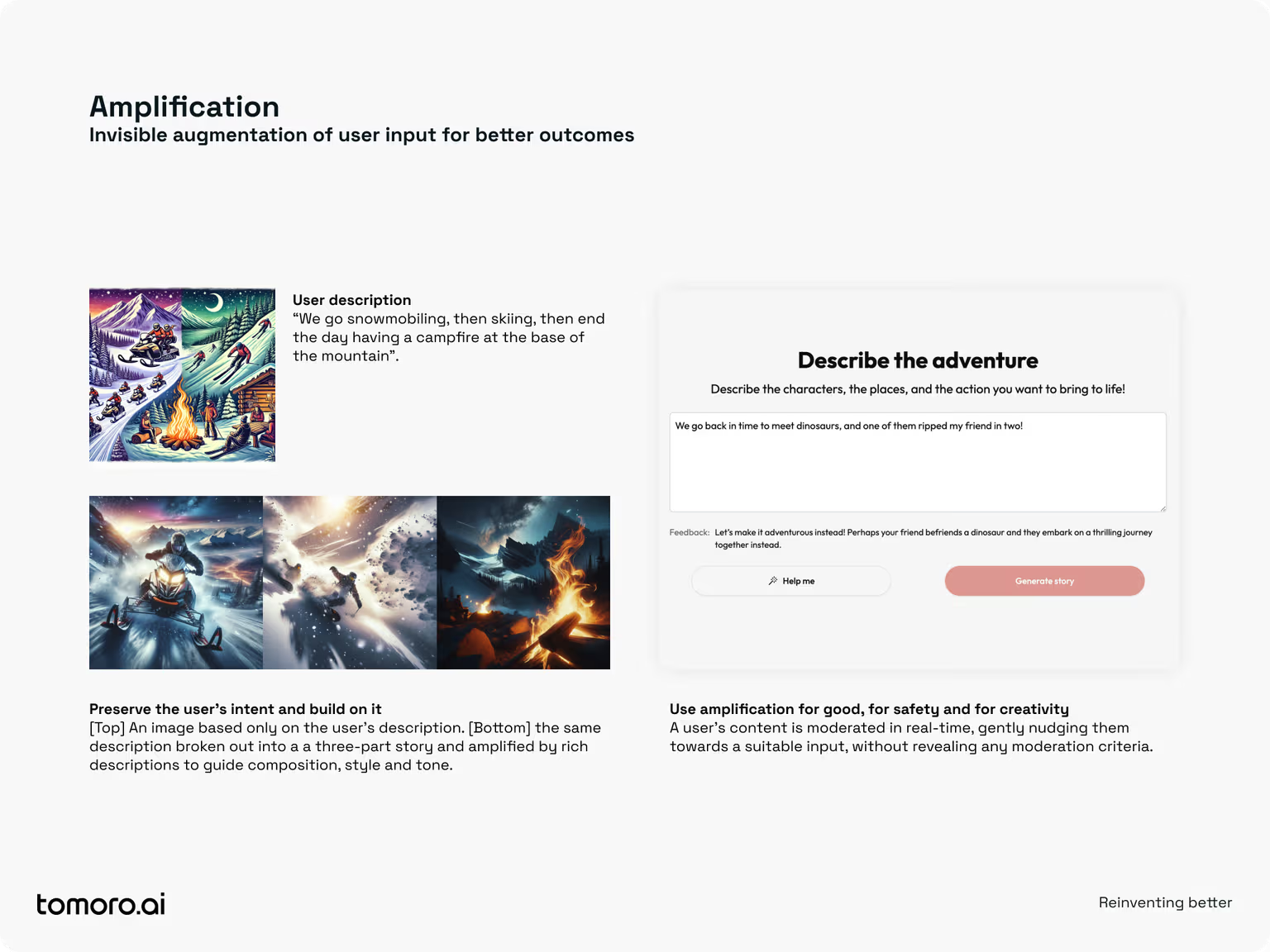

[Utilising (sometimes) invisible assistance to drive better outcomes]

Useful approaches to amplify user inputs:

Preserve the user's intent and build on it:

Any amplification should be designed to enhance the stated goals of the user, not add new ones. It's good practice to check: after the AI has expanded a prompt or run invisible subtasks, have it (or a separate validator) compare the final output against the original request. If something in the output wasn't asked for (and might not obviously feel like a beneficial addition), this should be communicated to the user.
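A very simple version of that validation step might look like the sketch below. It assumes, purely for illustration, that an earlier step has already labelled the request and each output section with a topic; in practice that labelling would probably be done by a model rather than by exact string matching. The function names are hypothetical.

```python
def find_unrequested(requested_topics, output_sections):
    """Return titles of output sections whose topic was not part of the request."""
    requested = {topic.lower() for topic in requested_topics}
    return [s["title"] for s in output_sections if s["topic"].lower() not in requested]


def disclosure_note(additions):
    """Build a short message telling the user what was added, if anything."""
    if not additions:
        return ""
    return "I also added: " + ", ".join(additions) + ". Keep or remove as you see fit."


sections = [
    {"title": "Launch campaign ideas", "topic": "marketing"},
    {"title": "Suggested price points", "topic": "pricing"},
]
additions = find_unrequested(["marketing"], sections)
print(disclosure_note(additions))
```

The point of the sketch is the shape of the check, not the matching logic: additions are surfaced to the user instead of being silently included or silently dropped.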

Use amplification for good, for safety and for creativity:

One justified worry with approaches like shadow prompting is that they could be used to over-censor or shape user requests covertly. To maintain credibility, use such techniques primarily to enhance clarity, safety, or completeness, not to negate the user's intent. If something must be adjusted for safety (e.g., rephrasing a query to avoid harmful content), be upfront about it or provide the user with immediate feedback. This is a pattern we apply often in the context of content moderation. Users see the output of moderation, but they don't see the moderation logic or criteria itself.

Borrow tried and tested facilitation techniques:

We've found AI agents to be effective in using facilitation and collaboration techniques (such as Liberating Structures or other workshop methods) to shape the AI's interaction with the user. These techniques provide lightly structured frameworks that maximise creative and analytical potential in human groups – and an AI can apply them in principle to amplify a single user's input. The idea is for the AI to internalise patterns used by skilled facilitators (brainstorming methods, idea filtering exercises, etc.) and deploy them invisibly during the conversation. By doing so, the AI introduces "tiny shifts" in how the interaction unfolds that can unleash a much greater diversity of ideas and insights.

Utilise parallel threads to help surface useful, usable content:

Instead of a single linear exchange, the AI can spawn parallel "threads" that explore different interpretations, ideas, or solutions at the same time. This way, a complex or open-ended query can be approached from several angles simultaneously, much like having a panel of experts brainstorming in parallel, without losing any potentially valuable insights. The user's messy exploration is amplified by the AI's ability to pursue diverse thought paths in tandem, while a coordinating process in the background eventually weaves these threads into a coherent, actionable response.
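One way to picture parallel threads with a background coordinator is the sketch below, using Python's `asyncio`. The `explore` function is a hypothetical stand-in for a real model call, and the "weaving" step is deliberately trivial (it just joins the results); in practice that step would likely be another model call that synthesises the threads.

```python
import asyncio


async def explore(query: str, angle: str) -> str:
    """Hypothetical placeholder for a model call exploring one angle of the query."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"[{angle}] perspective on: {query}"


async def amplify(query: str, angles: list[str]) -> str:
    """Run one thread per angle concurrently, then weave the results together."""
    # gather() returns results in the same order as the angles were given
    results = await asyncio.gather(*(explore(query, a) for a in angles))
    return "\n".join(results)


def run_threads(query: str, angles: list[str]) -> str:
    """Synchronous entry point for callers that are not already async."""
    return asyncio.run(amplify(query, angles))
```

For example, `run_threads("reduce churn", ["pricing", "onboarding"])` would explore both angles at once and return a single combined response.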

Use amplification to educate users (subtly):

Over time, well-implemented amplification can actually educate users on how to better interact with the AI. For example, if the AI often says "I've considered X and Y in my answer", users learn that those factors matter, and might include them next time themselves. In essence, AI agents can model good inquiry techniques. When communicated effectively, this turns amplification into a two-way improvement: better output now, better user inputs later.

6. Showing the Working Out: transparent AI Reasoning and thought process

What it is: This pattern is all about making AI agents' thought processes visible. We design interactions to "show working" in a way that is meaningful and conducive to effective human decision making. This often means providing explanations for why an AI model or agent gave a certain answer, or providing citations backing its response. Chain-of-thought reasoning popularised asking a model to show the intermediate steps it took to arrive at an outcome. We're finding that "showing the working out" can be most effective when it's incremental and explorable, meaning users can dial the level of detail up or down as they see fit.

Why it's useful: Trust is clearly a cornerstone of widespread AI adoption, particularly in an enterprise setting. If people can't understand or verify what an AI is doing, they'll be hesitant to use it for anything important. Transparency also aids repeatability and consistency. When "working out" is visible and explorable, it's easier to diagnose issues, provide timely course correction or produce repeatable approaches that can be replayed over time. Transparent agents help users make better decisions.

The challenge: Explaining AI decisions is hard, and presenting that explanation well is a challenge in its own right. For example, too much transparency can overwhelm users. If every answer comes with a dump of probabilities or a long chain-of-thought narrative, people can tune out. We also need to remember that transparency alone doesn't guarantee the truth. So we need to tackle a number of challenges: the quality of reasoning, the clarity of its presentation, and allowing reasoning, citations and sources to become part of a dialogue.

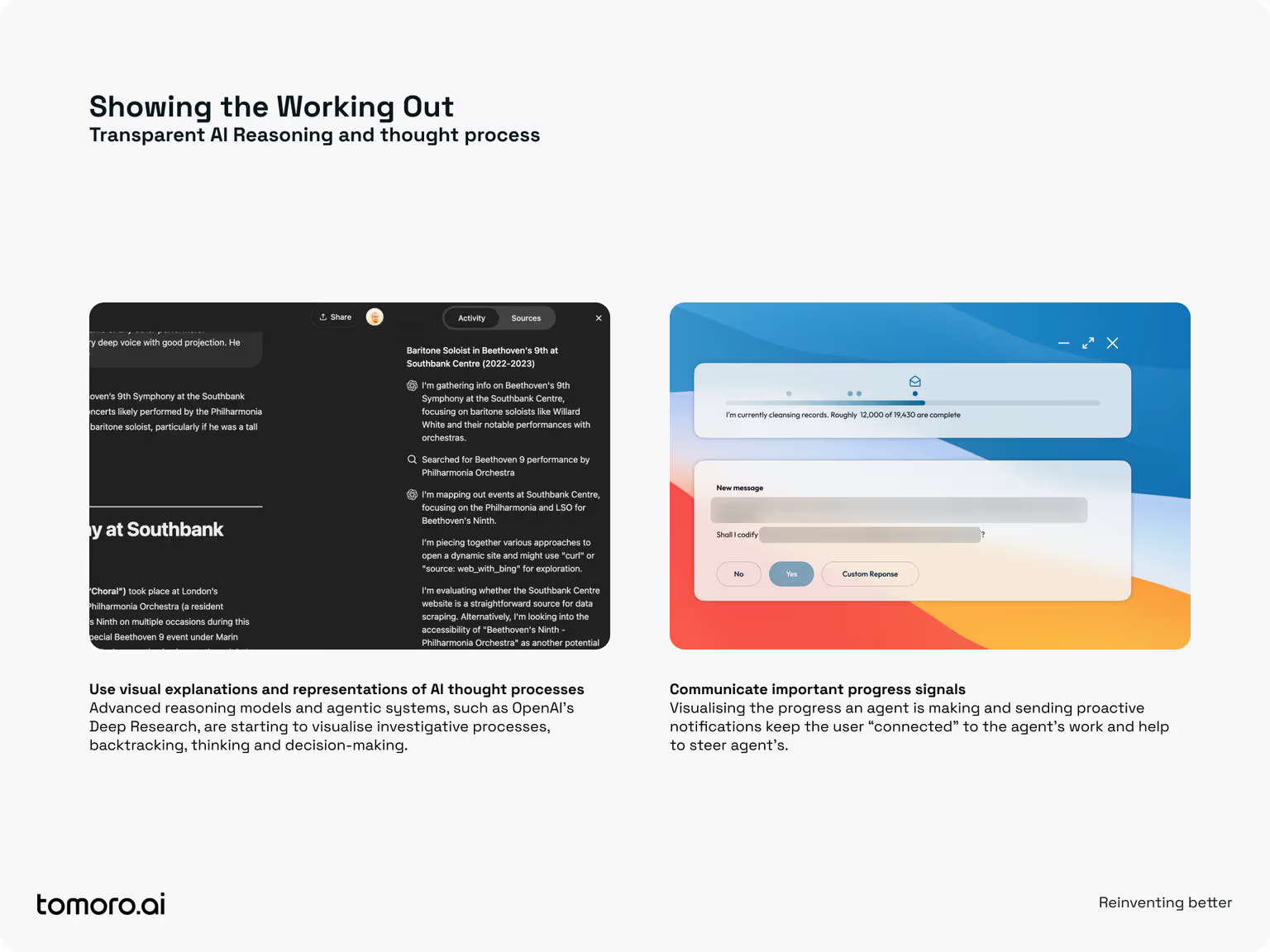

[Finding ways to surface AI agents' thought processes, decisions and progress to users is vital in the context of trust and confidence]

Useful approaches to design for transparent, trustworthy AI outputs:

Allow agents to think expansively, then compress the results:

AI should reason expansively but deliver responses in digestible, structured formats. Instead of simply truncating outputs, use hierarchical compression, where high-level insights are presented first, with expandable layers of supporting detail. Techniques like progressive disclosure or providing hyperlinks in summaries can help users access depth when needed without cognitive overload. A well-designed user interaction can house complex insights behind simple ways to explore more content, ensuring clarity without sacrificing depth.

Provide explorable citations and references:

AI-generated outputs should not just include citations but offer users an intuitive way to explore the source material directly. Instead of static references, design AI interactions that allow users to inspect the raw data behind a claim. Beyond web links, this could mean easy access to extracts from internal reports, surfacing key excerpts from qualitative research, or dynamically generating a drill-down view into the quantitative data. In complex RAG-based systems, for example, users should be able to navigate from AI summaries to specific supporting evidence, filtering or expanding details as needed. The goal is often not just verification, but enabling deeper inquiry as a route to trust.
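As a rough illustration of what "explorable" could mean at the data level, the Python sketch below attaches evidence excerpts to each claim so the interface can expand from a summary to its sources. The `Claim` and `Evidence` types and the `render_claim` function are hypothetical names of our own; a real RAG pipeline would populate them from its retrieval step.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    """A pointer back to source material: where it came from and the relevant excerpt."""
    source: str
    excerpt: str


@dataclass
class Claim:
    """A statement in the AI's summary, linked to the evidence behind it."""
    text: str
    evidence: list[Evidence] = field(default_factory=list)


def render_claim(claim: Claim, expanded: bool = False) -> str:
    """Show the claim with a source count; when expanded, list each excerpt beneath it."""
    lines = [f"{claim.text} [{len(claim.evidence)} source(s)]"]
    if expanded:
        lines += [f'  - {e.source}: "{e.excerpt}"' for e in claim.evidence]
    return "\n".join(lines)
```

Keeping the excerpt alongside the source name means the user can judge the evidence in place, without leaving the conversation to verify it.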

Communicate important progress signals:

As AI agents take on increasingly complex work, that work will involve changing direction, backtracking, and combining multiple threads of investigation (as described above). Users are more comfortable with latency as long as they know what's going on. We've found that it's important for users to be able to check in, or be updated, on progress. We can help users to understand what the agent has done, what it's doing and where it will go next. This extends to proactively communicating with the user (or with other AI agents) when it's stuck, or reaches a decision that requires multiple perspectives.
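A minimal sketch of the "done, doing, next" signal might look like the following Python class. `AgentProgress` and its methods are hypothetical names for illustration; the assumption is that the agent's orchestration layer calls `start` and `block` as it works, and the interface calls `status` whenever the user checks in.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentProgress:
    """Tracks what an agent has done, is doing, and plans to do next."""
    done: list[str] = field(default_factory=list)
    current: Optional[str] = None
    upcoming: list[str] = field(default_factory=list)
    blocked_reason: Optional[str] = None

    def start(self, step: str) -> None:
        """Move the current step to 'done' and begin a new one."""
        if self.current:
            self.done.append(self.current)
        if step in self.upcoming:
            self.upcoming.remove(step)
        self.current = step
        self.blocked_reason = None

    def block(self, reason: str) -> None:
        """Record that the agent is stuck and needs input."""
        self.blocked_reason = reason

    def status(self) -> str:
        """Human-readable summary for a user (or another agent) checking in."""
        lines = [
            "Done: " + (", ".join(self.done) or "nothing yet"),
            "Now: " + (self.current or "idle"),
            "Next: " + (", ".join(self.upcoming) or "nothing planned"),
        ]
        if self.blocked_reason:
            lines.append("Needs input: " + self.blocked_reason)
        return "\n".join(lines)
```

The blocked state is kept separate from the step list so that the agent can raise it proactively, rather than waiting for the user to ask.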

Enable step-by-step reveal of AI outputs:

Rather than overwhelm users with the entire response, we can reveal an output in stages, for example through a slider or toggle between an overview and a detailed view. An AI advising on investments could show a one-line recommendation, but if you toggle details, it expands into a multi-step rationale: "Step 1: Assessed risk profile (you are moderate risk). Step 2: Checked current portfolio balance (overweight in tech). Step 3: Identified diversification opportunities (suggest bond fund X). Step 4: Formulated recommendation." Users who care can inspect each step; those who don't can ignore it. This elasticity supports both individual preferences and the emergence of trust over time.
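The investment example above can be sketched as a small Python structure with a detail "dial". The `Recommendation` type and `reveal` function are hypothetical; the point is only that the summary and its rationale are stored together, and the interface decides how much to show.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """A one-line recommendation plus the ordered steps that led to it."""
    summary: str
    steps: list[str]


def reveal(rec: Recommendation, level: int = 0) -> str:
    """Return the summary plus the first `level` steps of the rationale.

    level 0 shows the summary only; a level at or above the number of
    steps shows the full rationale.
    """
    shown = rec.steps[: max(level, 0)]
    lines = [rec.summary] + [f"Step {i}: {s}" for i, s in enumerate(shown, start=1)]
    return "\n".join(lines)
```

A slider in the interface could map directly onto `level`, while a simple toggle would switch between `0` and `len(rec.steps)`.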

Use visual explanations and representations of AI thought processes:

Explanations should be as consumable as the primary output. For example, if reasoning involves numbers or relationships, an agent might produce a simple chart or diagram. But this principle should extend to an agent's processes. If an AI agent can map queries, processes, reasoning and references, we can help users to course correct (adjust parts of that process), communicate the process to others or replay those steps again in the future. By seeing the "reasoning" in an intuitive format, users can grasp it, and use it, more quickly.