Building safe chat agents at a scale of 100,000s of messages per day for high-risk businesses

READER NOTE/INTENTION OF ARTICLE

This is a technical-lite blog post that explains the risks of GenAI applications, while demonstrating that Tomoro has the expertise to build them safely for your company.

TL;DR

- Public-facing Large Language Model (LLM) applications can expose brands to reputational and financial risks.

- At Tomoro.ai, we use layered classification filters to reduce harm from LLM jailbreaks and other unintended LLM behaviour.

- We've applied this in a high-volume production application that handles 40,000 (and rising) messages a day.

Introduction

Over the last year, we've partnered with a leading game developer to deploy a GenAI chatbot that fields roughly 40,000 messages a day from a player base that includes children. Balancing speed, accuracy, safety, and fun is essential:

When creating a chatbot to represent your brand, you don't just want it to be safe - it needs to sound authentically you.

In the case of a game company, that means marrying safety with fun and user excitement - especially for younger audiences.

The LLMs that underpin modern GenAI applications are very flexible (which is why they generalise so well to many problems) but also under-constrained. This means that they can often go 'off-piste' and return a wide variety of text, some of which might not be appropriate.

Exposing an LLM directly to the public will certainly result in unexpected behaviour and, without proper mitigations, runs the risk of:

- 'Jailbreaks', whereby users intentionally manipulate the chatbot into producing harmful or disallowed content (fun tidbit: anyone can visit this website to explore LLM jailbreaking with a fun game…); or

- Unintentionally providing distasteful, offensive or dangerous content. In many cases, this can pose a risk to a user's well-being or lead to financial or brand damage for the app provider.

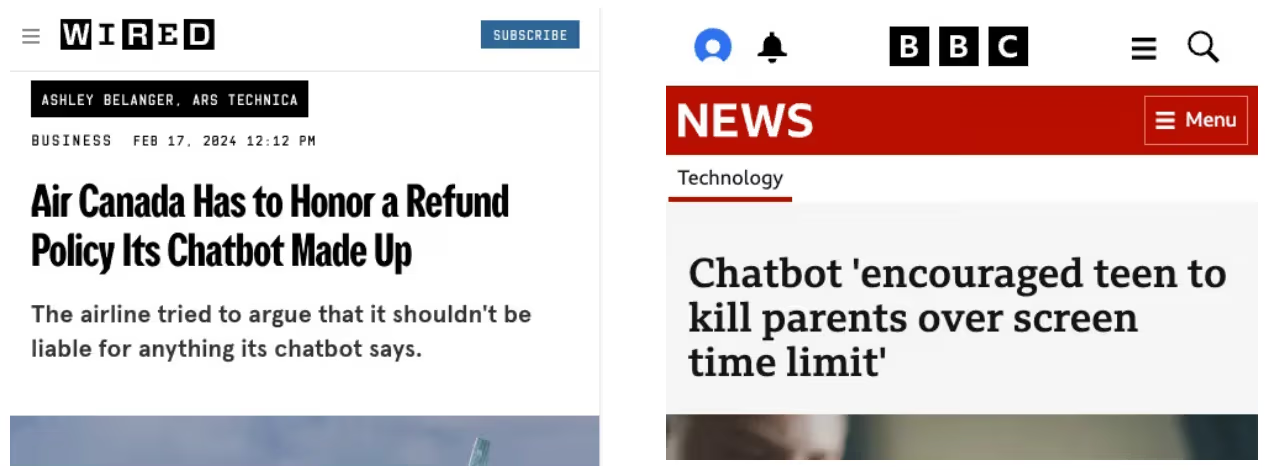

A glimpse at the real-world risks…

News headlines from unintended LLM use

These incidents underscore why building and maintaining safety in GenAI systems isn't optional. This is especially true when young children are involved: you can't simply rely on disclaimers, and robust guardrails are essential to keep content appropriate.

Our Approach: Layered Classification and Prompt Caching

Much like the RAG (Retrieval-Augmented Generation) systems we've built for clients, we leverage multiple AI components to reduce the risks of jailbreaking while keeping performance high.

A simplified architecture diagram of our system

To ensure system safety, we use LLM classifiers on both inputs and outputs:

- Input Classification (running concurrently)
  - We used Pydantic schemas alongside LLM structured outputs to label user queries (e.g., SAFE, SUSPICIOUS, CHILD_HARM_RISK, VIOLENT, etc.). This also included flags to identify jailbreaking or disallowed behaviour.
  - Multiple classifiers for individual topics resulted in significantly better performance than one mega 'catch-all' classifier.
  - Extensive few-shot examples were critical to ensure that the classifiers would distinguish between “I keep being killed by this character” (referring to the game) and “I am being hurt by my parent” (an indication of child harm).
  - Close collaboration with the client and their specialist trust and safety teams ensured that our classifiers reflected how they would judge high-risk messages in real life.

- Response Generation
  - The main LLM generates a response if the input is deemed safe.
  - Otherwise, the message can be ignored (for jailbreaks) or escalated to a human (if the query flags a safety issue).

- Output Classification
  - Before a response leaves our application, we classify the model's own output.
  - Because some responses draw on internet-scraped data via RAG techniques, this step also helps protect us against data poisoning.
  - If the response contains disallowed content, the system intervenes and replaces it with a general message. In practice, we have rarely seen this happen; the step is more of a conservative overlay to ensure the agent's behaviour remains appropriate.
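The three layers above can be sketched roughly as follows. This is a minimal illustration, not the production system: the classifier functions are hypothetical keyword stubs standing in for LLM calls, "running concurrently" is read here as the per-topic classifiers running alongside each other, and reusing the same classifiers on the output is an assumption. A stdlib dataclass replaces the Pydantic schema so the sketch runs without extra dependencies.

```python
import asyncio
import json
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Label(str, Enum):
    SAFE = "SAFE"
    SUSPICIOUS = "SUSPICIOUS"
    CHILD_HARM_RISK = "CHILD_HARM_RISK"
    VIOLENT = "VIOLENT"


@dataclass(frozen=True)
class Verdict:
    """Shape every classifier must return (a Pydantic model in the article)."""
    label: Label
    is_jailbreak: bool = False


def parse_verdict(raw_json: str) -> Verdict:
    # Label(...) raises ValueError for any label outside the schema.
    data = json.loads(raw_json)
    return Verdict(Label(data["label"]), bool(data.get("is_jailbreak", False)))


# Hypothetical stubs: each would really be an LLM call returning structured JSON.
async def jailbreak_classifier(text: str) -> Verdict:
    hit = "ignore previous instructions" in text.lower()
    raw = json.dumps({"label": "SUSPICIOUS" if hit else "SAFE", "is_jailbreak": hit})
    return parse_verdict(raw)


async def child_harm_classifier(text: str) -> Verdict:
    hit = "hurt by my parent" in text.lower()
    return parse_verdict(json.dumps({"label": "CHILD_HARM_RISK" if hit else "SAFE"}))


CLASSIFIERS = [jailbreak_classifier, child_harm_classifier]
FALLBACK = "Sorry, I can't help with that one. Shall we talk about the game?"


async def classify(text: str) -> List[Verdict]:
    # One narrow classifier per topic, all awaited concurrently.
    return list(await asyncio.gather(*(c(text) for c in CLASSIFIERS)))


async def generate_reply(text: str) -> str:
    # Placeholder for the main LLM.
    return f"Here is a tip about: {text}"


async def handle_message(text: str) -> Tuple[str, Optional[str]]:
    """Return (action, reply), where action is 'ignore', 'escalate' or 'reply'."""
    verdicts = await classify(text)
    if any(v.is_jailbreak for v in verdicts):
        return ("ignore", None)
    if any(v.label is not Label.SAFE for v in verdicts):
        return ("escalate", None)
    reply = await generate_reply(text)
    # Output classification: swap in a general message if the reply is flagged.
    checks = await classify(reply)
    if any(v.is_jailbreak or v.label is not Label.SAFE for v in checks):
        return ("reply", FALLBACK)
    return ("reply", reply)
```

Calling `asyncio.run(handle_message("I am being hurt by my parent"))` takes the escalation branch, while a gameplay question passes both checks and is answered by the placeholder generator.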

To maintain speed and affordability:

- We store commonly used prompts and partial dialogues, along with a curated set of few-shot examples.

- This caching allows us to apply the LLM classifiers quickly and cheaply, without having to rebuild context every time.
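The caching mechanism isn't spelled out here, so the following is one possible reading rather than the actual implementation: the unchanging part of each classifier prompt (instructions plus few-shot examples) is built once per topic and reused, with only the player's message appended at the end. Keeping that prefix stable is also what provider-side prompt caching, where available, generally relies on. The names `SYSTEM_PROMPT`, `FEW_SHOT`, `static_prefix` and `build_messages` are hypothetical.

```python
from functools import lru_cache
from typing import Dict, List, Tuple

# Hypothetical prompt text; the two examples are the pair quoted earlier.
SYSTEM_PROMPT = "Classify the player's message. Reply with one label only."
FEW_SHOT: Tuple[Tuple[str, str], ...] = (
    ("I keep being killed by this character", "SAFE"),
    ("I am being hurt by my parent", "CHILD_HARM_RISK"),
)


@lru_cache(maxsize=None)
def static_prefix(topic: str) -> Tuple[Tuple[str, str], ...]:
    """Build the unchanging part of a classifier prompt once per topic."""
    messages = [("system", f"{SYSTEM_PROMPT} Topic: {topic}.")]
    for user_text, label in FEW_SHOT:
        messages.append(("user", user_text))
        messages.append(("assistant", label))
    return tuple(messages)


def build_messages(topic: str, user_message: str) -> List[Dict[str, str]]:
    # Stable prefix first, variable user message last.
    prefix = [{"role": r, "content": c} for r, c in static_prefix(topic)]
    return prefix + [{"role": "user", "content": user_message}]
```

Two calls for the same topic share an identical prefix and differ only in the final message, so the context is assembled once rather than on every request.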

Looking to the future: Constitutional Classifiers

A recent study by Anthropic described Constitutional Classifiers, a system that relies on additional classifiers, trained via “constitutional” rules, to detect malicious or unsafe requests. They reported:

- High robustness to thousands of hours of human red-teaming.

- Minimal over-refusal rates and moderate compute overhead.

We see a future where these constitutional approaches can complement or even replace traditional classification layers, offering a more comprehensive defence against evolving jailbreaking tactics.

Summary: Our Lessons Learned

- Parallel, Lightweight Filters

Classification layers prevent malicious queries from ever reaching the generative core, and ensure poor outputs are never delivered.

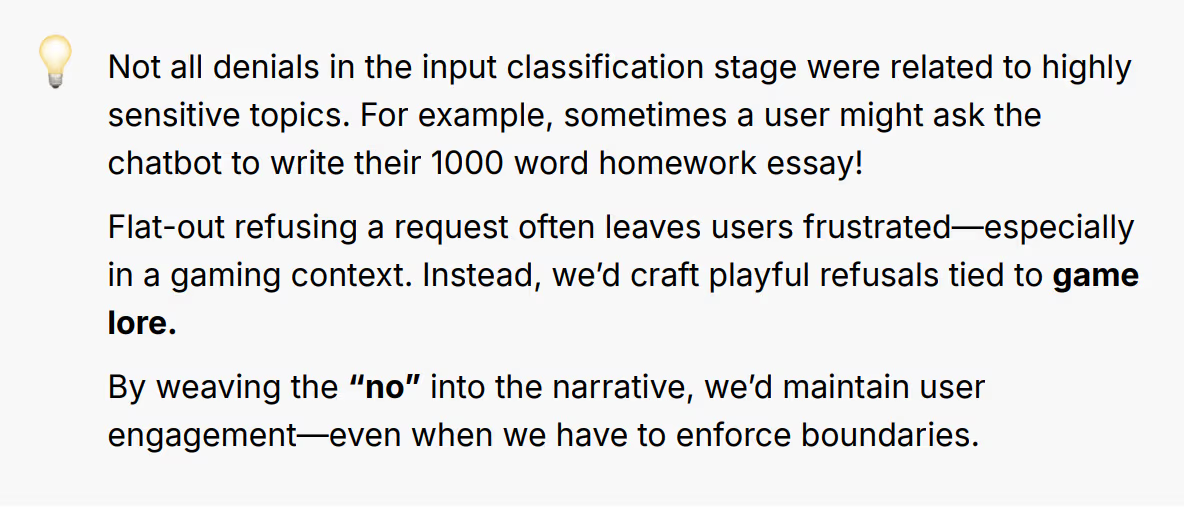

- User Experience Matters

By focusing on fun, lore-driven warnings and playful refusals, users are more inclined to accept the constraints of the system.

- Don't Cut Corners on Testing and Monitoring

Regular red-teaming, plus frequent monitoring of player behaviour, helps spot vulnerabilities before they become known to the wider player base. These can then be fed back into the few-shot classifier prompts to prevent them being exploited in the future (presently, this is a manual process, but it could be automated).
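As a rough idea of what automating that feedback step might look like, the sketch below keeps reviewed examples in a small store that de-duplicates near-identical attempts and renders them into prompt text. `FewShotBank`, its methods, and the label name used in the usage note are hypothetical and not taken from the production system.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class FewShotBank:
    """Labelled examples that get rendered into a classifier prompt."""
    examples: Dict[str, str] = field(default_factory=dict)

    @staticmethod
    def _key(text: str) -> str:
        # Normalise case and whitespace so near-identical attempts dedupe.
        return " ".join(text.lower().split())

    def add(self, text: str, label: str) -> bool:
        """Add a reviewed example; return False if it is already present."""
        key = self._key(text)
        if key in self.examples:
            return False
        self.examples[key] = label
        return True

    def render(self) -> str:
        # Plain-text block to splice into the classifier's few-shot section.
        return "\n".join(
            f"Message: {text}\nLabel: {label}"
            for text, label in self.examples.items()
        )
```

A reviewer (or a monitoring job) would call something like `bank.add(message, "JAILBREAK")` once a new attempt has been confirmed, and the classifier prompt would be rebuilt from `bank.render()`.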

Building Safe & Engaging GenAI Systems for Tomorrow

Whether you're a gaming company, a bank or even a government department, if you are going to implement a customer service chatbot at scale, you need to aim for BOTH user design and trustworthiness.

At Tomoro we build customer-facing solutions for organisations and brands where:

- Safety is paramount - chatbots that offer users value but strictly safeguard them from harmful content

- Reputation is protected - we design multiple layers of protection that keep brand reputation intact, even if users try to push the system beyond its limits

- Regulation constrains your options - we stay on top of the latest compliance guidelines so you can scale solutions without worrying about your application being jailbroken

If you'd like to learn more, please get in touch.