What's the fuss?

Since Anthropic released the Model Context Protocol in November 2024, it's become one of the most discussed topics in AI development circles, with industry publications describing it as "suddenly on everyone's lips" and "the new HTTP for AI agents."

The industry response has been remarkable: OpenAI is adding MCP support across its products, Microsoft has integrated it into Copilot Studio, AWS is supporting it in Amazon Bedrock, and both PydanticAI and Databricks have announced full implementations.

This unprecedented collaboration between competitors signals something important: MCP isn't just another technical specification; it solves fundamental problems that benefit the entire AI ecosystem.

What is the problem?

In 2025, it's widely recognised that large language models (LLMs) serve as genuinely powerful tools. However, they become far more powerful when tightly connected to relevant information and context.

Without this connection, LLMs can behave unpredictably. Consider this analogy:

Imagine sitting a maths exam with an open-book policy.

Relying solely on memory puts you at a disadvantage. You might miss critical details or mix up similar concepts.

Questions you could easily answer by consulting your textbook become stumbling blocks.

This is hallucination in LLMs – making confident assertions without checking available facts.

In the same exam scenario, refusing to use a calculator creates another handicap.

You might solve simple problems correctly, but as calculations grow more complex, accuracy drops dramatically.

Tasks that would be trivial with proper tools become unnecessarily difficult.

This is the lack of proper tooling in AI systems.

During a lengthy, multi-section exam, even with your textbook and calculator, you face another challenge.

As reference materials pile up on your desk, finding the exact formula or concept becomes increasingly difficult. You easily recall what's in your most recently reviewed notes and what you studied first, but struggle to locate critical information buried in the middle of your materials.

This isn't about lacking information or tools; it's about efficiently accessing what you need precisely when you need it.

This is the context management problem in LLMs – having access to information isn't enough if you can't effectively retrieve the relevant parts at the right time.

Anthropic's Model Context Protocol (MCP) elegantly addresses all three limitations by creating a standardised way for LLMs to access information and tools, and to manage context when needed.

So what is MCP?

At its core, MCP (Model Context Protocol) is a standardised language and framework that connects LLMs to the information they need when they need it.

Developed by Anthropic and released as an open-source project in November 2024, MCP provides "a simpler, more reliable way to give AI systems access to the data they need" while enabling persistent context management beyond the limitations of traditional context windows.

How does it work?

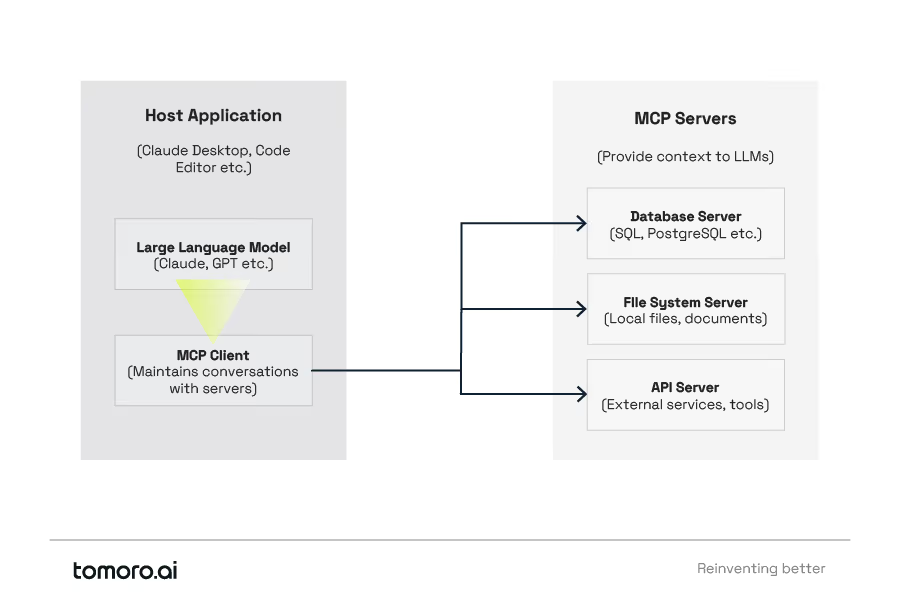

MCP uses a straightforward client-server architecture:

- Hosts: LLM applications, such as Claude Desktop or code editors, that serve as the environment
- Clients: Components within the host application, each maintaining a one-to-one connection with a server and tracking the capabilities that server offers, so the appropriate tools can be selected for a given context
- Servers: Services that provide context, tools, and/or prompts to clients on demand

This decoupled architecture creates a flexible system where LLMs can request information or tool access precisely when needed, rather than trying to incorporate everything into the initial prompt. By separating context management from tool execution, MCP enables more efficient and maintainable AI systems whose components can evolve independently.
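To make the host/client/server split concrete, here is a minimal in-process sketch in Python. Everything in it is a hypothetical stand-in: `ToyServer`, `ToyClient` and `ToyHost` are illustrative names, not the official MCP SDK, and real servers run as separate processes or remote services reached over a transport. Only the method names `tools/list` and `tools/call` and the JSON-RPC 2.0 message shape follow the MCP specification.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class ToyServer:
    """Hypothetical server: a name plus a table of plain Python tool functions."""
    name: str
    tools: Dict[str, Callable[..., Any]]

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        # Answer one JSON-RPC 2.0 request dict with a result or error dict.
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": name} for name in self.tools]}
        elif method == "tools/call":
            params = request["params"]
            output = self.tools[params["name"]](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": str(output)}]}
        else:
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


@dataclass
class ToyClient:
    """One client per server: it owns that connection and remembers the server's tools."""
    server: ToyServer
    next_id: int = 1
    tool_names: List[str] = field(default_factory=list)

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        message = {"jsonrpc": "2.0", "id": self.next_id,
                   "method": method, "params": params or {}}
        self.next_id += 1
        return self.server.handle(message)

    def discover(self) -> List[str]:
        # Ask the server what it offers and cache the tool names.
        response = self.request("tools/list")
        self.tool_names = [tool["name"] for tool in response["result"]["tools"]]
        return self.tool_names


class ToyHost:
    """The host application: creates one client per server and routes tool calls."""

    def __init__(self, servers: List[ToyServer]) -> None:
        self.clients = [ToyClient(server) for server in servers]
        self.routes: Dict[str, ToyClient] = {}
        for client in self.clients:
            for name in client.discover():
                self.routes[name] = client

    def call_tool(self, name: str, **arguments: Any) -> str:
        response = self.routes[name].request(
            "tools/call", {"name": name, "arguments": arguments})
        return response["result"]["content"][0]["text"]


# Usage: two independent servers behind one host.
host = ToyHost([
    ToyServer("maths", {"add": lambda a, b: a + b}),
    ToyServer("text", {"echo": lambda text: text}),
])
print(sorted(host.routes))              # ['add', 'echo']
print(host.call_tool("add", a=2, b=3))  # 5
```

Each client is bound to exactly one server, while only the host sees every tool. That is what lets a new server be plugged in without touching the others.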

What problem does it solve?

MCP addresses three fundamental challenges in AI system development:

- Communication language: How should systems communicate consistently, both internally and with third-party services?
- Message transport: How should these messages be transmitted efficiently?
- Context management: How can models maintain relevant context across complex, long-running tasks?

What makes MCP powerful is its elegant approach to these problems.

Communication language

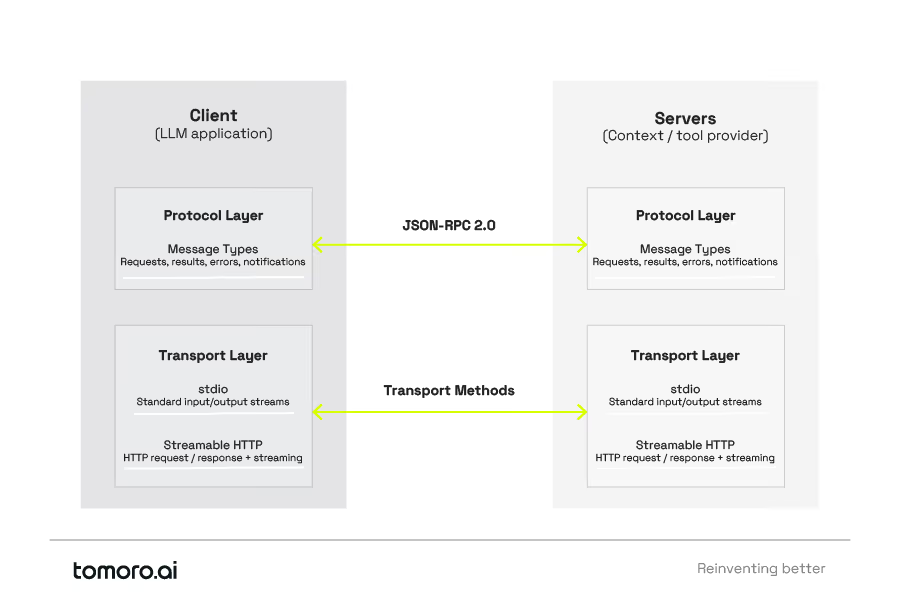

The protocol layer uses four JSON-RPC 2.0 message types:

- Requests: Messages that expect a response. Most are sent from client to server, though servers can also send requests to clients. Think of these as the questions your AI assistant asks when it needs information.
- Results: Data returned in response to requests: the answers to those questions.
- Errors: Structured failure information when a request cannot be fulfilled, giving a clear explanation when something goes wrong rather than a silent failure.
- Notifications: One-way, asynchronous messages that require no response, such as status updates that keep components informed without needing acknowledgment.

This minimal set creates clear, predictable communication patterns between MCP clients and servers, similar to how well-designed APIs function in modern software engineering.
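The four message types can be told apart by their fields alone. The Python sketch below, using only the standard library, builds one of each and classifies them: a request carries an `id` and a `method`, a notification has a `method` but no `id`, and replies carry either `result` or `error`. The method names `tools/list` and `notifications/initialized` and the error code -32601 ("Method not found") come from the MCP and JSON-RPC 2.0 specifications; the helper function names are hypothetical.

```python
import json
from typing import Any, Dict, Optional


def make_request(req_id: int, method: str,
                 params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    # A request carries an id so the reply can be matched to it.
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(req_id: int, result: Dict[str, Any]) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def make_error(req_id: int, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": code, "message": message}}


def make_notification(method: str,
                      params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    # No id: the receiver must not reply.
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def classify(msg: Dict[str, Any]) -> str:
    """Tell the four message types apart from their fields."""
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    if "error" in msg:
        return "error"
    if "result" in msg:
        return "result"
    raise ValueError("not a JSON-RPC 2.0 message")


examples = [
    make_request(1, "tools/list"),
    make_result(1, {"tools": []}),
    make_error(2, -32601, "Method not found"),
    make_notification("notifications/initialized"),
]
for m in examples:
    print(classify(m), json.dumps(m))
```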

Message transport

The transport layer offers two standardised methods:

- Standard Input/Output (stdio): Communication channels built into every operating system. The client launches the server as a local subprocess and exchanges messages through the server's standard input and output, creating a simple text pipe that needs no network configuration.
- Streamable HTTP: An HTTP-based transport that uses a single endpoint for both sending and receiving messages. It offers better compatibility with network infrastructure while supporting real-time, bidirectional communication between clients and servers.
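The two transports differ mainly in framing. On stdio, the MCP specification has each JSON-RPC message sent as a single line, delimited by newlines and containing no embedded newlines. Over Streamable HTTP, the client POSTs each message to the server's single endpoint and declares that it accepts either `application/json` or `text/event-stream` (Server-Sent Events) replies. The Python sketch below shows both using only the standard library; the endpoint URL `http://localhost:8000/mcp` is a hypothetical placeholder, and the HTTP request is built but never sent.

```python
import io
import json
import urllib.request
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    # json.dumps without indent never emits a raw newline (newlines inside
    # strings become the two characters \n), so one line is one message.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    # Read newline-delimited messages until the stream is exhausted.
    for line in stream:
        if line.strip():
            yield json.loads(line)


def build_http_post(endpoint: str, message: Dict[str, Any]) -> urllib.request.Request:
    # Streamable HTTP: each client message is POSTed to the single MCP endpoint,
    # and the Accept header lists both a plain JSON reply and an SSE stream.
    return urllib.request.Request(
        endpoint,
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Accept": "application/json, text/event-stream"},
        method="POST",
    )


# stdio demo: io.StringIO stands in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/message",
                     "params": {"level": "info", "data": "line one\nline two"}})
pipe.seek(0)
received = list(read_messages(pipe))

# HTTP demo: the request object is only constructed here, not sent.
request = build_http_post("http://localhost:8000/mcp", received[0])
```

Note that the second message survives the round trip intact even though its text contains a newline, because the JSON encoder escapes it.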

Context management

MCP's architecture enables strategic context management through:

- On-demand retrieval: Models can request specific contextual information exactly when needed, rather than hoping to find it in a lengthy context window
- External storage: Context can be maintained outside the model's limited context window, in specialised servers
- Persistent memory: Information can be maintained across multiple interactions, allowing for truly long-running tasks
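The three mechanisms above can be illustrated with a toy context store. This is a hypothetical sketch, not part of MCP itself: `MemoryStore`, `remember` and `recall` are illustrative names, a JSON file stands in for whatever database a real server would use, and substring matching stands in for proper search. In a real deployment, an MCP server would expose functions like these as tools or resources, so the model fetches only the notes it needs.

```python
import json
import os
import tempfile
from typing import Dict, List


class MemoryStore:
    """Hypothetical context store that lives outside the model's context window.

    Notes are written to a JSON file, so a new instance (a later session)
    sees everything earlier sessions saved.
    """

    def __init__(self, path: str) -> None:
        self.path = path
        self.notes: Dict[str, str] = {}
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                self.notes = json.load(f)

    def remember(self, key: str, text: str) -> str:
        # External storage: persist the note to disk immediately.
        self.notes[key] = text
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(self.notes, f)
        return f"saved {key}"

    def recall(self, query: str) -> List[str]:
        # On-demand retrieval: return only notes whose key or text mentions
        # the query, instead of pasting every note into the prompt.
        q = query.lower()
        return [text for key, text in self.notes.items()
                if q in key.lower() or q in text.lower()]


# Usage: two "sessions" sharing one store on disk.
path = os.path.join(tempfile.mkdtemp(), "memory.json")
session1 = MemoryStore(path)
session1.remember("db-schema", "orders table has columns id, customer_id, total")
session1.remember("style", "Use British spelling in reports")

session2 = MemoryStore(path)  # a later interaction with a fresh object
print(session2.recall("orders"))  # ['orders table has columns id, customer_id, total']
```

Because `session2` is a fresh object that reloads the file, it sees what `session1` saved (persistent memory in miniature), and `recall` hands back only the matching notes (on-demand retrieval).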

In essence, MCP embodies the principle that it's not the size of the context window that matters; it's how you use it. Rather than simply racing to expand context windows (as many AI companies were doing), Anthropic developed a more elegant solution that enables models to strategically access and manage relevant information when needed.

This approach directly addresses the "U-shaped retrieval" challenge, where models struggle to access information in the middle of large context windows.

When to use MCP

Use MCP when you need:

- Real-time access to external data
- Your LLM to take actions through tools
- Connections to multiple systems (databases, APIs, etc.)
- Standardised interfaces across different data sources
- Coherent context management over long-horizon tasks, especially those involving multiple tool calls
- Planning and execution of multi-step solutions to complex problems
- An extensible system where new tools and capabilities can be added without disrupting core functionality

Consider alternatives when:

- Working with static, unchanging data
- Building simple, single-purpose applications
- You don't need your LLM to execute actions
- Handling short, self-contained tasks that don't require persistent context
- Your interaction comfortably fits within the context window of the LLM
- Dealing with low-complexity scenarios that don't require specialised tools

MCP is primarily about extending your LLM's capabilities beyond generating text: letting it access fresh information, maintain context across complex interactions, and interact with other systems in real time.

Let's dive in…

In the following video, we'll get hands-on and set up an MCP server that lets Claude perform real-time web searches, much like the search feature in ChatGPT.
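For readers who prefer text, the exchange such a server handles can be sketched without an SDK. Below is a hand-rolled stdio server in Python exposing a single hypothetical `web_search` tool whose search backend is a stub (`fake_search`). The message shapes (`initialize`, `tools/list`, `tools/call`, `inputSchema`, text `content` items) follow the MCP specification; the protocol version string, server name and tool description are assumptions. In practice, the official MCP SDKs for Python and TypeScript handle the handshake, framing and validation for you, so treat this as an illustration of what travels over the wire rather than a production server.

```python
import io
import json
from typing import Any, Dict, Optional, TextIO

PROTOCOL_VERSION = "2025-03-26"  # assumption: the spec revision this sketch targets

TOOLS = [{
    "name": "web_search",  # hypothetical tool name
    "description": "Search the web and return the top results as text.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}]


def fake_search(query: str) -> str:
    # Placeholder: a real server would call a search API here.
    return f"(stub) top results for: {query}"


def reply(req_id: Any, result: Dict[str, Any]) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def error(req_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(msg: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    if "method" not in msg or "id" not in msg:
        return None  # notifications (and stray responses) get no reply
    method, req_id = msg["method"], msg["id"]
    if method == "initialize":
        return reply(req_id, {"protocolVersion": PROTOCOL_VERSION,
                              "capabilities": {"tools": {}},
                              "serverInfo": {"name": "toy-web-search", "version": "0.1.0"}})
    if method == "tools/list":
        return reply(req_id, {"tools": TOOLS})
    if method == "tools/call":
        params = msg.get("params", {})
        if params.get("name") != "web_search":
            return error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        text = fake_search(params.get("arguments", {}).get("query", ""))
        return reply(req_id, {"content": [{"type": "text", "text": text}], "isError": False})
    return error(req_id, -32601, f"Method not found: {method}")


def serve(stdin: TextIO, stdout: TextIO) -> None:
    # Newline-delimited JSON-RPC: one message per line in, one per line out.
    for line in stdin:
        if not line.strip():
            continue
        response = handle(json.loads(line))
        if response is not None:
            stdout.write(json.dumps(response) + "\n")
            stdout.flush()


# Demo: play the client's side with in-memory streams.
# As a real subprocess server you would call serve(sys.stdin, sys.stdout).
client_lines = [
    {"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {}},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
    {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
     "params": {"name": "web_search", "arguments": {"query": "Model Context Protocol"}}},
]
incoming = io.StringIO("".join(json.dumps(m) + "\n" for m in client_lines))
outgoing = io.StringIO()
serve(incoming, outgoing)
replies = [json.loads(line) for line in outgoing.getvalue().splitlines()]
```

Four messages go in but only three replies come out: the `notifications/initialized` message is a notification, so the server stays silent.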

The Path Forward

MCP has evolved from an Anthropic initiative into an industry standard. Its architecture connects LLMs to tools and data precisely when needed.

OpenAI, Google, AWS, Microsoft, and PydanticAI have all embraced MCP, with specialised servers emerging daily. While simple implementations like web search show immediate value, the true power lies in MCP's modularity.

This rare industry convergence signals something profound: MCP solves fundamental problems that benefit the entire AI ecosystem.

Interested in bringing this modular approach to your AI strategy? We'd be happy to discuss the possibilities.