Introduction

TLDR: much of what you already learned about prompting LLMs (Large Language Models) is still important, but there are key differences.

- Few-shot prompting (giving the model a few concrete examples in your prompt) is less important

- Chain of thought prompting (explicitly requesting the models internal reasoning in the final output) is completely unnecessary - as its baked into the model by default

- The ambition of your prompts and use cases should increase to match the increased cognitive abilities of the new generation of models!

Finally, there is no substitute for using these models extensively, and testing them with your own areas of expertise. Building intuition is far more important than slavishly following a ‘prompting framework'. Don't outsource your thinking to online promptfluencers (even me 🙂!)

What you'll learn

In this blog I'm going to talk about some personal best practices I've uncovered from extensive usage and testing of the latest generation of reasoning models from OpenAI (as of this blog, o1, o1 pro, o1 mini) and r1 from DeepSeek. Most of my examples will use o1 pro because its the most capable model and the one I use every day. But the key lessons should apply to all models of its kind.

For a round up of how best to prompt the previous ‘4o' generation of AI models, check out my previous blog here, which covered foundational concepts like Chain of Thought CoT, Few Shot prompting, and Metaprompting:

https://tomoro.ai/insights/prompt-engineering-is-dead

(If youre newer to AI or not steeped in ML jargon, keep an eye out for quick definitions in parentheses throughout the text—this should help you follow along.)

Heres some key themes I'll cover in the blog:

-

Context is king

-

Theory of mind

-

Clear instructions, few examples

-

The bitter lesson

Context is king

Without context, all of the intelligence in the world is useless. Imagine Einstein had stayed a patent clerk and never applied his considerable brain to problems of physics, or Tolstoy had never picked up a pen and fully committed to his peasant lifestyle long before Anna Karenina.

The same can be said for reasoning models. Many of the anti-patterns or poor results I've seen from users disappointed in o1 or o1 pro come from prompters using single-line prompts or asking simple questions that previous-generation models could also trivially answer.

(health warning: this may or may not be a true example - but demonstrates the challenge many early users bump into!)

One of the big drawbacks of previous LLMs was that the more information you gave them, the worse they would do in recalling or synthesising that information. Hence the need for complicated, multi-context window prompts to break problems down into manageable snippets. For o1 pro this is not the case. It thrives on difficult tasks and heaps of input data. Don't just give the model a task. Give it background on your motivations, your role, the task you have in mind, approaches you've already tried, intuitions you have about the problem space, and relevant data to the problem (e.g. a codebase, a document, a Reddit thread… remember that the most reliable RAG (Retrieval Augmented Generation) system for getting what you want into the model is human-operated copy and paste!) This context is as much for YOU to actually understand the task as it is for the model.

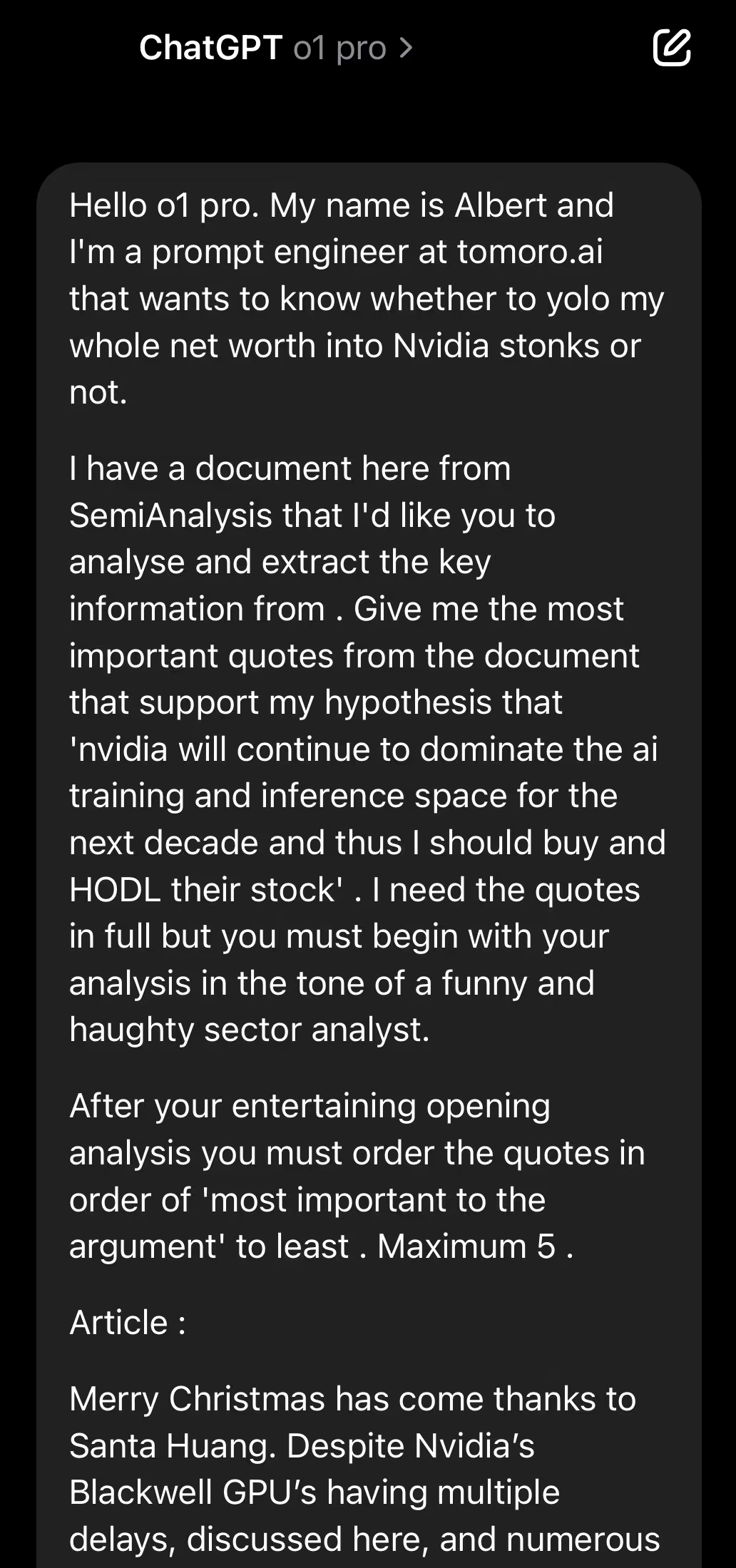

o-series models prompting example: go big on context

Prompt breakdown:

- Who I am

- What I want

- The documents I'm providing

- What I want it to do with those documents

- The ‘role I want it to simulate

- The tone I'm looking for

- My artificial constraints

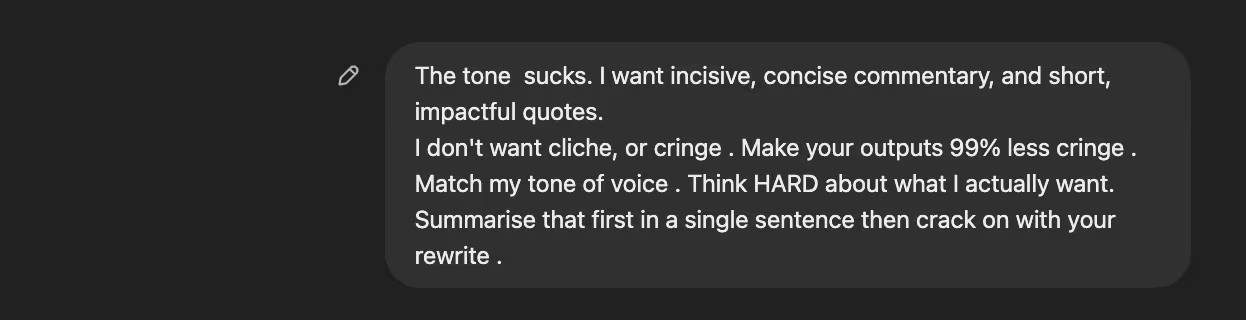

me:

o1 pro:

Theory of mind

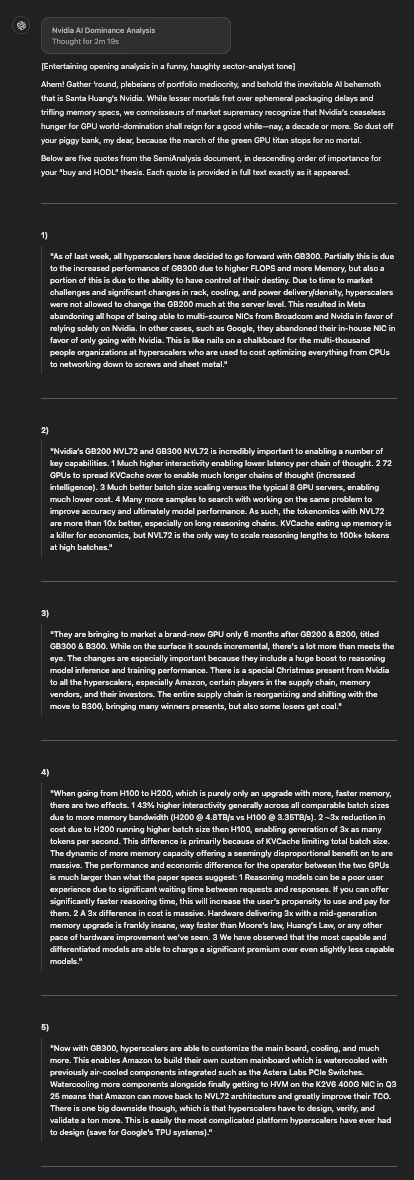

Right, context-maxxing got us some interesting content in here but the tone leaves something to be desired and it's far too long to be useful to a busy prompter like myself.

Next, we need to get the model to better model my theory of mind (i.e. show it how I think). A posh way of saying: “get it to properly understand what I want and who I am."

Remember that many of us don't know what we want until we see what we don't want.

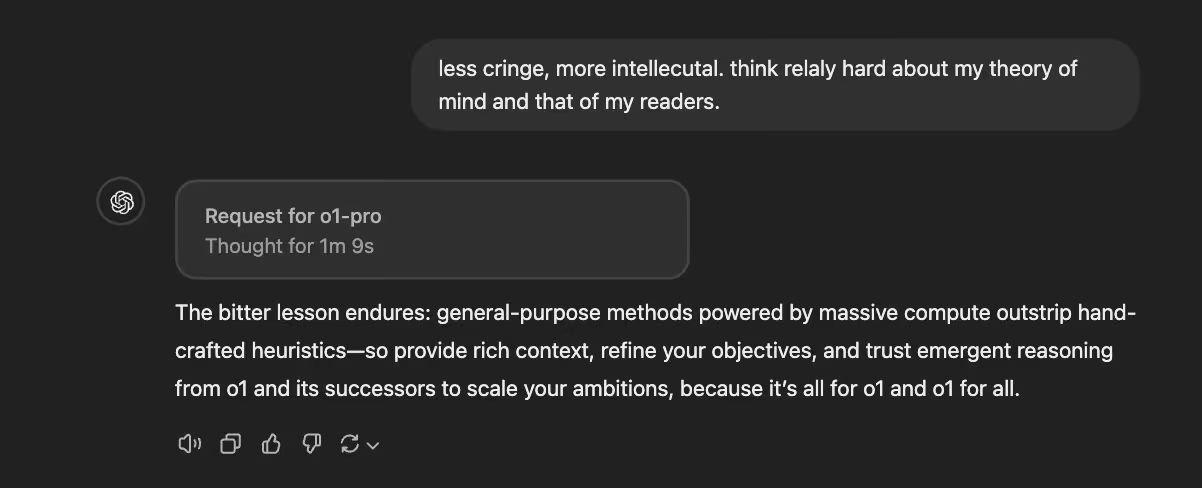

me:

o1 pro:

Better, eh?

What you should notice is that you can start with a slightly silly prompt and still end up with a brilliant response by using that first prompt/ response pair as a jumping-off point to find what you like and don't like, and iterate from there. You'll also see the unreasonable effectiveness of theory of mind prompting in improving reasoning model outputs. Getting the model to explicitly state its model of you and what you want can help with debugging its outputs, as, with OpenAI models, you dont have direct access to the Chains of thought (the hidden reasoning steps) its using at reasoning time.

After extensive prompting sessions, Ive also asked the model to estimate my Big 5 or OCEAN traits, and the results surprised me (it modelled me as far more conscientious and less agreeable than I had previously thought).

To know thyself is the beginning of wisdom.

Clear instructions, few examples

What the keener-eyed amongst you will also realise is that at no point did I give the model an example of the writing that I wanted or try to get it to output ‘chain of thought' in its outputs to me. This is because the model does not need to learn ‘implicit' reasoning from example inputs (the type of imitative learning we call ‘few-shot' prompting) nor does it need to output chain of thought in the final answer to do reasoning (as its GPT-style model forebears did).

Its a continuation of a secular trend of human inputs becoming less important to model capability as models get better. (For example, with GPT-3.5 we had to write a 50-shot few-shot prompt for image moderation. With 4o mini, its analogous successor, our moderation prompt was perhaps at best 5-shot.)

Reasoning models are — you've guessed it — good at reasoning, and use specialised tokens to this effect. So we don't need to provide explicit instructions and implicit examples, or request chain of thought in its outputs. We can just give it feedback like we'd give an unreasonably clever grad student. It's already able to answer your question; you just havent figured out the best way to ask it yet.

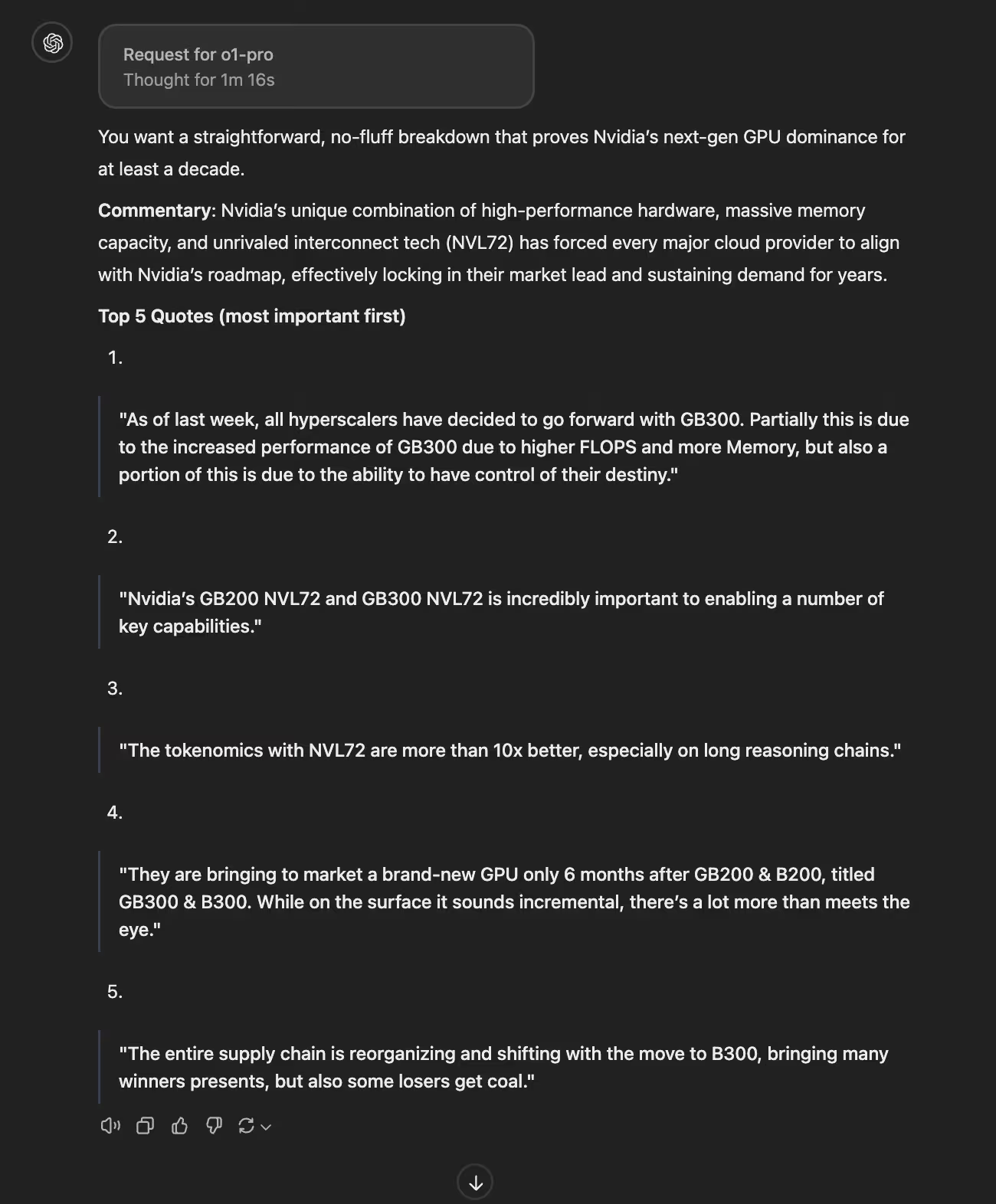

This is borne out in a recent paper from Microsoft Research (https://arxiv.org/abs/2411.03590), a follow-up to the paper spotlighted in my previous blog. Where SOTA (state-of-the-art) results with 4o ‘Medprompt' came from dynamic few-shot prompting (retrieving the most relevant examples to help answer questions), the research team switching to o1 preview found

“a diminishing necessity for elaborate prompt-engineering techniques that were highly advantageous for earlier generations of LLMs",

and

“In some cases, few-shot prompting even hindered o1-previews performance",

but

“Writing a custom-tailored prompt that describes the task in greater detail had a marginal positive effect."

Prompt simply, but prompt with taste and clarity about what you want. Rick Rubin would be proud.

The bitter lesson

One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.

Richard Sutton

When it debuted with benchmark-smashing aplomb, everyone wanted o1 to be powered by fancy techniques, like the Monte Carlo Tree Search (MCTS) used by AlphaZero.

The reality was much simpler:

Its “just" an LLM trained with RL (Reinforcement Learning, a training method where an AI ‘tries things out and gets rewarded for better outcomes). Unlike the RLHF previously employed on frontier models, the objective here is not ‘making words that are more pleasing to humans' but, ‘picking the best reasoning paths to answer the question', a much more powerful technique.

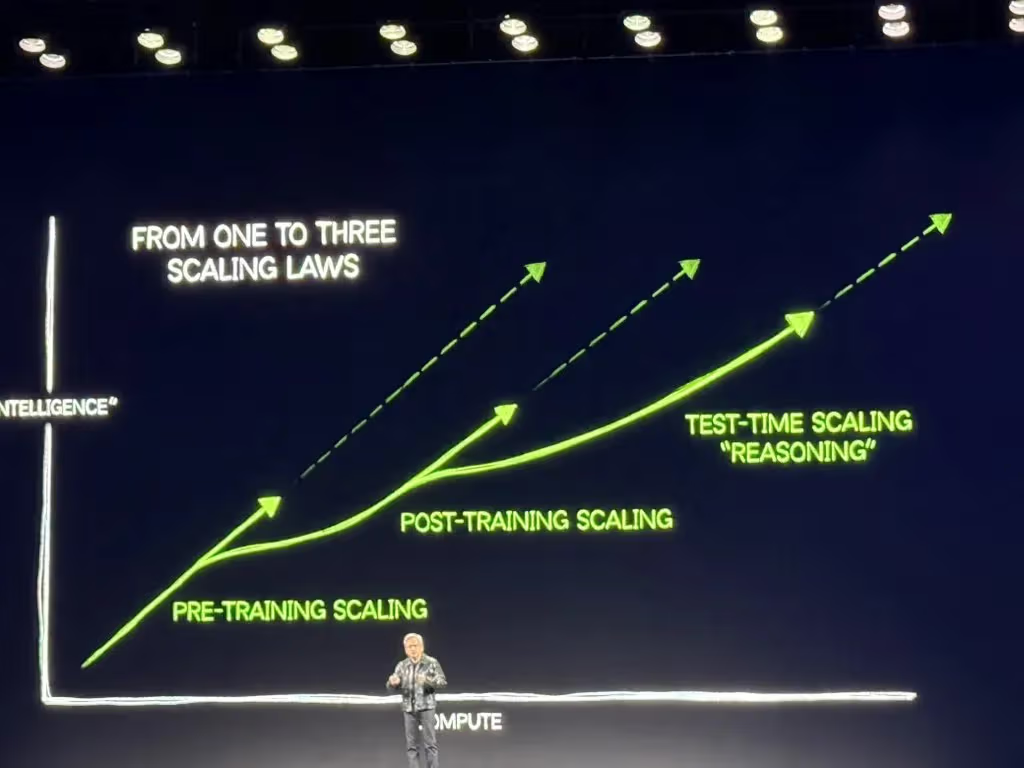

With o1, OpenAI figured out how to scale search during inference time with Reinforcement Learning, opening up a whole new set of scaling laws, just as ‘pre-training' scaling seemed to falter, and just in time for the next generation of GPUs.

DeepSeek confirmed this approach with their recent paper on R1, which I would highly recommend reading here https://github.com/deepseek-ai/DeepSeekR1/blob/main/DeepSeek_R1.pdf if you want to learn more. According to DeepSeek,

“Behaviours such as reflection … arise spontaneously. They are not explicitly programmed but emerge as a result of its interaction with the RL environment.

If theres one thing to take away from this blog, its that:

The bitter lesson endures: general-purpose methods powered by massive compute outstrip hand-crafted heuristics—so provide rich context, refine your objectives, and trust emergent reasoning from o1 and its successors to scale your ambitions, because its all for o1 and o1 for all.

(thanks o1-pro - I fed it this blog and the full bitter lesson and it came up with the ending above)

Next up: o3

When o1 came out we called it a GPT2 moment for a new class of models, what we meant by that is that while the advances were significant, it represented a new path through which to optimise and realise more gains from the same fundemental breakthrough (like those we saw between GPT 2 > 3 > 3.5 > 4).

o3 is around the corner. This model promises another breakthrough in capabilities, simply by scaling the RL that powers o1 reasoning models , distilling the outputs back into the base model.

But as my fellow o1 pro users will know, the largest reasoning models are not suitable for most enterprise scale workloads, due to cost and latency.

This is where the unreasonable effectiveness of model distillation comes in.

But more of that in my next blog, a manifesto for building reliable, flexible, ultra low latency multi-agent systems with o3 mini.