*or is it just beginning?

In this blog I talk about the increasing complexity associated with academic research on prompt engineering; as we move from simple prompt-response interactions into more complex human-AI workflows.

In our follow-up blog, we will cover the human and business implications of this paradigm shift.

Prompting, meet Meta-Prompting

Charles Duhigg at the New Yorker recently gave us the definitive guide to recent events at OpenAI and the fascinating dynamics between Microsoft and OpenAI within their unusual but highly influential partnership.

Putting aside the dynamics of that corporate relationship, the key insight I drew from the piece was this section:

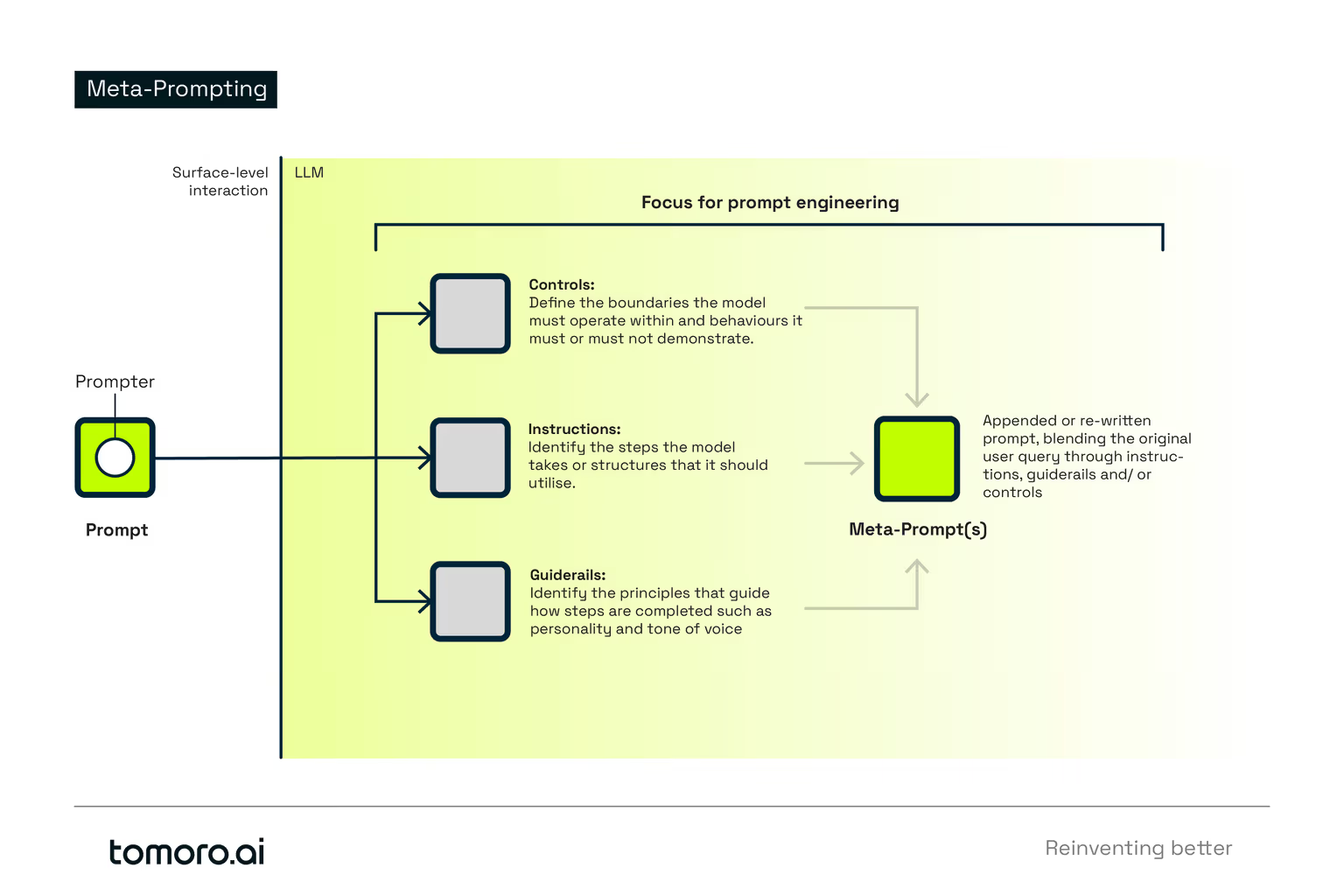

‘Anytime someone submitted a prompt, Microsoft’s version of GPT-4 attached a long, hidden string of meta-prompts and other safeguards - a paragraph long enough to impress [author] Henry James.’

This stood out to me because it’s the first time I have seen the concept of meta-prompts so elegantly represented in a mainstream publication, but as someone who has been building with GPT-3 since 2021, it’s something I’ve personally been researching for a long time. Otherwise known as crafting the human-AI interaction or dialogue; Microsoft’s metaprompts anticipate user behaviour and inform GPT-4 how it should act, what it should do and what it should avoid. In short, meta-prompts are the primary way in which GPT-4 can be customised for Microsoft's array of AI use cases.

There is a misconception that Prompt Engineering is only about the end user of an LLM system, e.g. ChatGPT, adding certain magic phrases or incantations to drive better performance. This leads to folks assuming the practice has a similarly short shelf life to the ‘search engine specialists’ of the 90s, before search engines became intuitive for the end user to query. The domain is further undermined by the typical snake-oil “25 things you NEED to know about Chat-GPT’ hype cycle that has unfortunately and inevitably taken off around LLMs recently.

Early advances in prompt engineering

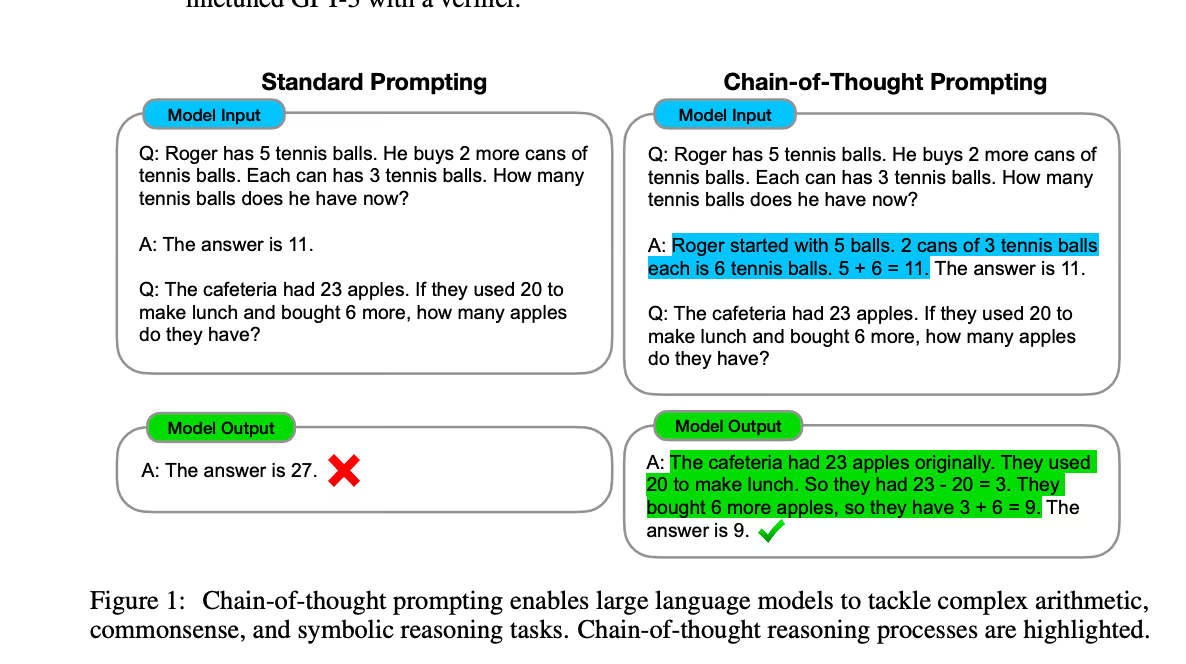

In 2022 Wei et al effectively kicked off Prompt Engineering as the next big thing in ML research, finding that Chain of Thought prompting elicits reasoning in LLMs. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. Simply by giving the model examples of arithmetic questions that had been fully worked through, the LLM was able to correctly answer questions it previously got wrong This is called Chain of Thought (CoT) reasoning, and it's still integral to the performance of prompt-engineered systems, as we will see later.

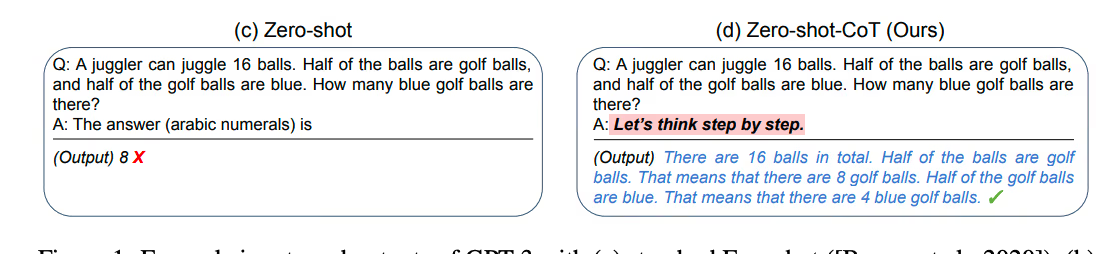

Whilst Wei used a series of examples (called few-shot prompting) to induce a Chain of Thought reasoning process, Kojima et al subsequently found zero-shot (asking a question with no examples) improvements simply by adding the phrase ‘Let’s think step by step’ to their prompts. Large Language Models are Zero-Shot Reasoners

Prompt engineering in 2023

In 2023, Prompt Engineering has gone from strength to strength, as larger context windows (up to 200k tokens) allow ever more elaborate and intricate prompts and creations, and prompt engineering establishes itself as a new abstraction layer for ML research.

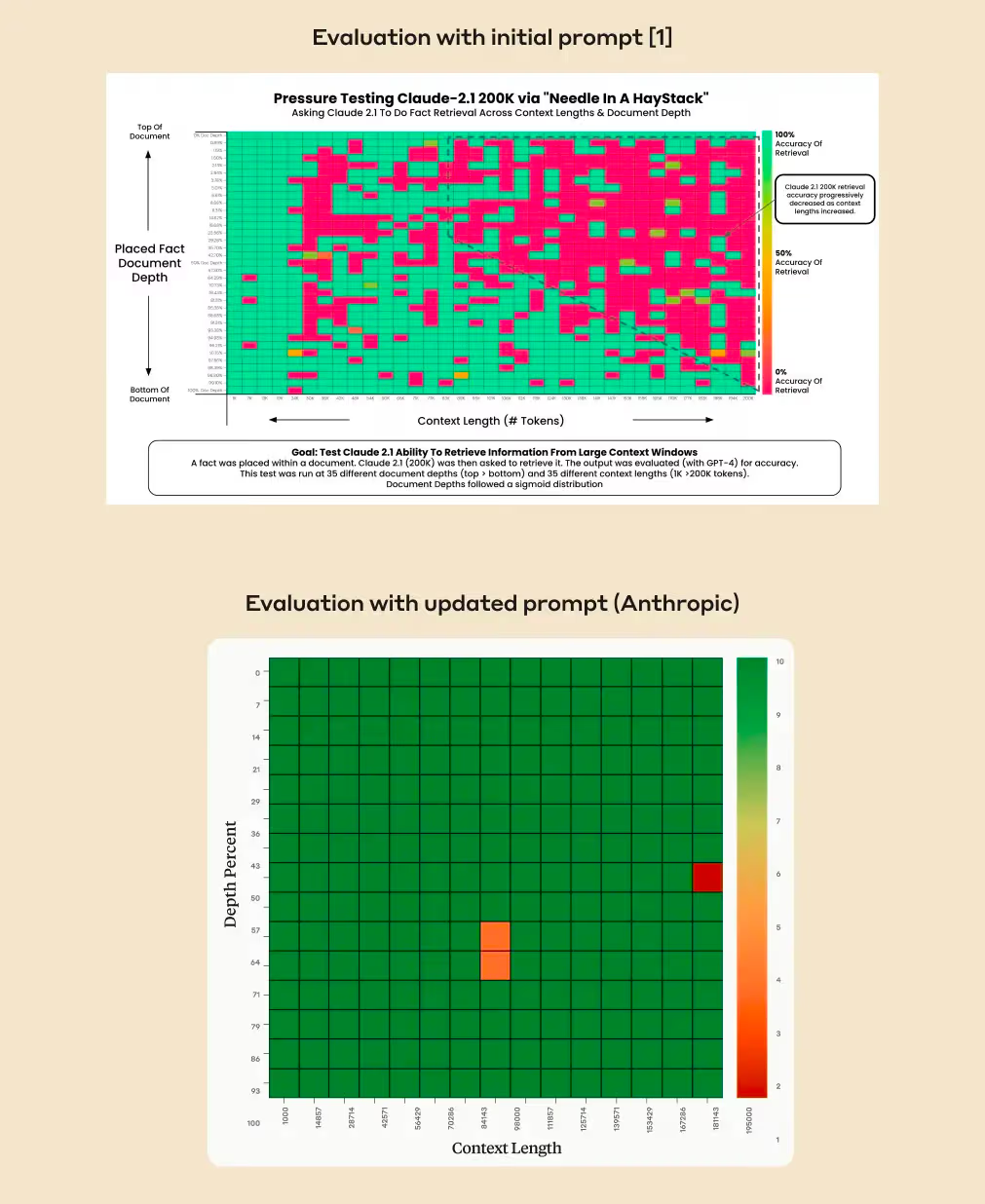

And as models evolve, so too must our prompting strategies. Take the recent Anthropic blog on this topic as a good example. Long context prompting for Claude 2.1. In an initial test done by an external party, Claude 2.1 struggled to recall out-of-context sentences within documents - the ‘needle in the haystack’ problem. Anthropic’s response: that ' as a result of general or task-specific data aimed at reducing such inaccuracies, the model is less likely to answer questions based on an out of place sentence embedded in a broader context.' The layman’s explanation of this would be, that the safety measures Anthropic put in place to prevent inaccuracies or harms, means the model refuses to answer rather than give an answer that could be risky.

Yet, all it takes is one sentence for everything to change. Simply adding the sentence “Here is the most relevant sentence in the context:” to the start of Claude’s response was enough to raise Claude 2.1’s score from 27% to 98%. The core technique here is writing ‘in the character’ of Claude 2.1. Encouraging it to find the most relevant sentence in context and inducing a Chain of Thought process to find that sentence, regardless of how unusual it was compared to the rest of the context.

I can verify that this is a useful technique beyond the benchmarks. Indeed, I spend much of my time at Tomoro, also writing ‘in the character’ of our AI agents, to help the model generalise to new tasks by imitation and chain of thought reasoning, that they themselves help craft, as opposed to only following instructions. A core part of our proposition is that AI agents are collaborative colleagues rather than instruction-following automatons. Indeed, a lot of the work I do feels more like English Literature, than Data Science. But actually, for production-grade systems, this isn’t the case. We need good dialogue, but we also need a more complex prompting paradigm which involves additional, but still relatively simple, ML techniques.

Increasing complexity, increasing capability.

The programming complexity of Prompt Engineering has grown in tandem with the latent capabilities (and dangers) of the underlying models, as higher-risk use cases are made more possible thanks to GPT-4’s capabilities.

MedPrompt is a fascinating research paper recently released by Microsoft Research, which pushes the limits of Prompt engineering as a discipline. The power of prompting

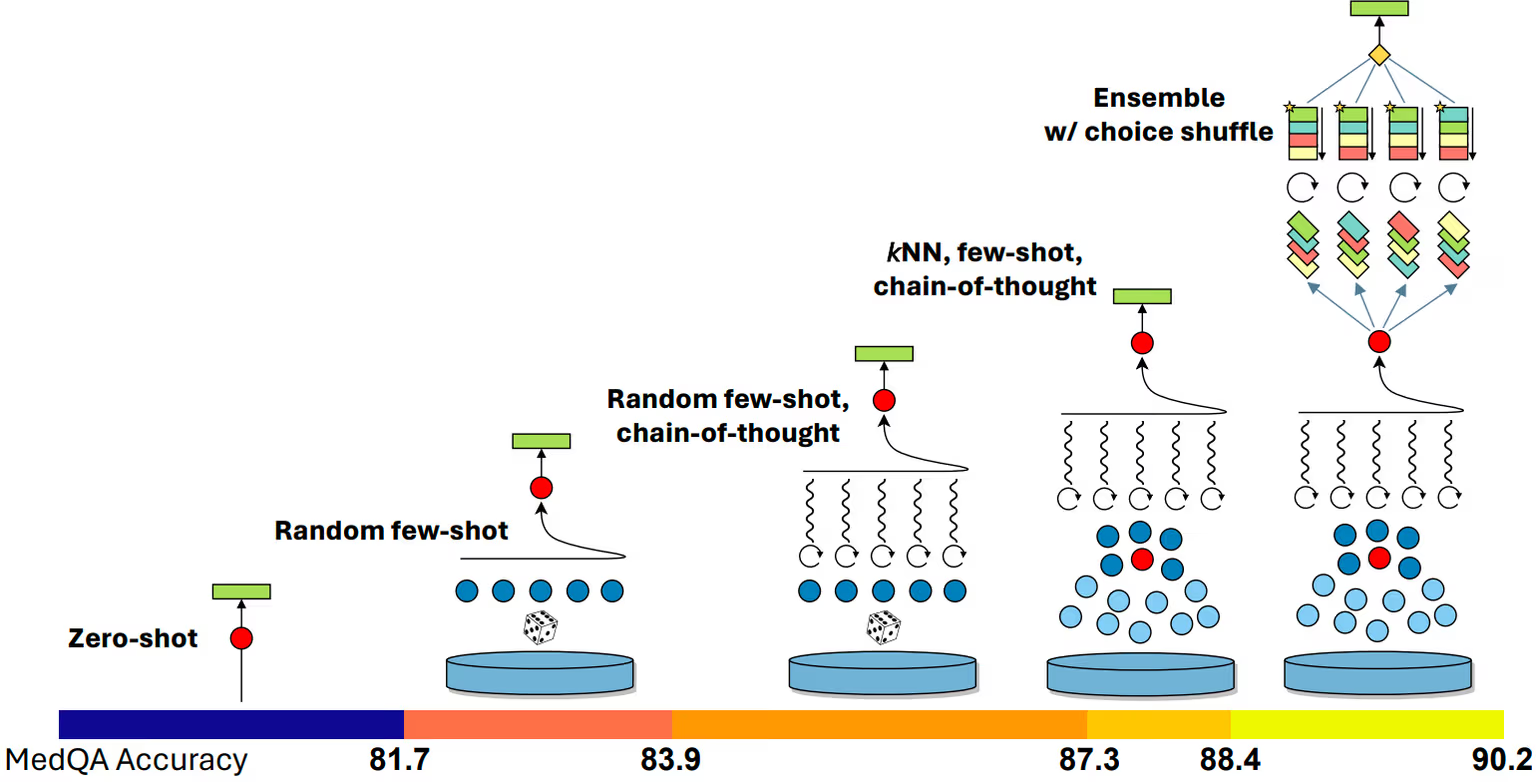

In this groundbreaking research by Microsoft, GPT-4, a generalist AI model, transcended expectations by outstripping specialized medical AI benchmarks through innovative prompting strategies, known collectively as "Medprompt." According to Eric Horvitz, Microsoft’s Chief Scientific Officer, "The study shows GPT-4’s ability to outperform a leading model (Med-PALM-2) that was fine-tuned specifically for medical applications, on the same benchmarks and by a significant margin." Medprompt fuses "kNN-based few-shot example selection, GPT-4–generated Chain-of-Thought prompting, and answer-choice shuffled ensembling," pushing GPT-4's performance over 90% on the MedQA dataset and slicing the error rate by 27% against its finely-tuned counterpart, MedPaLM 2.

Highlighting the broader impact, Horvitz notes, "The Medprompt study...appear[s] to be valuable, without any domain-specific updates to the prompting strategy, across...a diversity of domains." This not only challenges the assumption that domain expertise necessitates fine-tuning but also presents a cost-effective alternative with potent implications for sectors lacking the resources for extensive training.

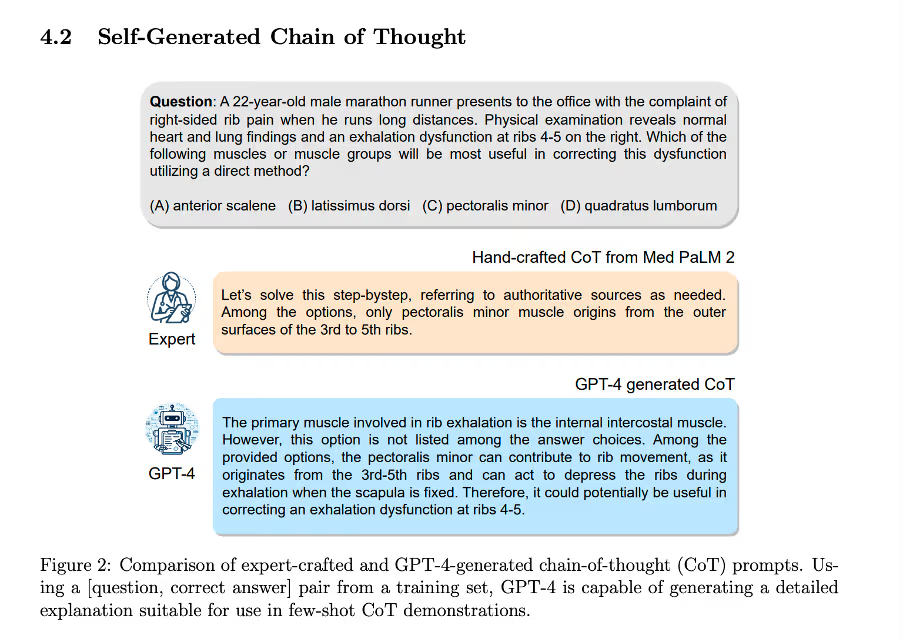

As you can see, based on the write-up, and the diagram – things have gotten a little more complex since the ‘let’s think step-by-step’ days, although Chain of Thought reasoning data is still vital to this evolved approach. In this example, GPT-4 creates an expert chain of thought data, reducing reliance on human-crafted datasets.

Dynamic few-shot prompting

But Chain of Thought reasoning is only one part of their solution. Another key innovation is in dynamic few-shot prompting. That is, understanding the user query, and matching it to similar question-answer CoT prompts that have already been crafted and stored in a vector database. This means that for every new question asked, the most relevant examples of reasoning are selected, and used to craft the prompt that’s sent to GPT-4 to answer the user's question. The authors show a marked improvement using this K-nearest neighbour (KNN) approach, compared to a randomly chosen few-shot CoT prompt. Further innovations like order shuffling, aim to reduce the very humanlike cognitive biases LLMs suffer from in terms of answering multiple-choice questions (preferring certain answers over others).

It’s worth noting that even with these additional steps, with generally capable models like GPT-4, which can produce high-quality synthetic data, the barrier to entry to squeeze the best out of these models is much lower than say, doing your own finetuning or pre-training of Large Language Models.

Jack Clark of Anthropic has an excellent phrase about ‘capability overhangs’ associated with LLMs, meaning that the inherent capability surface of these models is so vast that even after they are no longer state of the art, researchers will continue to unlock latent capabilities. This means that our focus on the practice of prompt engineering AI systems safely will become ever more important with time. In short: Prompt Engineering isn’t dead - it just got started.

Stay tuned for the follow-up to this blog where I talk with my colleague Sam Netherwood about applying these lessons to enterprise use cases. In the meantime, check out OpenAI’s useful guide to prompt engineering here in their API documentation. https://platform.openai.com/docs/guides/prompt-engineering