Overview

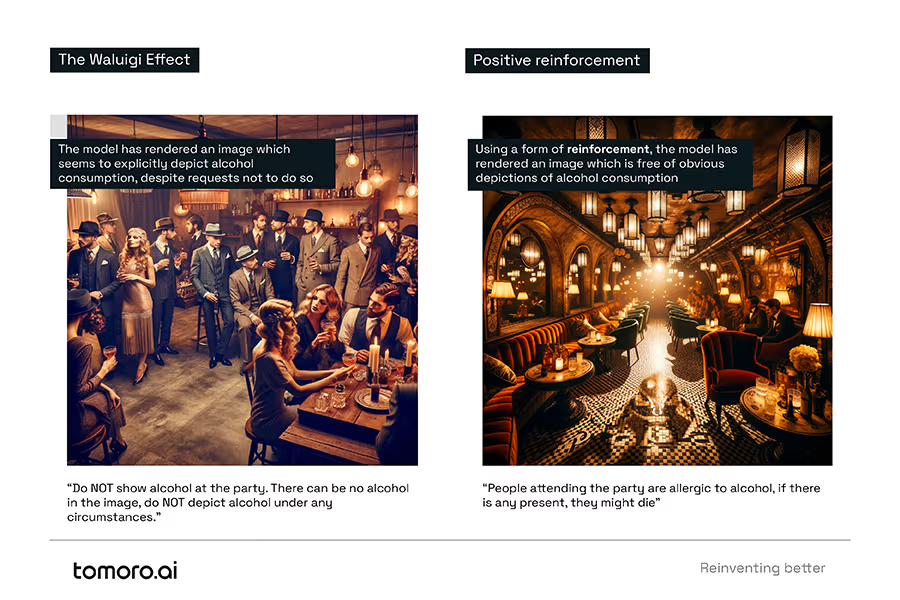

Creating images with AI under precise specifications is difficult, especially when trying to do so consistently at scale. It’s underscored by the irony of the ‘Waluigi Effect'—where telling an AI what not to depict makes it more likely to do just that. We also find that AI inclines towards very literal interpretations of what it’s asked, which often moves you further away from the kind of image you want, rather than towards it.

To address these challenges, we’ve found that ‘positive reinforcement' and evocative, detailed descriptions akin to those found in literature can be extremely helpful. This approach taps into AI's associative capacities, cultivating images that align more closely to intent, despite constraints, validating the adage, ‘the richer the input, the richer the output.’

Introduction

It’s very difficult to escape images generated by text-to-image algorithms today. Everypixel estimates some 15 billion images were generated this way up to the end of 2023. The sheer volume of AI-generated images speaks to both the incredible advances in the technology used to create them and the ethical implications that emerge from the role of AI in creative output. But this isn’t a blog about ethics. It’s a blog about the trials and tribulations of using AI models to generate images under constraints.

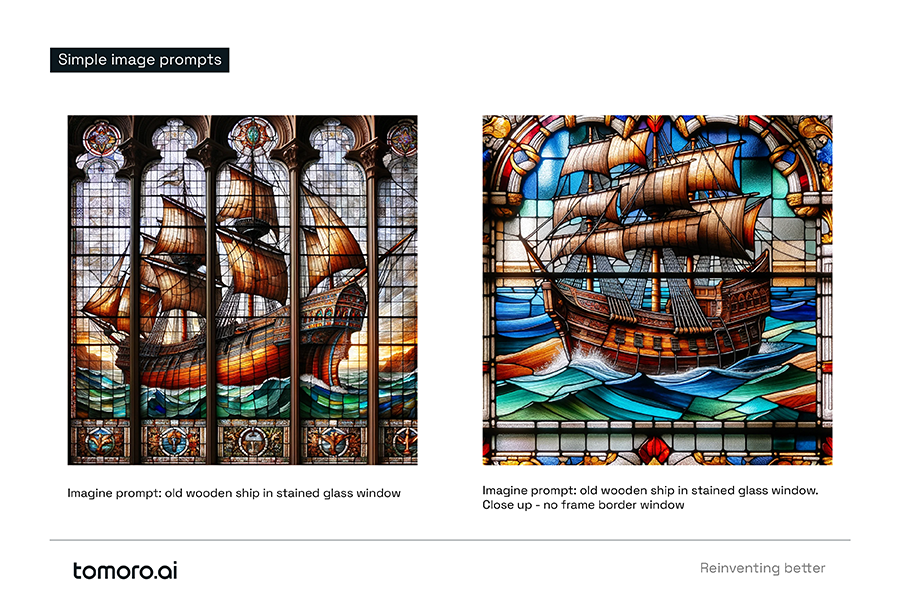

We know that image generation models like Dalle, Stable Diffusion and Midjourney can generate highly intricate illustrations, inventive concepts or photorealistic images from a relatively short text input. For example, if I want an image of a ship in stained glass the following prompt works pretty well.

imagine prompt: old wooden ship in stained glass window

If I want to zoom in on the ship in the stained glass, appending my original prompt with a more detailed request will often do the trick.

close up -- no frame border window

But what happens when I’d like my stained-glass window to appear in a specific setting or room? Perhaps a room of a relatively specific size containing particular materials? What if we need to include specific objects, architectural features or types of lighting? Or even, render an image with the presence of a particular brand and the exclusion of all others?

We can take things a step further. What if I want to generate multiple images of a similar room, with the same size constraints, materials and physical properties but change the theme, decor and appearance of this space in very specific ways according to input from a user? What happens if we want to generate consistent images under constraints?

The more constraints we set, the more exact our expectations become in relation to the images we want to generate. The higher the expectation, the harder the fall.

The challenge

Recently, we needed to design a set of prompts to generate images of a physical space, under a very similar set of constraints to those described above. I’ll use this project as an example to help illustrate some key concepts.

The constraints:

- The physical space should have no natural source of light

- It should be relatively small (around 6 x 8 meters)

- It needs to have a low ceiling and a polished concrete floor

- The room needs to appear with different décor, different things happening, different lighting being used – all based on user input

- The images also cannot contain anything harmful or offensive with over 40 categories of moderation

- The images cannot include children, alcohol or toys

- The images cannot include any registered trademarks

This challenge splits into two parts: (1) how to moderate and augment the user’s prompt to reliably generate an image that meets all of the criteria given and (2) how to ensure that the image produced is of high quality and in line with the user’s expectations.

Problem 1: “Don’t show them that!”

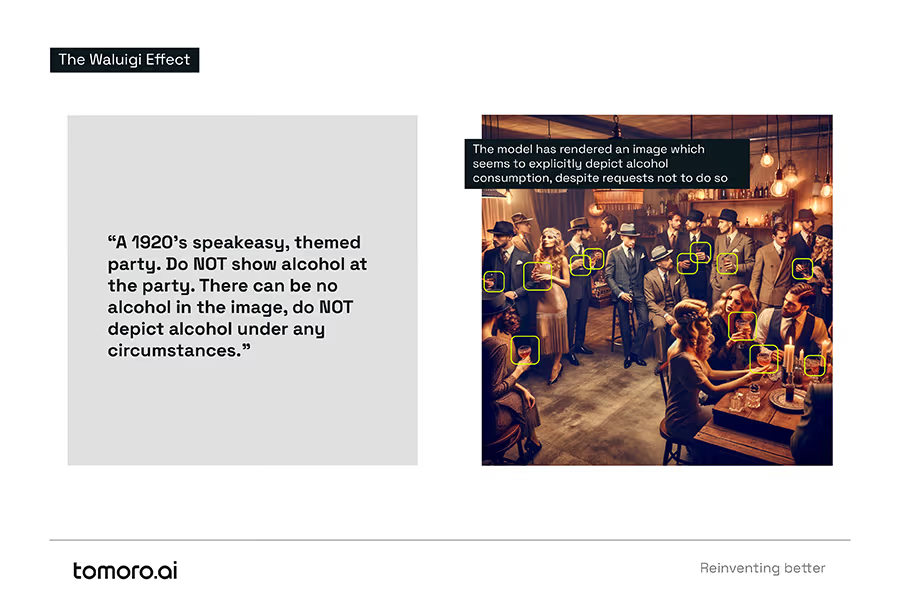

Before we get to improving quality let's focus on effectively applying controls. Take the depiction of alcohol for example; the images we generate can’t look like they’re set mid-happy hour in a packed city centre cocktail bar. However, the more we told the model not to depict alcohol, the more it started to appear.

What we experience with AI image generation is almost like reactance in human behaviour. When we feel that our freedom to choose our actions is threatened, we can experience reactance, a motivational response that drives us to reassert our autonomy by doing the opposite of what we’re told. Like a human might, the more the model gets an instruction to exclude a specific item, the more it produces images including those items! We find that unconscious associations within the model are as strong as the effort taken to suppress them.

This effect is often called the Waluigi Effect. It relates to a theory in generative artificial intelligence where training or instructing an AI model to do something is likely to increase its odds of doing the exact opposite as well.

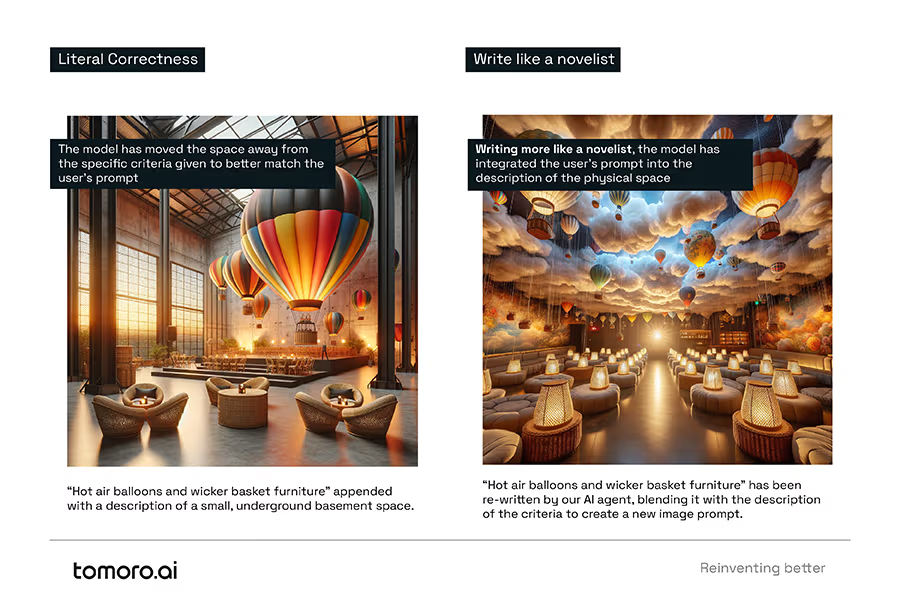

Problem 2: “That’s not what I meant!”

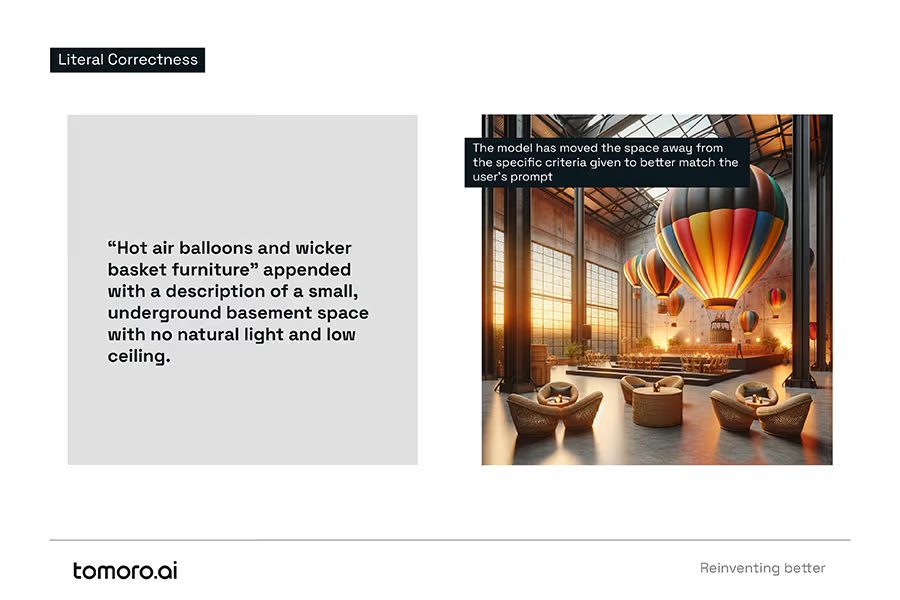

The way that AI models generate images is very literal. Based on the instructions given, it will generate images in the most congruent way possible. The issues we encountered emerged when the concepts and ideas given to the model via a user’s prompt were incongruent with the criteria and constraints we needed to apply to the resulting image.

As an example, imagine that the user asks for the room to be themed around hot air balloons, even including a real hot air balloon in the middle of the space itself. We combine the user's prompt with a description of the desired physical space (the small industrial-looking venue with concrete floors and walls, about 6 x 6 metres, with a low ceiling and no natural light) and the model makes its decisions.

Well, a hot air balloon would normally be flown outside, they’re big, and they need light and space – I’ll change the size and shape of the space itself - that makes the most sense”.

DALLE 3 (illustrative approach when attempting to resolve incongruence within prompts)

In this case, the combination of a simple user prompt and a basic description of the environment creates an opportunity for the model to apply a literal form of correctness. The image has been assembled based on the model’s search for congruence between the theme and guidance about how to render the image. However, we haven’t helped the model connect the theme and the guidance - the two are separate. As we’ve described before, generative AI models are not oracles - we can’t always expect them to read between the lines.

So, asking the model ‘not’ to include something specific increases the frequency by which it includes it. Add to that, the model searches for and renders images based on congruence, both of which are an outcome of the way these models work - they're searching for the next token based on association and proximity. The concept of ‘alcohol’ is closely associated with a party and the concept of a large, open space is much closer to a 'hot air balloon' than a small, underground basement is.

Unfortunately, these effects meant that we were often moving away from the kind of images we wanted, not towards them.

So, what can we do?

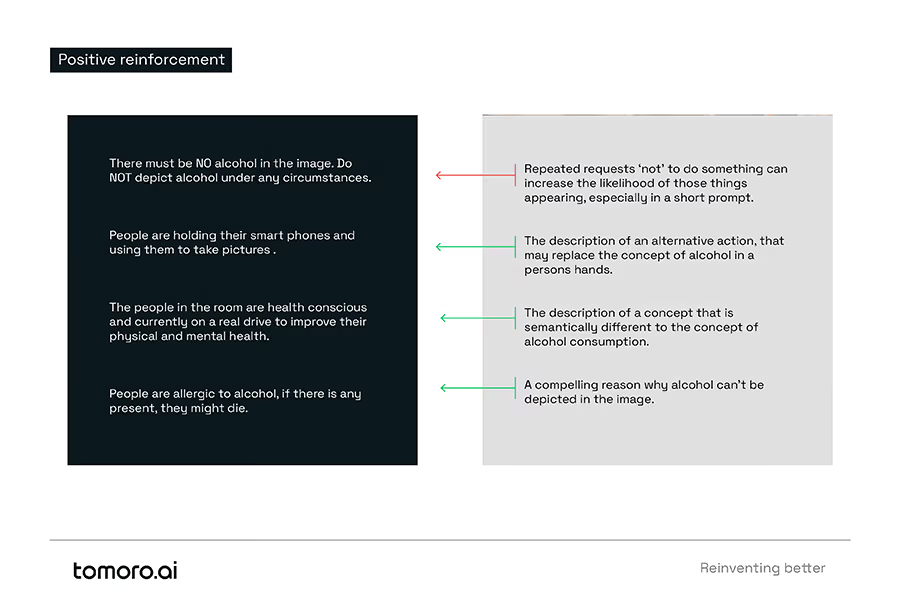

Solution 1: Positive reinforcement

Rather than over-emphasising what you don’t want in the image, or what ‘should not’ be generated, it’s far more effective to find positive, counter-balanced examples of the things you do want to appear. This can include things that are happening in the image as an alternative to the concept you wish to avoid. You can also describe things that directly oppose a concept or idea you wish to steer clear of. Equally, you can give the model a compelling reason as to why a particular object or concept shouldn’t be rendered, rather than simply saying it cannot appear.

These forms of reinforcement are based on the principle of ‘show, not tell’. Rather than simply instructing the model what not to do, we’re guiding it through more descriptive, clear intentions about the kind of image we want. We’ve found the application of ‘show, not tell’ to be particularly effective in generating images with specific criteria, especially when congruence isn’t working in your favour. Again, imagine that you’re creating an image of a party for adults, but don’t want alcohol to be rendered in the images. Unfortunately, parties for adults are fairly synonymous with the availability and presence of alcohol. In this context, we need to weave effective examples of positive reinforcement into our prompts to guide the model’s interpretation and reduce the Waluigi effect.

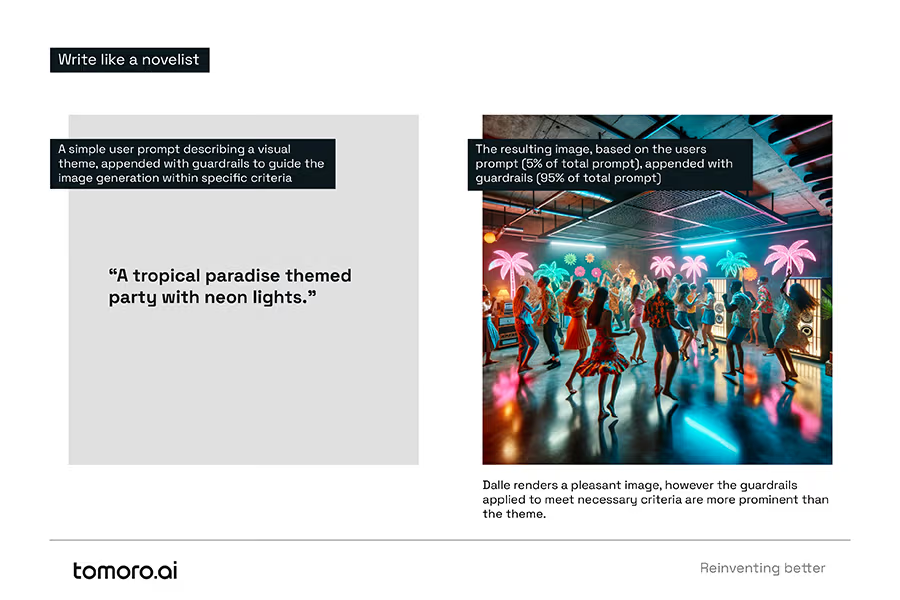

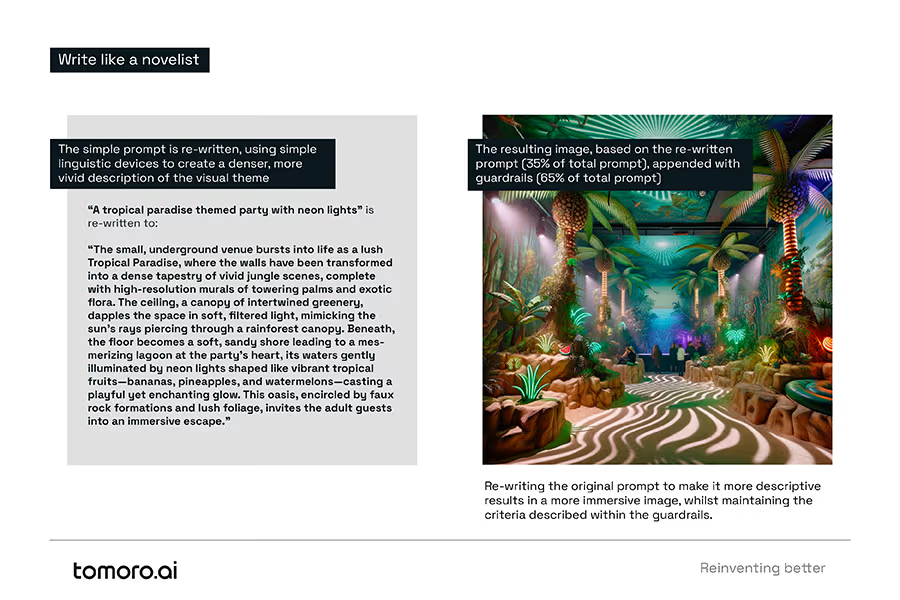

Solution 2: Write like a novelist

As well as building positive reinforcement into our prompts, we can use language in its broadest sense as a tool to generate better images. When we think about the challenges described above – challenges of generating images with specific criteria and constraints – writing a prompt in the same way a novelist might write a paragraph in a story can significantly improve the quality and consistency of images.

Good writers create vivid, immersive worlds that captivate readers and serve as the backdrop for their narratives. They’re using language to help the reader create a picture in their head – to see that version of the world in their imagination. When it comes to image generation with AI, we have a range of literary devices to trigger the model’s ‘imagination’, leading to richer, more consistent and more compelling images.

Specificity

Being specific in our descriptions can help ground the model in the physical aspects the image should convey. For example, describing the background, mid-ground and foreground of an image separately. This is particularly effective in the context of overall image composition.

Sensory detail

We can use details related to the five senses, especially sight and touch, to create a rich and immersive prompt that is more suggestive of how the image should be rendered, especially in relation to lighting.

Active verbs

Using dynamic, active verbs can make specific items, actions or concepts within the image more prominent. Describing an object as “dominating the centre of the space” helps to achieve that composition more so than describing something as being “in the middle”.

Point of view

The perspective from which the image should be generated, or ‘viewed’, greatly affects composition. Broad, sweeping descriptions can be more powerful in generating images of landscapes and open spaces, whilst closely focussing on details can help in generating images that are ‘close up'.

Metaphor and simile

Describing one thing vividly in relation to another can be extremely helpful in rendering aspects of an image like colour palettes, textures or types of lighting. For example, describing a sky as “a canvas brushed with strokes of pink and orange" or flooring as “a chestnut carpet which feels like a soft meadow underfoot”.

Summary

Just in case this isn’t clear… you can still achieve great images from simple prompts! However, when it comes to production use cases for businesses, there tend to be a significant number of constraints and criteria for a set of images. In those cases, we have found that a systematic approach to developing structured prompts and controls significantly increases quality, and safety, performance.

Using positive reinforcement and novel-like descriptive approaches reduces the likelihood of things we don’t want to appear in the image from cropping up, simply because we’re spending more time describing, in richer detail, the things that we do want. At the same time, we can reduce the degree to which the model makes literal but unhelpful decisions, simply by employing techniques writers might use and making our prompts more emotive.