This blog is primarily for:

- People who have built with large language models (LLMs) before (e.g., prompting, RAG, etc.) and want to learn how to go further and make their solutions ‘agentic’ and

- People interested in LLMs who want to skip straight to building multi-agent systems.

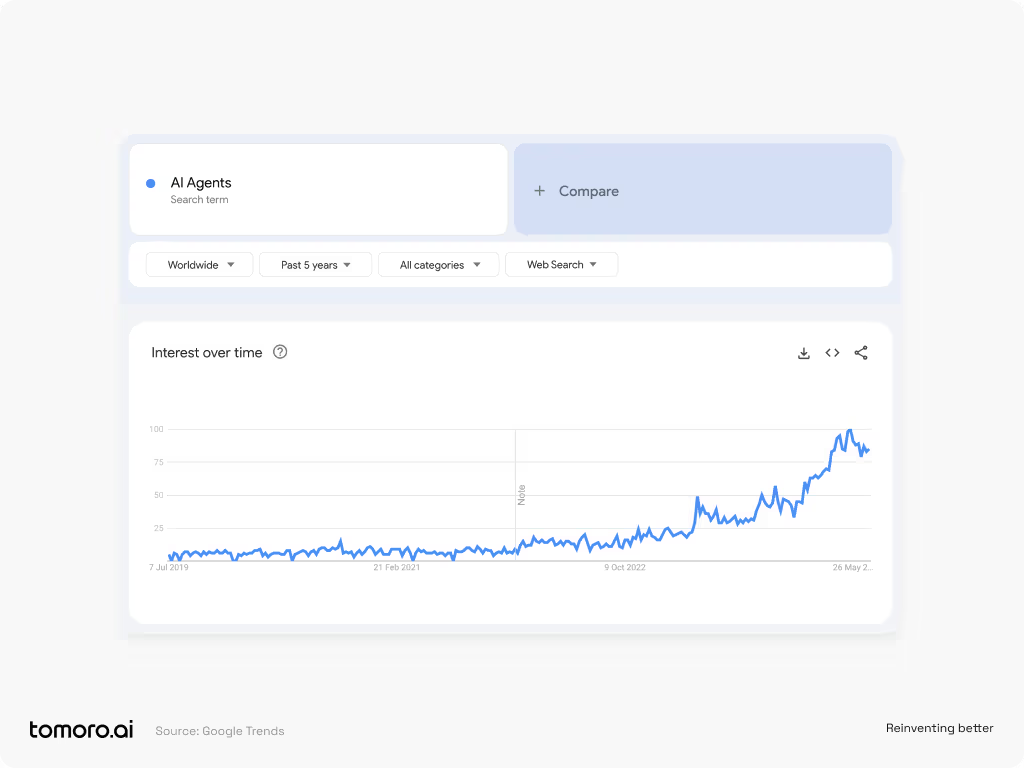

The rise of a buzzword: AI agents

AI agents are becoming all the rage. So, we thought we’d give you a brief definition of what they are, when you need more than one (i.e., multi-agent systems), and how we build them.

In this blog, we will demonstrate a simple LLM agent system using lightweight abstractions, where every prompt or instruction to each of our agents is fully inspectable.

What do we mean by “lightweight abstraction”? Simply put, it’s a minimalistic design approach for structuring and managing LLM-powered AI agents.

First, let’s define our mental model of an individual AI agent.

AI agents and multi-agent systems

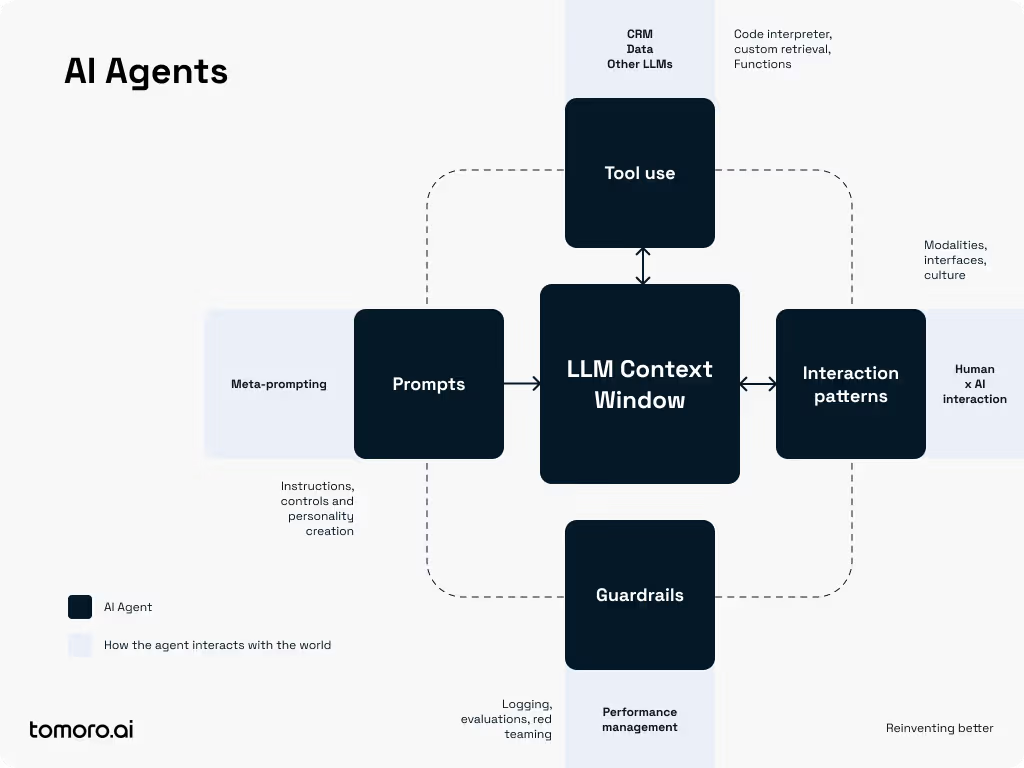

An AI agent is an application with a defined knowledge base, the capability to initiate several actions, and the intelligence to choose the appropriate one given a specific context.

It’s designed to be self-sufficient in understanding requests, gathering the right data, making decisions, and performing or initiating action.

Its power comes from being an LLM-based system that can access systems, use tools, interact with humans, and continuously improve through observation and evaluation, all while self-managing its context window to stay relevant to the overall workflow and previous interactions.
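
As a minimal sketch of this mental model (an illustration, not a production implementation), an agent can be written as a small structure holding a knowledge base, a set of tools, and a chooser that picks one tool for a given request. Every name here (`Agent`, `build_demo_agent`, the `lookup` and `escalate` tools, the sample article) is hypothetical, and a keyword rule stands in for the LLM's decision.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Tool = Callable[[str], str]  # hypothetical tool signature: request in, result out


@dataclass
class Agent:
    name: str
    knowledge: Dict[str, str]      # the agent's defined knowledge base
    tools: Dict[str, Tool]         # the actions it can initiate
    choose: Callable[[str], str]   # stand-in for the LLM: request -> tool name

    def handle(self, request: str) -> str:
        tool_name = self.choose(request)        # decide which action fits the context
        return self.tools[tool_name](request)   # perform the chosen action


def build_demo_agent() -> Agent:
    # Made-up knowledge base entry, for illustration only.
    knowledge = {"roaming": "Roaming can be enabled in account settings."}

    def lookup(request: str) -> str:
        # Return the first knowledge entry whose topic appears in the request.
        for topic, article in knowledge.items():
            if topic in request.lower():
                return article
        return "No article found."

    def escalate(request: str) -> str:
        return "Escalated to a human."

    def choose(request: str) -> str:
        # A keyword rule replaces the LLM's judgement in this sketch.
        return "escalate" if "human" in request.lower() else "lookup"

    return Agent("demo", knowledge, {"lookup": lookup, "escalate": escalate}, choose)


agent = build_demo_agent()
print(agent.handle("How do I enable roaming?"))
print(agent.handle("Let me talk to a human"))
```

In a real system the `choose` step would be an LLM call whose prompt lists the available tools, which keeps the decision inspectable.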

Think of each LLM context window as analogous to computer RAM - it’s the short-term memory of the Agent. As we know from running applications on computers, RAM can easily fill up as we add more tools and tasks, causing systems to run less effectively.

Managing short-term memory is crucial because, despite advancements, LLM recall and quality often degrade as context windows lengthen (and, just as importantly, cost increases!). Our experience building production-grade LLM agent systems is echoed by research on process supervision (e.g. Let's Verify Step by Step, Selection-Inference: Exploiting Large Language Models for...) that suggests ‘fresh eyes’ and task decomposition are essential.
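
One simple way to picture short-term memory management is trimming the conversation history to a fixed budget. The sketch below is only one possible approach, under loud assumptions: `trim_history` and `approx_tokens` are hypothetical helpers, and tokens are approximated by whitespace-separated words rather than by a real tokenizer. It keeps the first (system) message and as many of the most recent messages as fit.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def approx_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word (a rough proxy only).
    return len(text.split())


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep the first (system) message plus the newest messages that fit the budget."""
    if not messages:
        return []
    system, rest = messages[0], messages[1:]
    used = approx_tokens(system["content"])
    kept: List[Message] = []
    for msg in reversed(rest):           # walk from newest to oldest
        cost = approx_tokens(msg["content"])
        if used + cost > budget:
            break                        # older messages no longer fit
        kept.append(msg)
        used += cost
    return [system] + kept[::-1]         # restore chronological order


history = [
    {"role": "system", "content": "You are helpful"},
    {"role": "user", "content": "a b c d"},
    {"role": "assistant", "content": "e f"},
    {"role": "user", "content": "g h i"},
]
trimmed = trim_history(history, budget=8)
print([m["content"] for m in trimmed])
```

Dropping old turns is the bluntest option; summarising them or moving them to another agent's context are alternatives with the same goal.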

Another way of thinking about task decomposition is spreading workflows across multiple context windows; we call this a multi-agent design. These multi-agent systems tend to outperform single-agent systems, boosting fault tolerance and quality as well as human interpretability - mitigating the AI ‘black-box’ issue.

How to build these multi-agent systems?

We’ve written about meta-prompting before in Prompt Engineering is dead*, and it is a core concept in building successful multi-agent systems. Emerging research (e.g. Meta-Prompting: Enhancing Language Models with Task-Agnostic Scaffolding) shows how meta-prompting can transform a single language model into a system orchestrator.

Namely, it allows the multi-agent system to break down tasks into smaller subtasks handled by expert instances of the same LLM. The 'fresh eyes' concept ensures each expert works with tailored instructions, offering accurate, diverse perspectives. Meta-prompting integrates these outputs seamlessly, improving performance.
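
The orchestration loop described above can be sketched roughly as follows. This is an illustrative simplification, not the procedure from the cited paper: `orchestrate`, the prompt wording, and `fake_llm` (a canned stand-in so the example runs without a model) are all assumptions. The point it shows is that each expert call receives only its own subtask, so it starts with 'fresh eyes'.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def orchestrate(task: str, llm: LLM) -> str:
    # 1. The orchestrator asks the model to decompose the task.
    plan = llm(f"Break this task into subtasks, one per line:\n{task}")
    subtasks: List[str] = [line.strip() for line in plan.splitlines() if line.strip()]

    # 2. Each expert instance gets a fresh prompt containing only its subtask.
    answers = [
        llm(f"You are an expert. Complete only this subtask:\n{sub}")
        for sub in subtasks
    ]

    # 3. The orchestrator integrates the expert outputs into one answer.
    merged = "\n".join(f"- {sub}: {ans}" for sub, ans in zip(subtasks, answers))
    return llm(f"Combine these expert results into one answer for the task '{task}':\n{merged}")


def fake_llm(prompt: str) -> str:
    """Canned responses so the sketch runs offline; a real system would call a model."""
    if prompt.startswith("Break this task"):
        return "research pricing\ndraft summary"
    if prompt.startswith("You are an expert"):
        return "done: " + prompt.splitlines()[-1]
    return "FINAL\n" + prompt.split("\n", 1)[1]


print(orchestrate("compare two mobile plans", fake_llm))
```

Because every prompt is built in plain sight, each instruction sent to each expert stays fully inspectable.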

Experiments in the meta-prompting research show that meta-prompting, especially with tools like a Python interpreter, outshines traditional methods. A paper from Google DeepMind researchers (Self-Discover: Large Language Models Self-Compose Reasoning Structures) demonstrates that LLMs that provide a solution approach to a task before completing that task give higher-quality and more interpretable outputs, making it a win for both safety and capability.

Our conclusion is that a single AI agent isn't effective for multiple tasks, especially when tasks become more complex. We need a team or swarm of agents to achieve optimal performance.

Co-ordinating multi-agent systems

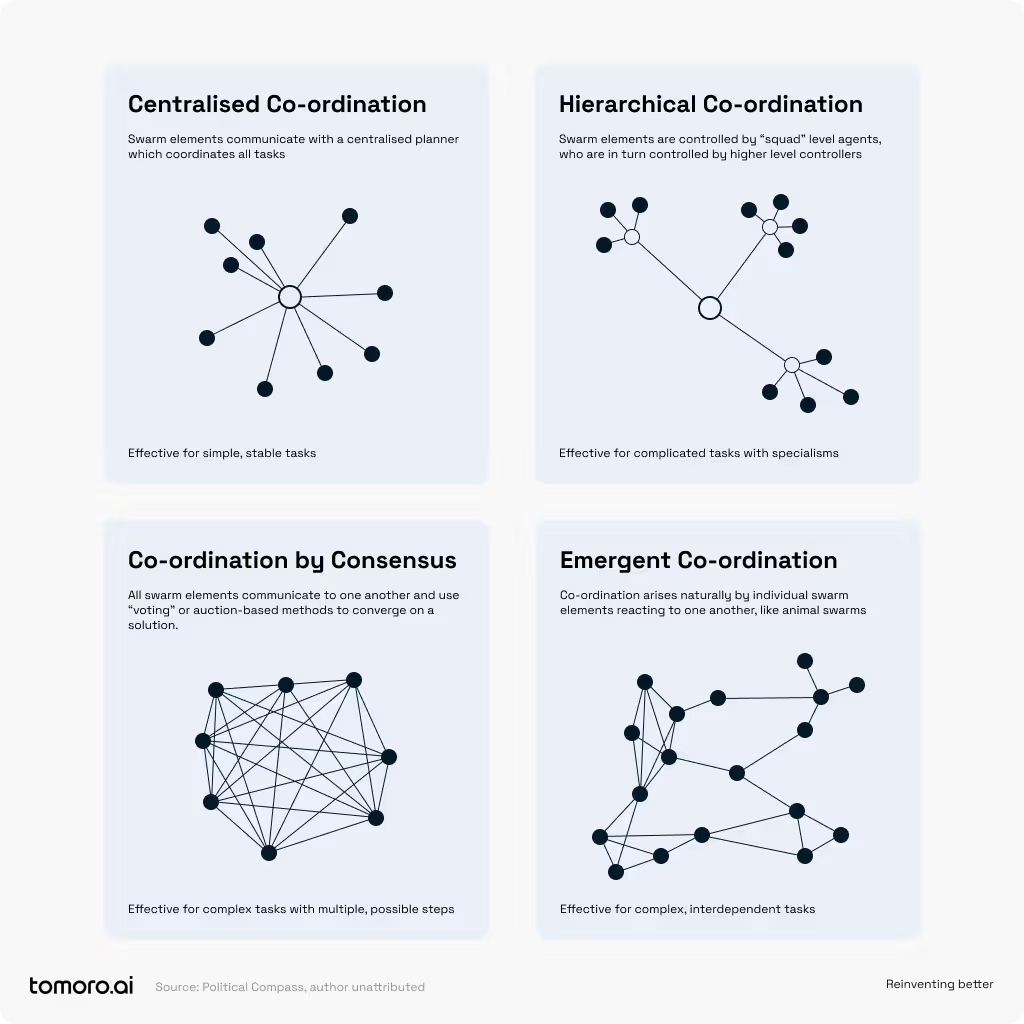

Here is an overview of the different ways agents might be coordinated to solve different types of problems:

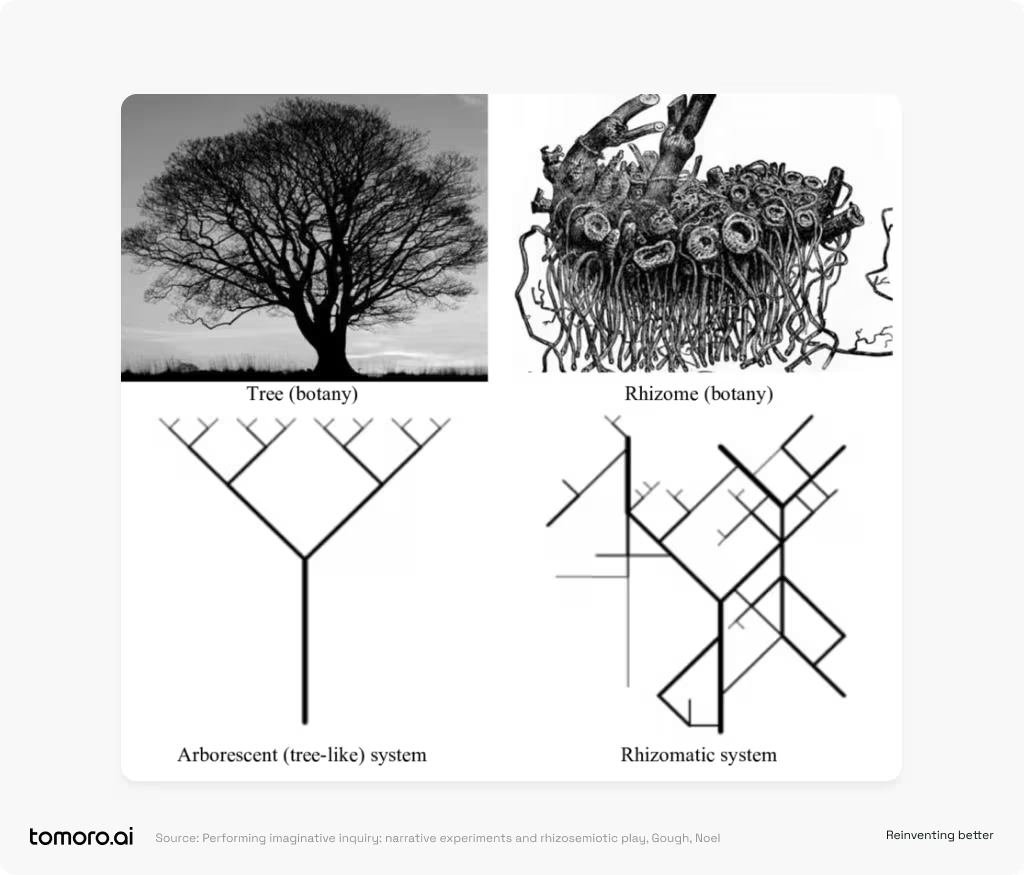

Fixed, “if-else” systems are not the best way to coordinate LLMs, as they do not play to the strengths of non-deterministic, flexible systems with reasoning capabilities. The multi-agent swarm pattern we tend to use with our clients is instead based on the principle of emergent coordination, where, just like the rhizome, each agent is connected to every other agent. In practice, the framework contains some elements of both emergent and hierarchical coordination (e.g., one agent always greets the end user first and helps route user queries).

This self-organisation makes systems much more fault-tolerant and much less likely to get trapped in local minima (i.e. trying the same sequence of events over and over again), while keeping the flexibility and adaptability of LLM-based systems as opposed to rule-based systems.
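
To make the hand-off idea concrete, here is a small sketch of a swarm loop in which any agent may hand off to any other, with one fixed entry agent that greets the user. Everything in it is hypothetical: `run_swarm`, the agent names, the canned replies, and the keyword rules that stand in for LLM routing decisions. The `max_hops` guard is an added assumption to stop agents passing a query back and forth forever.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]            # ("reply", text) or ("handoff", agent_name)
AgentFn = Callable[[str], Step]   # stand-in for an LLM-backed agent


def run_swarm(agents: Dict[str, AgentFn], entry: str, query: str,
              max_hops: int = 5) -> Tuple[str, List[str]]:
    """Route a query between agents until one replies; return the reply and the trace."""
    current = entry
    trace: List[str] = []
    for _ in range(max_hops):
        trace.append(current)
        kind, value = agents[current](query)
        if kind == "reply":
            return value, trace
        if value not in agents:
            raise KeyError(f"unknown agent: {value}")
        current = value               # any agent may hand off to any other
    raise RuntimeError("hand-off limit reached without a reply")


def greeter(query: str) -> Step:
    # The entry agent only routes; keyword matching replaces LLM reasoning here.
    return ("handoff", "support") if "refund" in query.lower() else ("handoff", "expert")


def expert(query: str) -> Step:
    if "refund" in query.lower():     # out of remit, so hand off sideways
        return ("handoff", "support")
    return ("reply", "Expert: here is the relevant article.")


def support(query: str) -> Step:
    return ("reply", "Support: please verify your identity first.")


AGENTS: Dict[str, AgentFn] = {"greeter": greeter, "expert": expert, "support": support}
print(run_swarm(AGENTS, "greeter", "I want a refund"))
```

The returned trace records which agents touched the query, which is one simple way to keep the routing explainable.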

An example: a multi-agent system for customer services

The context

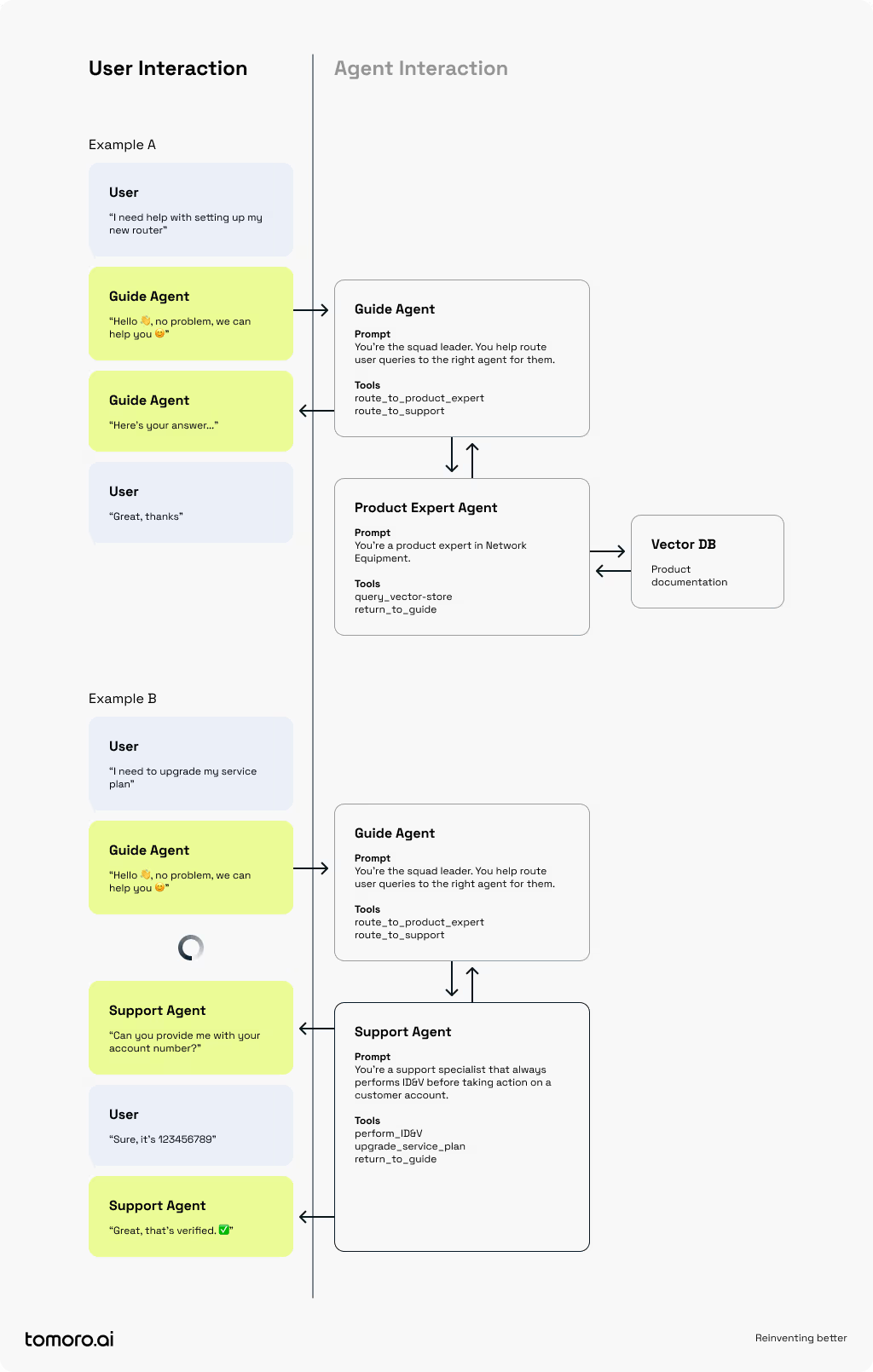

Below is an example of how a multi-agent system operates in customer servicing in the telecommunications industry. Simply put, the agents collaborate to help users resolve specific questions with hand-offs and controls between specific agents in the system.

Imagine a telecoms company where a user needs detailed assistance with a particular service or issue…

The components

As you can see, multiple agents are involved in this process, each with a discrete job and specific tools and knowledge at their disposal:

- Guide Assistant: The gatekeeper, handling initial user interactions and routing tasks to other agents.

- Product Experts: Specialists in their products, fetching and summarising the most relevant knowledge base articles for user queries.

- Support Specialist: The go-to for sensitive issues like service upgrades, refunds, and account recovery, always verifying identity first.

- Vector store: Holds the documentation relevant to each Product Expert. A similarity search over the store finds the most relevant documents for user queries.
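
To illustrate the similarity search a Product Expert relies on, the sketch below builds a toy in-memory store. It is a stand-in only: `ToyVectorStore` is a hypothetical class, the sample documents are made up, and bag-of-words counts with cosine similarity replace the learned embeddings a real vector store would use.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Assumption: word counts stand in for a real embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    def __init__(self, docs: List[str]) -> None:
        # Pre-compute one vector per document.
        self.docs: List[Tuple[str, Counter]] = [(d, embed(d)) for d in docs]

    def search(self, query: str, k: int = 1) -> List[str]:
        """Return the k documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self.docs, key=lambda pair: cosine(q, pair[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


store = ToyVectorStore([
    "how to reset your router",
    "how to upgrade your mobile plan",
    "roaming charges abroad explained",
])
print(store.search("reset router"))
```

A Product Expert would then summarise the retrieved documents for the user instead of returning them verbatim.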

Why (and when) multi-agents are better than single-agent systems

Of course, this could also be achieved (at a proof of concept scale) within a single context window, using the concept of LLM role play. However, the benefits of a multi-agent approach are critical to confidence in production:

- Explainability: In a multi-agent system, each agent's role and actions are clear and traceable, making it easier to explain how a task was completed.

- Modularity: Each agent can be updated or replaced independently without affecting the entire system. This makes the system more flexible and easier to maintain.

- Fault Tolerance: If one agent fails or makes an error, it does not compromise the entire system. Other agents can correct the error or take over the task.

- Cost: Multi-agent systems can scale using specialised, smaller-context models that are cost-effective compared to a single powerful LLM, which might cost 10-100X more than necessary for simpler tasks.

Single-agent-based systems have their place, and they are often a great way to get started. However, for production-strength systems, especially in mass-scale consumer-facing scenarios, a multi-agent model is the future of AI system architecture.