This will likely come as no surprise, but as the CTO of an AI startup, I write a lot of code!

In this article, I aim to relate my experience of using generative AI daily for coding over the last six months (late 2023 to early 2024).

In that time I’ve personally developed a series of projects, mostly in Python, SQL, HTML and JavaScript, with some light CSS. The LLMs I’ve predominantly used are those behind GitHub Copilot and ChatGPT (GPT-4-turbo and GPT-4o).

So, what are the key lessons learnt?

It is mostly very good

All the hate claiming it isn't very good is, in my experience, just not true. Yes, it can be a bit dumb sometimes, just like humans.

By my rough estimate, it provides the correct answer on the first attempt about 90% of the time; 8% of the time it gets there after some clarification, and for the remaining 2% you need to take control.

I've had to adjust my perspective, viewing it less as a 'copilot' and more as an 'apprentice' who can hand me the right tool when I accurately specify what I need.

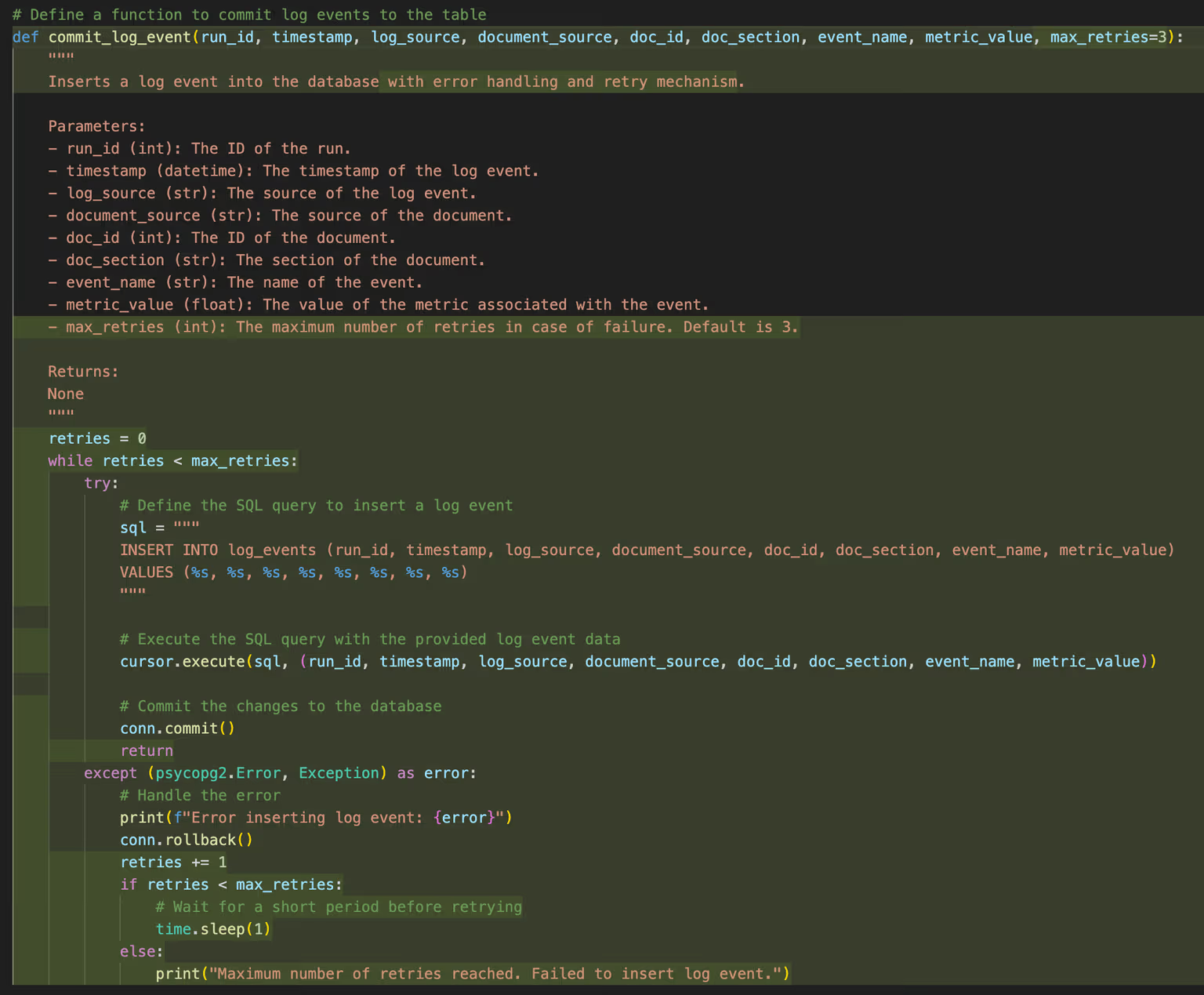

For example, when I wanted to add retry-on-error logic to a function, it did a reasonable job.
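The code Copilot generated isn't reproduced in the text, so the following is not that output. It is a minimal sketch of retrying a function when it raises, assuming a decorator-based design with exponential backoff; the `retry` name and its `exceptions`, `attempts` and `base_delay` parameters are hypothetical.

```python
import functools
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    exceptions: Tuple[Type[BaseException], ...] = (Exception,),
    attempts: int = 3,
    base_delay: float = 0.5,
) -> Callable[[Callable[..., T]], Callable[..., T]]:
    """Hypothetical decorator: retry the wrapped function when it raises one of `exceptions`.

    Between tries it sleeps base_delay * 2**attempt seconds plus a little random
    jitter, and re-raises the last exception once `attempts` tries are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(func)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(attempts):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == attempts - 1:
                        raise  # out of retries: surface the original error
                    # Exponential backoff with jitter so callers don't retry in lockstep.
                    time.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
            raise AssertionError("unreachable: attempts >= 1 is checked above")

        return wrapper

    return decorator
```

Only the listed exception types are retried; anything else propagates immediately, which keeps genuine bugs from being hidden behind retries.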

Keep your (human) hands on the steering wheel at all times

Your productivity depends a LOT on you knowing what questions to ask.

- If you don't know what a stack is but try to solve a problem that needs one, hilarity will ensue.

- Coding assistants will poorly reverse-engineer your request and try to solve the problem anyway, leading to buggy, poorly written code.

- Assistants appear to love abstracting stuff. The number of times I've seen one create a function just to print status messages is crazy!

- Do not use an assistant to manage your abstractions; that is your job.

For example, here is a function generated by Copilot:

```python
def return_connection(conn):
    # One-line wrapper around the pool's putconn(), with a single call site
    connection_pool.putconn(conn)
```

The code had only one place where this function would be called. I had to point out that it was unnecessary and that we should use a context manager to manage the lifecycle of the connections.
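A minimal sketch of that context-manager approach, assuming a pool that exposes `getconn()` and `putconn()` the way psycopg2's `ThreadedConnectionPool` does; the `pooled_connection` helper and `ConnectionPool` protocol names are hypothetical:

```python
from contextlib import contextmanager
from typing import Any, Iterator, Protocol


class ConnectionPool(Protocol):
    """Anything exposing getconn()/putconn(), e.g. psycopg2.pool.ThreadedConnectionPool."""

    def getconn(self) -> Any: ...

    def putconn(self, conn: Any) -> None: ...


@contextmanager
def pooled_connection(pool: ConnectionPool) -> Iterator[Any]:
    """Borrow a connection from `pool` and always return it, even if the block raises."""
    conn = pool.getconn()
    try:
        yield conn
    finally:
        # Returning the connection here makes a one-off return_connection() helper unnecessary.
        pool.putconn(conn)
```

Callers write `with pooled_connection(pool) as conn:` and the connection goes back to the pool however the block exits. With psycopg2, a nested `with conn:` additionally commits on success or rolls back on an exception.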

It's an accelerator pedal, not a teleportation device

If you don’t know the topic, you will not know the right questions to ask to get your AI copilot to help you. Scrambling around in the dark means the code it develops will almost always be poor.

When you do need to work on something new (for example, a new library, or an unfamiliar concept) AI can help, but you need to take a more nuanced approach:

- Switch into "knowledge mode" and ask ChatGPT to explain the domain until you understand it.

- When slinging code with code assistants, you should already have a good idea of what you are going to do. Copilot will only generate code that is as good as your instructions.

- The more unfamiliar you are with the domain, the poorer your instructions will be, and the worse the resulting solutions.

Generative AI is excellent for boring work like test case generation, but only if you are already a good test engineer and know what you want the tests to cover. Simply saying "generate some test cases" will, in most cases, only produce average-quality tests.

To demonstrate some of this, here is a request I made to Copilot:

> In my multithreaded python application, I need to commit events to a postgresql table at various intervals
>
> These events happen quite frequently and from all over the application and can be from various threads.
>
> What is the best way to create a connection to the database and commit log events to the table?
>
> My log table looks like this:
>
> <CREATE TABLE STATEMENT>

This resulted in Copilot offering me standard create and insert functions to send messages to my table in Postgres. In most cases this would be fine: it did about 95% of what I asked, and the functions opened and closed connections in a thread-safe manner.

However, the implementation was suboptimal for a production application. There was no connection pooling, so each log message opened a new connection to the database, and the code didn’t use context managers to manage the connection and cursor lifecycles.

I had to prompt Copilot to make these (and further) revisions to the initial code. Had I not known the “right thing to ask for”, this could easily have led to ‘functional but less than optimal’ code in my application.

The lesson for me is that I should already know what I want; then I can treat Copilot like a junior engineer on my team and have it write functions for me. If I expect it to direct itself, it will very likely produce sub-optimal code.
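For illustration, here is a minimal sketch of the shape those revisions point toward: one shared pool, with context managers handling the transaction and cursor for each event. It assumes psycopg2 semantics and a thread-safe pool with `getconn()`/`putconn()` such as `psycopg2.pool.ThreadedConnectionPool`. The `EventLogger` class and the `event_log` table and its columns are hypothetical placeholders, since the real table definition isn't shown.

```python
from typing import Any


class EventLogger:
    """Hypothetical logger: commits events from any thread via a shared connection pool.

    `pool` is assumed to offer thread-safe getconn()/putconn(), as
    psycopg2.pool.ThreadedConnectionPool does. The table and column names
    below are placeholders for the real log table.
    """

    INSERT_SQL = "INSERT INTO event_log (event_type, message) VALUES (%s, %s)"

    def __init__(self, pool: Any) -> None:
        self._pool = pool

    def log(self, event_type: str, message: str) -> None:
        # Borrow a pooled connection instead of opening a new one per event.
        conn = self._pool.getconn()
        try:
            # psycopg2 semantics: `with conn` commits on success and rolls back on
            # an exception (it does not close the connection); `with conn.cursor()`
            # closes the cursor when the block ends.
            with conn:
                with conn.cursor() as cur:
                    cur.execute(self.INSERT_SQL, (event_type, message))
        finally:
            # Always hand the connection back, even if the insert failed.
            self._pool.putconn(conn)
```

In an application, one instance could be built as `EventLogger(ThreadedConnectionPool(1, 10, dsn=...))` and shared across threads. Note that psycopg2's pool raises `PoolError` rather than blocking once all `maxconn` connections are checked out, so the pool size should match the expected concurrency.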

Summary

Generative AI for coding should be viewed as a power tool, not a crutch. It is no replacement for experience and expertise, but if used properly, it will help you learn more nuance more quickly and ultimately become more productive.

On the other hand, if you use it to mask your lack of skills in a specific area, it will show, often hilariously. The dream of writing <insert app idea that'll make millions> without knowing anything about writing apps is not something generative AI is attempting to provide.