TL;DR

- It is important to carefully consider how and where decisions are made in your agentic system.

- Deferring more decisions to an LLM allows the system to potentially generalise to more tasks, but at the potential cost of speed, reliability and robustness.

- Where possible, move as much of the decision-making process as you can out of the LLM and into explicit software code. This is especially true for high-risk and/or production workflows.

Intro

When designing an LLM-based agentic system, one of the most important choices you need to make is how much of the decision-making is encapsulated in an LLM versus explicit software.

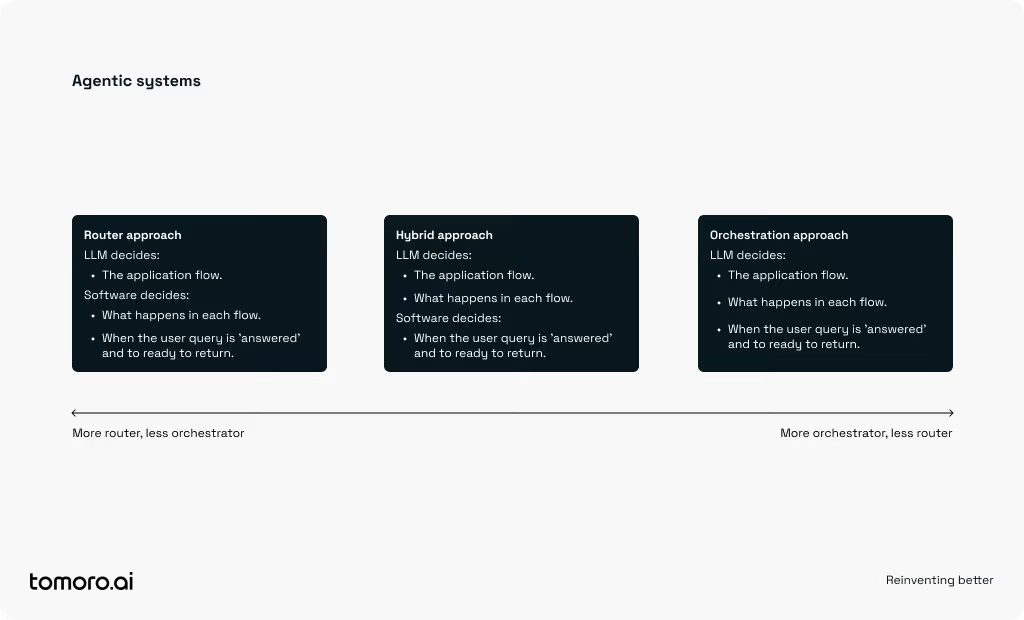

To help understand, we can think of this choice as a spectrum between the following approaches:

- Router-based architectures explicitly define the order and logic in code, ensuring testability, predictability, and robustness for narrow-domain tasks (these are also called ‘workflow agents’).

- Orchestrator agents rely on large language models (LLMs) to dynamically decide task flows using natural-language prompts, ideal for open-ended interactions where predefined logic is insufficient/impossible.

For high-risk, production workflows, we typically recommend using more router-based features, reserving orchestrators for applications demanding flexible, general-purpose conversations.

Router vs. Orchestrator: Understanding the difference

Router-Based Architectures

Router agentic systems:

- Explicitly define the decision-making flow through code/software and use an LLM to determine which route this software takes.

- Are closer to traditional software systems in the sense they have clear and predictable pathways that result in more consistent outcomes.

- Are ideal for tasks that can be strictly defined.

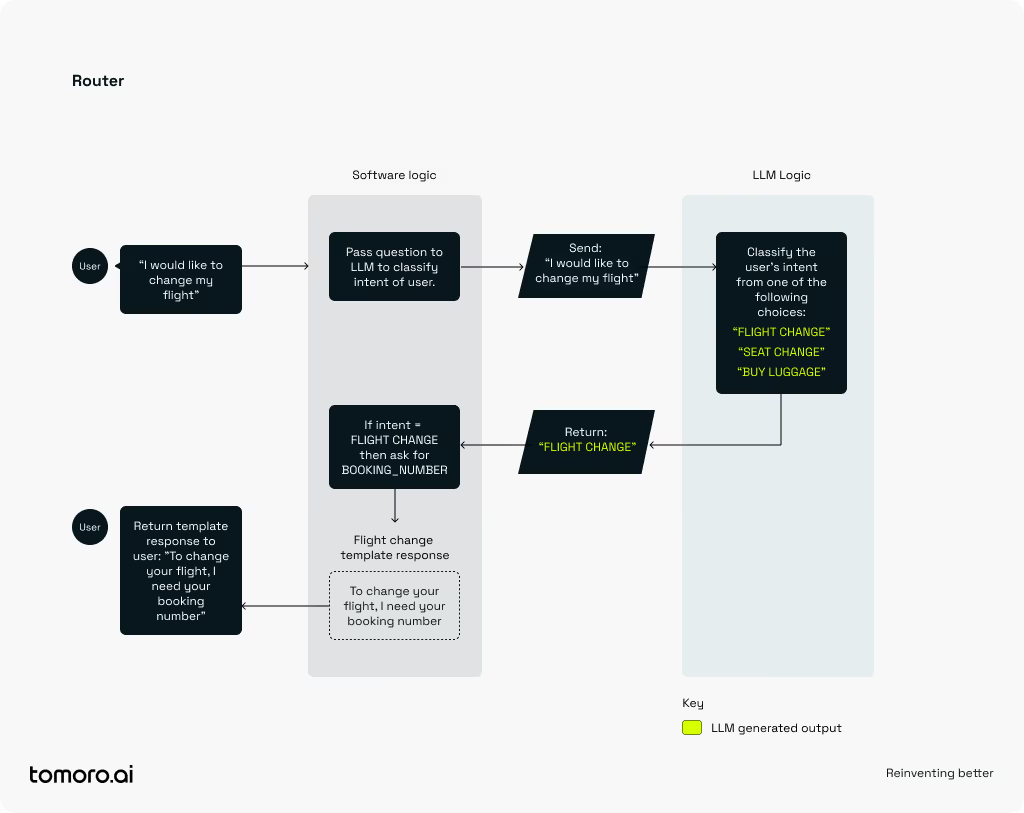

The following is a toy example of an Airline Chatbot Booking agent that uses the ‘Router approach’. We can see that while the LLM helps us to classify the intent of the question from three possible choices, it is ultimately our software that maps this intent to a template text response. Because the LLM is highly constrained, the user will experience more consistent behaviour.
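A minimal sketch of this router pattern is shown here. The three intent labels, the template texts and the `keyword_classifier` function are all hypothetical; the classifier is a keyword stand-in for a real LLM call so that the example runs offline.

```python
from typing import Callable

# Hypothetical intents and template responses for the toy airline chatbot.
RESPONSES = {
    "book_flight": "Sure, I can help you book a flight. Where would you like to go?",
    "change_flight": "I can help you change your flight. What is your booking reference?",
    "cancel_flight": "I can help you cancel your flight. What is your booking reference?",
}
FALLBACK = "Sorry, I can only help with booking, changing or cancelling flights."


def keyword_classifier(message: str) -> str:
    """Stand-in for an LLM call that returns a single intent label (assumption)."""
    text = message.lower()
    if "cancel" in text:
        return "cancel_flight"
    if "change" in text or "reschedule" in text:
        return "change_flight"
    if "book" in text:
        return "book_flight"
    return "unknown"


def route(message: str, classify: Callable[[str], str] = keyword_classifier) -> str:
    """The classifier only picks a label; software maps the label to the response."""
    intent = classify(message)
    # Any label outside the known set, including unexpected model output, falls back.
    return RESPONSES.get(intent, FALLBACK)


print(route("I need to change my flight to Friday"))
```

In a real system, `classify` would wrap a model call that is prompted to return exactly one of the allowed labels. Everything after that call stays in ordinary, testable code.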

Orchestrator Architectures

In contrast to the router system, orchestrator agentic systems:

- Define logic flows through natural-language prompts instead of software. Note: in comparison to a programming language, natural language is inherently ambiguous and flexible (both a positive and negative characteristic as we’ll discuss later). We think of this as ‘intention rather than instruction’.

- Can provide multiple processing options, with the LLM determining execution order and methodology.

- Can dynamically create novel logic pathways that are challenging to define explicitly in software.

- Can produce inconsistent outputs because of this ambiguity, but when they work, the result can feel ‘magical’.

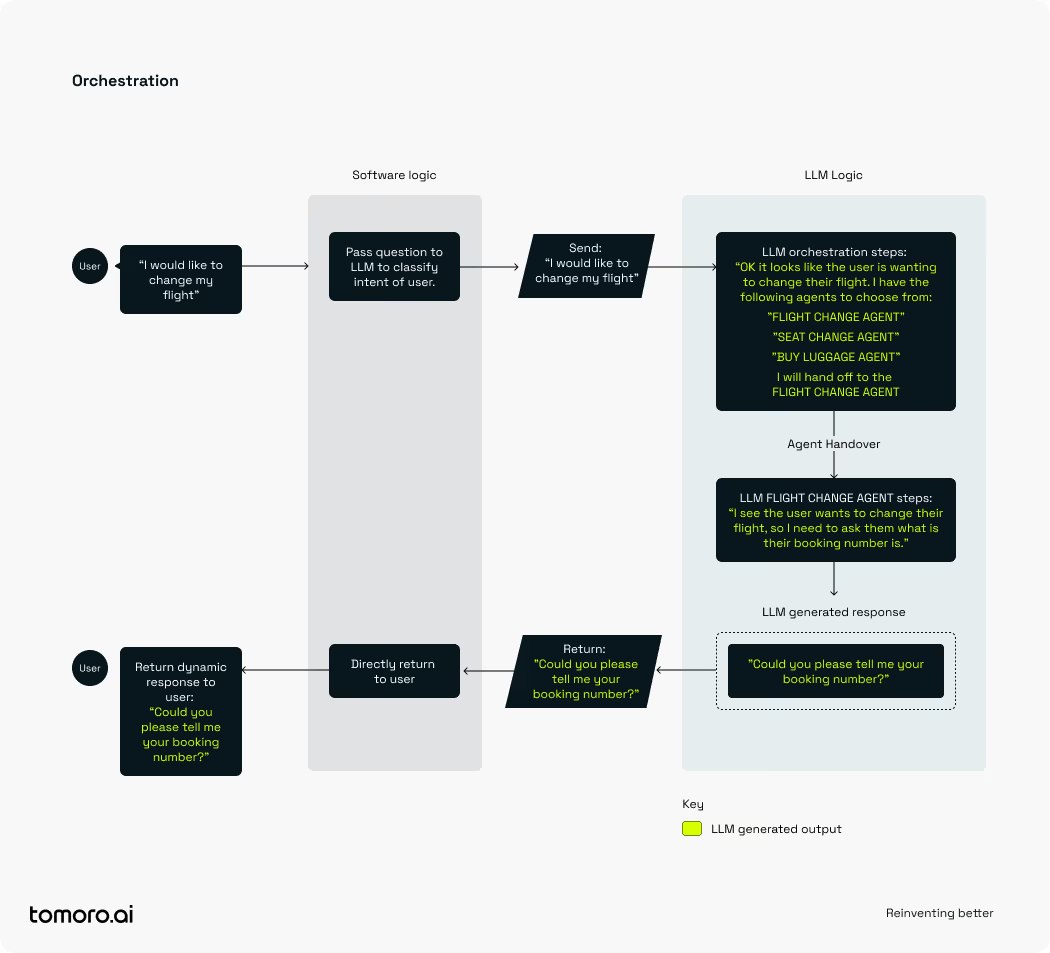

The following example applies an orchestrator approach to the same toy airline problem. Instead of letting software decide what response is appropriate, decision-making is delegated to the LLM layer. Here we have a multi-agent system where a ‘head’ orchestrator agent triages the user query and hands off to an agent specifically designed for changing flights, which ultimately provides the response to the user.

Under this example, the LLM layer is playing the role of classifier, router and response writer. In the Router example, it was just playing the role of classifier (with software handling the rest).
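The hand-off flow described above could be sketched roughly as follows. This is plain Python rather than any particular framework's API: `Agent`, `run` and `scripted_llm` are hypothetical names, and `scripted_llm` is a scripted stand-in for a real model so that the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    instructions: str  # natural-language prompt: 'intention rather than instruction'
    handoffs: list[str] = field(default_factory=list)


# An LLM decision is either ("handoff", agent_name) or ("respond", text).
Decision = tuple[str, str]
LLM = Callable[[Agent, str], Decision]


def scripted_llm(agent: Agent, message: str) -> Decision:
    """Stand-in for a real model call (assumption); a real LLM would read the instructions."""
    if agent.name == "triage":
        if "change" in message.lower() and "change_flight" in agent.handoffs:
            return ("handoff", "change_flight")
        return ("respond", "Could you tell me more about what you need?")
    return ("respond", f"[{agent.name}] I have drafted a reply to: {message}")


def run(agents: dict[str, Agent], start: str, message: str, llm: LLM, max_turns: int = 5) -> str:
    """The LLM, not the software, decides who handles the query and what is said."""
    current = agents[start]
    for _ in range(max_turns):
        action, value = llm(current, message)
        if action == "respond":
            return value
        if action == "handoff" and value in current.handoffs and value in agents:
            current = agents[value]
            continue
        raise ValueError(f"Unsupported decision from LLM: {action!r}, {value!r}")
    raise RuntimeError("No final response within max_turns")


AGENTS = {
    "triage": Agent("triage", "Work out what the customer needs and hand off.", ["change_flight"]),
    "change_flight": Agent("change_flight", "Help the customer change an existing flight."),
}

print(run(AGENTS, "triage", "I want to change my flight", scripted_llm))
```

Note how little of the behaviour is visible in the code itself. The software only enforces which hand-offs are allowed and how many turns may be taken; the rest depends on what the model returns.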

Strengths and Challenges of Router Architectures

Where possible, we recommend using router-based architectures because they offer the following advantages:

- Speed and efficiency: Local computations offer superior speed compared to external API-dependent orchestrators. It’s also much cheaper to process your ‘IF/ELSE’ logic in Python than to pay an LLM provider to run it through a 400-billion-parameter model.

- Testability and predictability: Significantly easier to debug, test, and maintain through established software practices.

- Transparency and reliability: Less variation in behaviour simplifies troubleshooting. A greater percentage of the application flow is also expressed in transparent, version-controlled software, as opposed to the opaque, uninterpretable weights of an LLM.
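To illustrate the testability point, here is a small sketch of how routing logic can be unit tested without calling a model at all. The `TEMPLATES` table, the `respond` function and the test names are hypothetical; the classifier is injected so that tests can replace it with a stub.

```python
from typing import Callable

# Hypothetical routing table: intent label -> template response.
TEMPLATES = {
    "change_flight": "Please share your booking reference so we can change your flight.",
    "cancel_flight": "Please share your booking reference so we can cancel your flight.",
}
FALLBACK = "Sorry, I did not understand that request."


def respond(message: str, classify: Callable[[str], str]) -> str:
    """Routing logic under test; the classifier is injected so tests can stub it."""
    return TEMPLATES.get(classify(message), FALLBACK)


def test_known_intents_map_to_their_templates() -> None:
    for intent, template in TEMPLATES.items():
        # Bind intent as a default argument so each stub returns its own label.
        assert respond("any text", lambda _msg, label=intent: label) == template


def test_unexpected_label_falls_back() -> None:
    # Simulates the model returning something outside the allowed set.
    assert respond("any text", lambda _msg: "book_a_hotel") == FALLBACK


if __name__ == "__main__":
    test_known_intents_map_to_their_templates()
    test_unexpected_label_falls_back()
    print("all routing tests passed")
```

The classifier itself still needs its own evaluation against labelled examples, but every branch downstream of it can be covered with ordinary, deterministic tests.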

The downsides of router approaches are that they can be rigid and inflexible, and can struggle with more open-ended problems. A chatbot that always replies with the exact same responses might be considered boring or stagnant by its users.

Strengths and Challenges of Orchestrator Architectures

Orchestrator designs have powerful capabilities:

- Planning: They can dynamically plan responses.

- Tool Selection/Agent Hand-off: Select appropriate tools or delegate tasks to agents.

- Iterative Combination of Outputs: Iterate and recombine outputs creatively.

- Determining Completion: Determine when enough information has been collected to finalise a response.

Using frameworks like Pydantic-AI or OpenAI’s Agents SDK makes orchestration straightforward and fast to implement. This makes orchestrators well suited to demos and proofs of concept.

The downsides of this approach are:

- We have no guarantees that the LLM planning steps and subsequent actions will be correct/appropriate. The router system has the same issue, but given it is more constrained, its behaviour is more predictable.

- For simple, well-defined tasks, it is unlikely we need the full capability of a multi-agent system. For example, in our Airline Agent example, there are probably only a limited number of things that someone interacting with an airline support system actually wants to do.

- Because more logic is contained in the LLM, the system is much more susceptible to jailbreaks and exploitation by bad actors.

- It abstracts decision-making away into the LLM, and therefore makes it harder to understand your system (although monitoring tools like Langfuse or Braintrust might partially help with this).

Our heuristics in agentic system design

Reader note: while model capability is changing quickly, the heuristics below are unlikely to change in the near future.

Understand the decisions required in your application

Determine the scope of your problem.

- Can you easily define your desired decision logic in a diagram?

- Are you intolerant of failure or unexpected behaviour in your application?

Answering ‘yes’ to either of the above suggests router features would be better.

Router first, then hybrid approaches

Where possible, we recommend using router approaches for as long as the problem allows. As a general principle, if a part of your system can be expressed in code, then express it in code (i.e. don’t use LLMs where they are not required).

Where the limits of these are reached, some of the open-ended orchestrator advantages can be replicated in a constrained manner. For example:

- Tool Selection/Agent Hand-off: Implemented easily through conditional branching or LLM classifiers.

- Determining Completion: Straightforward LLM classifiers can check for response completeness before returning it to the user.
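The two points above can be sketched as a bounded loop in which LLM classifiers only choose from an allowlist of tools and answer a yes/no completeness question. All names here (`TOOLS`, `answer`, the stub classifiers and the canned tool outputs) are hypothetical, and the stubs stand in for real model calls.

```python
from typing import Callable

Classifier = Callable[[str], str]  # stand-in type for an LLM classifier call

# Allowlist of tools; the canned outputs are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_booking": lambda query: "Booking ABC123: LHR to JFK on 1 June.",
    "list_alternatives": lambda query: "Alternative flights: 2 June 09:00, 2 June 15:00.",
}
NO_ANSWER = "Sorry, I could not find that information."


def answer(query: str, pick_tool: Classifier, is_complete: Classifier, max_steps: int = 3) -> str:
    """Software owns the loop; classifiers only pick a tool name or say yes/no."""
    gathered: list[str] = []
    for _ in range(max_steps):
        tool_name = pick_tool(query + " | " + " ".join(gathered))
        tool = TOOLS.get(tool_name)
        if tool is None:  # anything outside the allowlist is ignored
            break
        gathered.append(tool(query))
        if is_complete(" ".join(gathered)) == "yes":
            break
    return " ".join(gathered) or NO_ANSWER


def stub_pick_tool(context: str) -> str:
    """Stand-in for an LLM tool-selection classifier (assumption)."""
    return "list_alternatives" if "Booking" in context else "lookup_booking"


def stub_is_complete(draft: str) -> str:
    """Stand-in for an LLM completeness classifier (assumption)."""
    return "yes" if "Alternative" in draft else "no"


print(answer("Can I move my flight?", stub_pick_tool, stub_is_complete))
```

Because the loop, the step limit and the allowlist live in code, a misbehaving classifier can at worst pick the wrong permitted tool or stop early.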

The ‘Planning’ and ‘Iterative Combination of Outputs’ capabilities are, however, much harder to achieve in a rigid router system. So when a task requires these (as determined by an LLM classifier or some other logic), we suggest creating a less constrained orchestrator branch in your system.
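One way such a hybrid could look is sketched here: known intents stay on fixed routes and only requests labelled as needing planning reach the orchestrator branch. The `needs_planning` label, the route table and both stubs are hypothetical; in practice `classify` and `orchestrate` would wrap model calls.

```python
from typing import Callable

Handler = Callable[[str], str]

# Fixed routes for well-defined intents (hypothetical templates).
ROUTES: dict[str, Handler] = {
    "change_flight": lambda msg: "Please share your booking reference so we can change your flight.",
    "cancel_flight": lambda msg: "Please share your booking reference so we can cancel your flight.",
}
PLANNING_LABEL = "needs_planning"  # hypothetical label for open-ended requests
REFUSAL = "Sorry, I can't help with that request."


def handle(message: str, classify: Callable[[str], str], orchestrate: Handler) -> str:
    """Router first; fall through to the orchestrator only when explicitly required."""
    label = classify(message)
    if label in ROUTES:
        return ROUTES[label](message)
    if label == PLANNING_LABEL:
        return orchestrate(message)
    return REFUSAL


def stub_classify(message: str) -> str:
    """Stand-in for an LLM classifier (assumption)."""
    text = message.lower()
    if "cancel" in text:
        return "cancel_flight"
    if "change" in text:
        return "change_flight"
    if "plan" in text or "itinerary" in text:
        return PLANNING_LABEL
    return "unknown"


def stub_orchestrator(message: str) -> str:
    """Stand-in for an unconstrained orchestrator agent (assumption)."""
    return f"[orchestrator] multi-step plan for: {message}"


print(handle("Please change my flight", stub_classify, stub_orchestrator))
print(handle("Plan a three-city itinerary for me", stub_classify, stub_orchestrator))
```

This keeps the orchestrator's less predictable behaviour confined to a single, clearly marked branch that can be monitored and rate-limited separately.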

Conclusion & Future Outlook

Your choice between router and orchestrator architectures should reflect your application's clarity, complexity, and interaction style. Router-based approaches currently provide reliability, efficiency, and ease of testing for clearly defined tasks. Orchestrators offer greater flexibility for broader, conversational interactions.

As LLMs continue to advance, the balance between these approaches may evolve. At Tomoro.ai, we lean towards router-based or hybrid architectures for production workloads and reserve orchestrators for open-ended problems demanding dynamic, human-like engagement.