Introduction

We build A LOT of retrieval augmented generation (RAG) systems. You name it, we’ve probably seen it.

These days RAG sometimes gets a bad name, either because people now think it’s completely trivial (it is to start, less so to scale) or they think it’s been overtaken by ‘agentic systems’ (which in many cases if you scratch at the surface start looking very similar to RAG very quickly…)

This blog lays out a worked example or two to show how we deal with some common challenges, namely:

-

Handling mixed text + numeric data and why it breaks naive RAG: keywords collide and numbers carry no semantic meaning.

-

Why designing summary-first embeddings helps: generate a short descriptive summary per chunk, then embed/query on that summary.

-

How to generate contextual summaries: include parent-document context so similarly-shaped stats stay disambiguated.

-

When to rely on code and Pydantic models: where verbatim content matters, combine custom code and/or Pydantic model with LLM calls for reliability.

Building custom RAG solutions

The basics

RAG systems power things from support bots to internal knowledge assistants.

Under the hood, you typically:

-

Chunk your source documents

-

Embed each chunk into a vector space

-

Retrieve the top-K chunks at query time

-

Generate an answer conditioned on those chunks

Popular toolkits—LangChain, LlamaIndex, OpenAI’s Filestore—make those steps almost trivial. But in real-world pipelines you’ll hit data that isn’t just dense text, and basic RAG can struggle. In the following sections, we will show concrete examples of data challenges, and gradually build the solution as more complexity is introduced.

When things get more tricky

- When your data is not just text (not uncommon actually)

Consider the following data chunk in a gaming context:

{

"Attack": {

"Range": {

"default": 5,

"with_Draconic_Ascension": 5.5,

},

"Speed": {

"default": "2 seconds",

"with_Draconic_Ascension": "2.2 seconds",

},

"Damage": {

"default": 10,

"with_Draconic_Ascension": 12,

}

}

}

Embeddings work because of the learnt relationships between words through semantic meaning and grammars. With the data above, we have a mixture of text and numbers, where out of this exact context, the numbers have no relationships with the words. So we could say this chunk of data is pretty much a combination of somewhat descriptive words followed by some random numbers.

This actually wouldn’t be a problem if this was the only type of data we had, because we could still retrieve by the embeddings of the few descriptive words available (or just use text-to-sql). However, what if this chunk is buried in lots of text dense chunks, where these words appear as well? For example:

{

"Draconic_Ascension": {

"description": "Transform into dragon form. Attack range and speed are i

ncreased by 10%, damage is boosted by 20%.",

"details": {

"activation_conditions": "Can only be activated when HP is below 50%

or Fury meter is full.",

"visual_effects": "Wings unfurl, scales shimmer with embers, voice line

s change to echoing growls.",

"lore": "An ancient bloodline awakens. The bearer of the mark channel

s the soul of the last Flamewing Wyrm, becoming a living storm of fire and fur

y."

}

}

}

Now imagine we want to retrieve “What is the attack range with Draconic Ascension?”, very likely we won’t be able to retrieve the relevant chunk we want, because it is deep in the noise of other chunks that contain the same keywords.

The root problem is we cannot differentiate between these chunks of data well, even though they contain different types of information for the same topic. Could we somehow enrich / enhance that? Of course we can :smile:

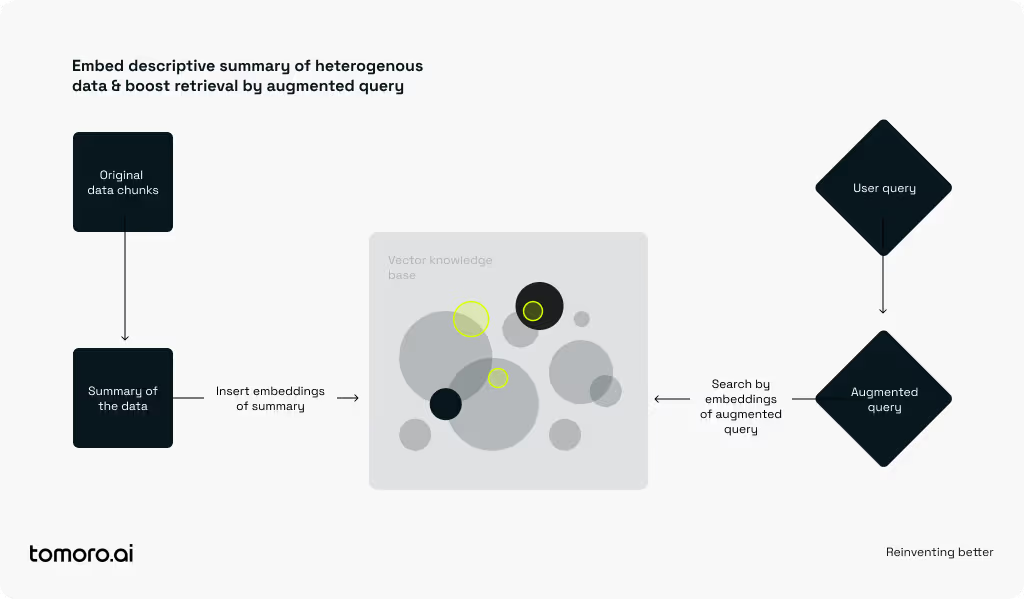

- Enrich your data by summarising it, yes you read it right

Instead of directly embedding the chunk itself, we can first generate a summary describing what the data is about, then embed and retrieve on the summary. At generation step, we would still use the original data linked to the summary.

So for the two chunk examples shown above we’d generate some summary like:

-

Attack statistics of range, speed and damage (default and with Draconic Ascension).

-

Description and details of the Draconic Ascension, including activation conditions, visual effects and lore.

We then also augment the query to “align” with the summary. For instance, we’d turn “What is the attack range with Draconic Ascension?” into “What is the statistics of attack range with Draconic Ascension?” This is especially important where the retrieval query comes from users outside of technical fields asking in ~~“freestyle"~~ normal human language, because it is not within their knowledge or concern how a RAG works to maximise precision/recall after all.

- Don’t take things out of context (generally applicable in life)

Now a follow up scenario is dealing with tons of data chunks that look the same, like below:

# Chunk one

{

"Attack": {

"Range": {

"default": 9,

},

...

}

}

# Chunk two

{

"Attack": {

"Range": {

"default": 6,

},

...

}

}

# Chunk three

{

"Attack": {

"Range": {

"default": 7,

},

...

}

}

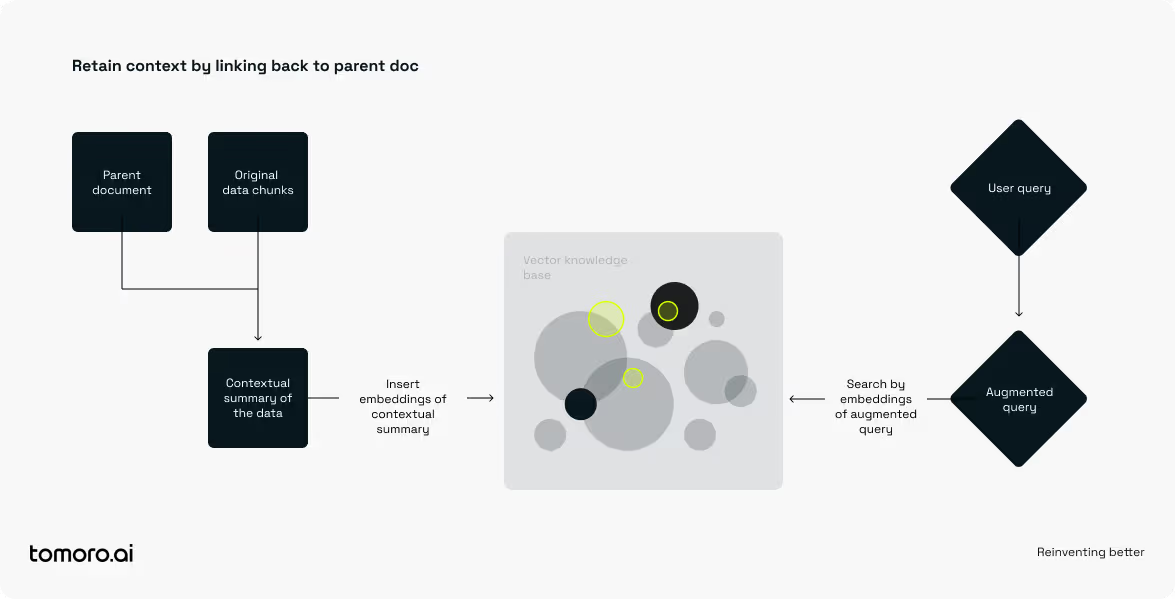

If we keep to the same approach, imagine asking “what is character X’s attack range?”, and we’d be playing a luck based guessing game with these summaries we just generated, because they’d also look very similar. So how could we differentiate them?

Simple answer is, provide context. We could simply include a reference to the chunk’s parent doc in the data chunk, e.g. {”character”: “X”} in this case. So now we’d be able to accurately retrieve the right data for character X when we also have the same data for character Y and Z.

A better and more generalisable approach however, would be to generate contextual summary of the chunk. I.e. instead of generating a summary of just the data chunk itself, we could pass in both its parent document and the chunk to general contextual summary, where we include in the summary how this chunk fits into its parent doc, e.g.:

-

This chunk provides detailed statistics of … for character X. The chunk fits into the full document by showing X’s strength in attack speed…

-

This chunk provides detailed statistics of … for character Y. The chunk fits into the full document by showing Y’s boosted stats with her special ability…

-

This chunk provides detailed statistics of … for character Z. The chunk fits into the full document by showing Z’s stats that’s well suited as a tank in team matches…

This method (which is partially inspired by Anthropic) might seem an overkill for the example shown above, however it’s very effective for chunks that could be misinterpreted “out of context”, plus it provides a unified approach that works for all chunks, maintaining a neat engineering pipeline.

- When you need to be ~~a control freak~~ rigorous

Usually we get data in full pieces and break them into chunks for a RAG system. In this example we show something a little different - data that is broken into chunks but bad chunks - they are random sections from a logical chunk which actually need to be grouped back together. A logical chunk means a chunk of content that naturally should sit together, such as a sub-section of a document, or some coherent paragraph.

Our first attempt with this data is to feed everything into an LLM call, and ask it to group them as it sees fit, then return the grouped content. LLM should be pretty good at it right? Well, yes and no.

We’ve found out, in several other occasions as well, that LLMs tend to behave lazily and are unreliable when you require the full and exact content, especially when the context is long. Which makes perfect sense. But that was a deal breaker for this specific use case because we do need word by word the exact content - no summaries, no skipping any bits of the original content. We cannot miss any details.

And of course the “yes” bit was that it did a great job understanding the semantics and structures of the broken chunks. Only if it doesn’t refuse to quote back the exact content. Damn it :/

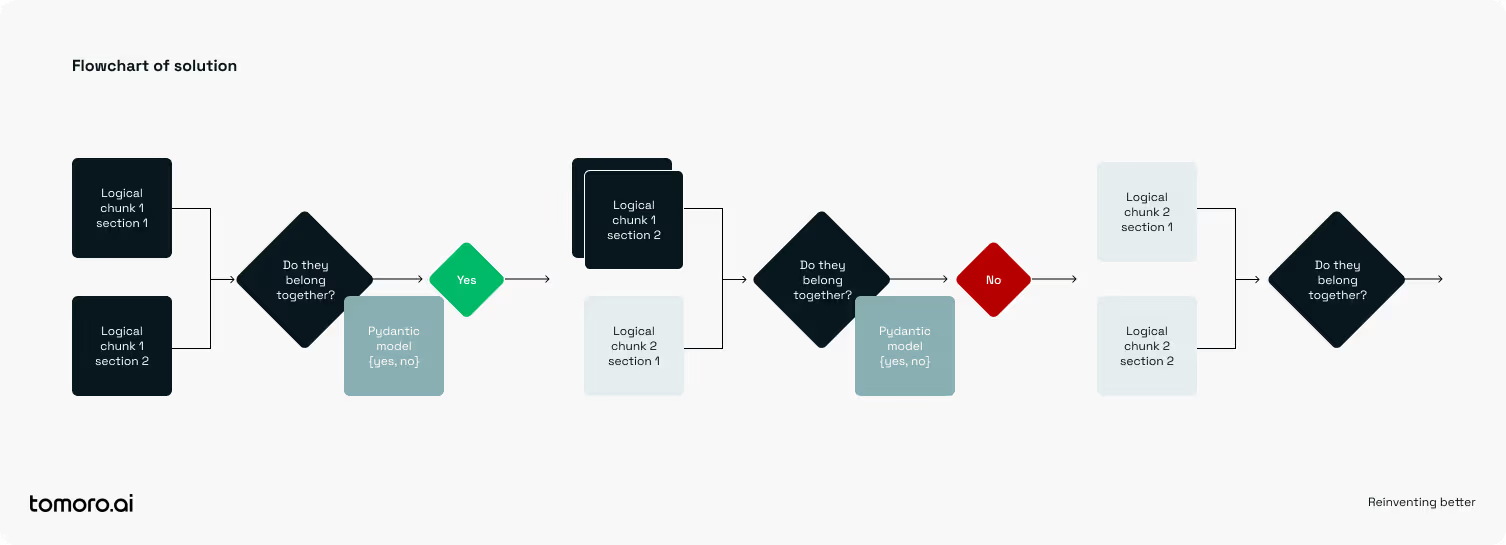

How could we then utilise what an LLM is good for, but avoid what it’s unreliable for? We turned to our good old friend, code (reads customised python function). And a “can’t-be-simpler” pydantic model. Here’s the solution:

-

Iterate through the sections while maintaining a current logical chunk

-

At every section, ask the LLM: does this section belong to the current logical chunk, answer yes or no (following the pydantic model)?

-

If yes, attach the section to the chunk; if no, flush out the current logical chunk as it is complete, then start a new one with the section.

Of course we’re using bit more tokens here than a single pass of the full content, but for the specific use case where being able to retain the exact content is the top priority, it was well worth the (minor) extra cost.

This is a very simple solution, but it follows an important principle, that when rigor is needed, we don’t want to solely rely on LLMs as they’re probabilistic after all.

Custom code/functions and pydantic models could be utilised to achieve a predictable and reliable outcome while still unleashing LLM’s capabilities.

Wrapping up

Building a gen AI solution is as much as an engineering challenge as it is an AI challenge. We hope these examples have inspired you to tackle your own unique challenges. To read more on engineering-first gen AI solutions, check out our blog post on router based agentic system design.