Can Postgres Handle Your RAG Pipeline? Testing pgai's Database-First Approach

pgai is an interesting approach to let databases do more of the routine operational work, so your application code can be simpler, but currently lacks the flexibility needed to cope with more complicated settings.

Executive Summary

- LLMs can only “see” a limited amount of text at once (the context window). This works for small tasks, but breaks down when a knowledge base spans thousands of pages. Even when the context window is sufficient, performance can still degrade due to the “needle-in-a-haystack” problem.

- RAG (‘retrieval augmented generation’) has emerged as a very common pattern where you maintain a knowledge base (docs, wikis, policies, transcripts, etc.), run a semantic search on a user query to retrieve the most relevant snippets using embeddings, and then feed those chunks into the LLM alongside the question. This constrains the model’s context and, when done correctly, can improve answer quality and reduce hallucinations.

- pgai is an open source Postgres extension (and companion tooling) that helps you build “AI retrieval” workflows on top of the trusted open source database PostgreSQL.

- The main idea is to push more of the standard RAG pipeline into the database layer (ingest → chunk → embed → keep embeddings in sync), instead of treating the DB as “just storage” for embeddings.

- Initial thoughts are that it is promising but once your RAG pipeline gets even mildly complicated (particularly chunking approaches), it’s not suitable. We’ll be closely watching this project though.

The RAG Pattern

There are a lot of ways to build RAG, and for a longer breakdown of different RAG approaches, read Practical Examples of Customised RAG Solutions. The design space gets surprisingly deep once you care about quality and the common “default” approach generally looks like this:

- Take a pile of documents.

- Split them into chunks. There are many different methods to do this (e.g. paragraphs, semantic groupings).

- Turn each chunk into an embedding.

- Store embeddings in a vector database (Pinecone, Milvus, etc.) or in Postgres using pgvector.

- At query time, search for the closest chunks `and pass them into the LLM (again...there are many ways to do this too).

In many stacks, steps (1)–(3) happen outside the database in application code or a data pipeline, and the database is mostly used for:

- storing embeddings

- searching embeddings

Purpose of pgai

pgai is a Postgres extension (open source, developed by Timescale) that tries to blur that line.

Instead of treating embeddings as something your app manually manages, pgai makes embeddings a database feature:

- You define what table / documents you want embedded.

- You specify the embedding model and a chunking strategy.

- pgai manages the rest, including keeping embeddings up to date as your source data changes.

The promise is appealing:

- Less bespoke glue code that needs to be maintained.

- It should be easier to keep the embeddings ‘fresh’ as the underlying source documents change.

- Postgres/pgai manages your retries, rate limits, failed jobs, etc.

Reader note: pgai bundles pgvector (another very popular RAG Postgres extension) within it. pgvector adds vector storage and similarity search to Postgres, while pgai builds on that to automate the RAG pipeline steps like chunking, embedding, and keeping those embeddings up to date.

Initial Thoughts on pgai

What we liked

1) It’s straightforward to get running.

The happy path is reasonably simple:

- Pull the Timescale docker images (database + worker).

- Provide your embedding provider API key.

- Run a small amount of SQL to declare the vectoriser (basically: what to embed, how to chunk it, which model to use).

After that, pgai arranges for a vectoriser worker to run as a separate process and generate embeddings asynchronously (e.g. every 5 minutes, or whatever cadence you want).

2) It’s nice to do the whole pipeline “near” the database.

pgai can ingest content from tables, and it can also load documents from places like S3, then parse + chunk + embed them. It can also handle different text document formats like pdfs, markdown etc.

What felt limiting

1) You lose a lot of control (and RAG sometimes needs control).

High-performance RAG systems (when measured in terms of answer quality) often require bespoke pipelines, such as:

- custom chunking rules (by headings, by page, by speaker turns, etc.)

- metadata-aware chunking (keep section titles, timestamps, authors, doc type)

- different embedding strategies per document type

pgai offers less flexibility on the above.

At the moment there are two main chunking strategies: character text splitter and a recursive character text splitter, plus a no chunking option. That might be enough for some use cases but many production RAG systems require more customisation.

It would be amazing to see if Timescale could incorporate some of the more sophisticated chunking strategies that we see in libraries like Chonkie and similarly support advanced designs like Anthropic's contextual retrieval.

2) Text-first, not multimodal.

Many interesting RAG problems are no longer purely text:

- PDFs with diagrams

- screenshots / images

- audio recordings

- video clips

Even if you can “extract text” from these sources, that’s not the same as a true multimodal embedding pipeline.

If pgai eventually supports multimodal models end-to-end (load → chunk → embed for large images/audio/video stored in S3, with robust syncing), that would be compelling but today, it is a text embedding workflow.

3) If you only need embeddings, you might not need pgai.

If your ingestion pipeline is already custom (or needs to be), then “embedding chunks of text” is not the hardest part of RAG. In that world, pgai is solving the easiest part of the problem.

Also, if your knowledge base is updated infrequently, the value of automatic embedding syncing is not as great.

Text to SQL layer

One particularly good way to use pgai would be to deploy a text-to-sql interface over your databases. This can be achieved quite easily with semantic_catalog module offered by pgai. Simply setup like this:

OPENAI_API_KEY="your-OpenAPI-key-goes-here"

TARGET_DB="postgres://user:password@host:port/database"

CATALOG_DB="postgres://user:password@host:port/database"

and get semantic catalog to scrape your data dictionaries with pgai semantic-catalog create. This generates context from your datastore which looks something like this:

---

schema: postgres_air

name: aircraft

type: table

description: Lists aircraft models with performance characteristics and unique codes.

columns:

- name: model

description: Commercial name of the aircraft model.

- name: range

description: Maximum flight range in kilometers.

- name: class

description: Airframe class category or configuration indicator.

- name: velocity

description: Cruising speed of the aircraft.

- name: code

description: Three-character aircraft code serving as the primary key.

...

This context is now available to pgai in a variety to ways;

Through semantic search:

This query will return the tables, functions and other objects that might be relevant to your natural language query:

pgai semantic-catalog search -p "Your natural language question goes here!"

Get raw context:

This will render the raw YAML context related to your natural language query:

pgai semantic-catalog search -p "Your natural language question goes here!" --render

Generate SQL:

Or you can directly generate the raw SQL required to answer your query. The context from previous step is sent to a LLM and response generated:

pgai semantic-catalog generate-sql -p "Your natural language question goes here!"

How You Can Use pgai Right Now

If you’re building a relatively simple RAG system, pgai is worth trying out if you want:

- Postgres as your system of record

- minimal glue code

- embeddings that automatically stay in sync

- a fast way to apply a text-to-SQL to your databases

- to play around with new RAG tools and Postgres extensions (which we like doing at Tomoro 🙂).

Where We’d Be Cautious

It is probably worth waiting on pgai if your RAG pipeline needs any of the following:

- heavy custom ingestion or chunking logic

- lots of document types with different parsing requirements

- multimodal embeddings

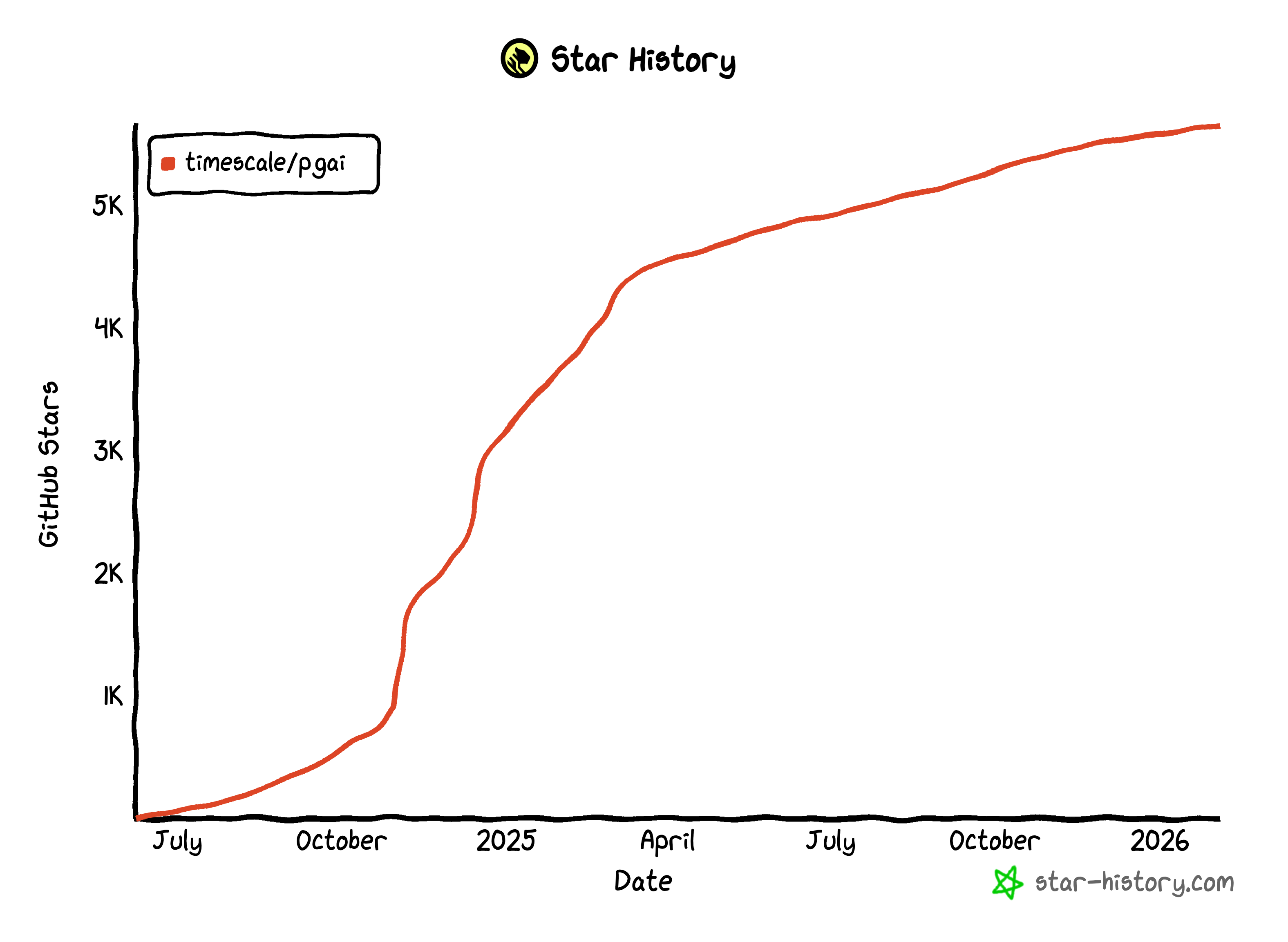

Lastly, while it is clear pgvector has seen strong adoption, it is unclear if pgai will see the same level of interest and therefore support (not withstanding it has only been around for circa 18 months).

Summary

pgai is an interesting approach to RAG to let databases do more of the routine operational work, so your application code can be simpler.

Right now, it is:

- usable and genuinely pleasant for simple RAG setups

- not flexible enough for more bespoke pipelines (especially multimodal)

It shows promise and is definitely worth watching to see how it progresses.