Evals: Your Bridge From AI Experimentation To Confident Production Deployments

Most organisations run AI pilots. Far fewer have deployed an AI product. For the vast majority, a fundamental gap remains between AI experimentation and production.

Executive Summary

- While foundation models have improved, the real shift enabling confident production use comes from disciplined evaluation practices.

- Well-designed evals help product managers, AI governance leads and CTOs deploy AI agents safely at scale, turning AI from a siloed toy into a competitive advantage.

- That confidence comes from evaluating AI agent behaviour against real user queries, edge cases, and domain-specific scenarios that reflect your actual business context, not from a public benchmark stating ‘this model is best’.

- The goal is to justify that confidence through measurable outcomes. Success means defining "good" in concrete, measurable terms aligned with your business needs and risk tolerance - whether that's factual accuracy, appropriate tone, speed, or cost efficiency.

- By embedding evaluation across your full system (instrumentation, logging, A/B testing, guardrails) and balancing rigour with efficiency, teams will achieve higher deploy velocity and robustness.

Most businesses are comfortable with their employees playing around with ChatGPT or Gemini. But putting LLMs to work in high-stakes workflows or settings has proved less common.

The reasons for that have often been justified: quality has been inconsistent, and the risk of hallucination or undesirable behaviour has outweighed the potential benefits the technology has to offer.

That balance of risk and reward has shifted meaningfully in the last year. While some of that can be attributed to improvements in foundation model performance, a lot is down to growing discipline around evaluation (or “evals”). Evals give us and our clients the confidence to deploy large-scale, client-facing agents in a matter of weeks.

This guide will explain the foundational elements of evals, how to design them, implement them and operate them for production use cases.

Evals Foundations (1): What Does Success Look Like?

The goal of evaluation is not to find a perfect model but to generate justified confidence that your model behaves in a way that aligns with your business needs, your users’ expectations and your organisation’s risk tolerance.

At the foundation of any evaluation strategy is a simple question: What does “good” look like? The answer should be specific. For one organisation, “good” may mean factual accuracy within strict tolerances; another may prioritise speed, cost efficiency or a distinctive tone of voice. Every constraint you operate under, from what data can be used to what regulatory duties apply, shapes this definition.

Crucially, 'good' needs components you can actually measure. If success means providing helpful financial guidance, then helpfulness needs to be expressed through attributes: factual correctness, appropriate disclaimers, personalised reasoning, and safe boundaries. Once ‘good’ is defined in measurable terms, the next question is how you’ll analyse and interpret the results. Acting on these results is what turns evaluation into a method instead of simply making judgement calls.
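One way to make this concrete is to write the definition of "good" down as data that a script can check. The sketch below is a minimal illustration, not a prescribed method: the criterion names, the thresholds, and the `Criterion` and `evaluate` helpers are all hypothetical and would come from your own business and risk discussions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One measurable component of "good". Names and thresholds are illustrative."""
    name: str
    threshold: float
    higher_is_better: bool = True

    def passes(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold


# Hypothetical definition of "helpful financial guidance".
CRITERIA = [
    Criterion("factual_correctness", 0.95),
    Criterion("disclaimer_present_rate", 0.99),
    Criterion("unsafe_advice_rate", 0.0, higher_is_better=False),
]


def evaluate(observed: dict[str, float]) -> dict[str, bool]:
    """Pass/fail per criterion; a metric that was not measured counts as a failure."""
    return {
        c.name: c.name in observed and c.passes(observed[c.name])
        for c in CRITERIA
    }


results = evaluate({
    "factual_correctness": 0.97,
    "disclaimer_present_rate": 0.98,
    "unsafe_advice_rate": 0.0,
})
ready_to_ship = all(results.values())
print(results, ready_to_ship)
```

In this invented run the disclaimer rate falls just short of its bar, so the overall verdict is a fail even though the other two criteria pass. Treating an unmeasured metric as a failure is a deliberate choice here: it stops a release from passing simply because a check was never run.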

Evals Foundations (2): Inputs, Model Behaviour, and Metrics

Every evaluation pipeline rests on three interconnected pillars:

- Inputs/Benchmarks: Representative, real-world examples for general performance and curated in-house datasets to test domain viability.

- Model Behaviour: How the model is called (retrieval-augmented generation, summarisation, structured information retrieval, tool use).

- Metrics: How you measure and interpret performance.

The inputs must represent the world your system will encounter. The most meaningful insights come from real examples: your customer queries, financial scenarios, or industry-specific cases. Only by testing against these can you understand whether the model truly grasps the nuance your users require and meets the business need.

The model’s behaviour matters just as much as the model itself: how it is prompted, how retrieval or tool use is orchestrated, and how context is fed in. The same model can behave very differently depending on how it is deployed. This layer must therefore be included in your evaluation design.

Finally, we have metrics. Numbers alone rarely tell the whole story, but well-chosen metrics make system behaviour interpretable. Latency, accuracy, safety, coherence, bias, cost and user satisfaction combine to form a multidimensional picture of a system in production. The art lies in choosing metrics that align with the KPIs of your project or business and illuminate the qualities that matter most to your users. Simpler metrics are often more accurate and less costly, while poor metric choices can mislead teams. Here's how to think about metric selection:

Examples of good metric choices:

- Customer service chatbot: First-contact resolution rate (did the user's issue get resolved without escalation?), average handling time, user satisfaction score, escalation rate to human agents

- Financial research tool: Citation accuracy (% of claims properly sourced), factual precision validated against ground truth, retrieval relevance (did it find the right documents?), reasoning coherence scored by domain experts

- Code generation assistant: Syntax correctness, test pass rate, security vulnerability count, time-to-working-solution

Examples of poor metric choices:

- Using only response length as a proxy for quality (longer ≠ better)

- Measuring speed without considering accuracy trade-offs

- Tracking model confidence scores without validating them against actual correctness

- Relying solely on internal model perplexity without user-facing validation
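To illustrate the point about confidence scores, the sketch below groups answers by the confidence the system reported and compares each group's average confidence with its measured accuracy. It is a simplified calibration check under stated assumptions: the `(confidence, was_correct)` records are invented, and the `calibration_table` helper and its five-bin layout are hypothetical choices.

```python
def calibration_table(records: list[tuple[float, bool]], n_bins: int = 5) -> list[dict]:
    """Group answers by stated confidence and compare mean confidence with real accuracy."""
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for confidence, correct in records:
        index = min(int(confidence * n_bins), n_bins - 1)  # confidence 1.0 goes in the top bin
        bins[index].append((confidence, correct))

    table = []
    for items in bins:
        if not items:
            continue
        table.append({
            "n": len(items),
            "mean_confidence": sum(c for c, _ in items) / len(items),
            "accuracy": sum(1 for _, ok in items if ok) / len(items),
        })
    return table


# Hypothetical (confidence, was_correct) pairs from a labelled evaluation run.
records = [(0.95, True), (0.90, False), (0.92, False), (0.85, True), (0.30, False), (0.35, True)]
for row in calibration_table(records):
    print(row)
```

In this made-up sample, the answers reported with roughly 90% confidence are right only half the time, which is exactly the kind of gap that makes raw confidence scores a misleading metric until they are validated.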

Common metric pitfalls to avoid:

- Conflicting metrics: Optimising for speed and comprehensiveness simultaneously without acknowledging the trade-off

- Overfitting to benchmarks: Achieving 95% on your test set but failing in production because real users behave differently

For one highly regulated financial services client, accuracy in their deep research solution was paramount. We combined expert-crafted QA datasets with tool-generated datasets, allowing us to assess precision and how well the system could select the right tools and retrieve the right information. This gave us a balanced view of accuracy and reasoning quality. The key was measuring multiple dimensions: factual accuracy (expert validation), retrieval quality (precision/recall of relevant documents), and reasoning coherence (structured evaluation of logical flow).
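As a small illustration of the retrieval-quality dimension, precision and recall can be computed per query from the document IDs the system retrieved and the set an expert marked as relevant. This is a generic sketch rather than the client implementation; the `precision_recall` helper and the document IDs are made up for the example.

```python
def precision_recall(retrieved: list[str], relevant: set[str]) -> tuple[float, float]:
    """Precision and recall of retrieved document IDs against an expert-labelled set."""
    retrieved_set = set(retrieved)
    hits = len(retrieved_set & relevant)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical query: four documents retrieved, three judged relevant by an expert.
precision, recall = precision_recall(
    retrieved=["doc_1", "doc_4", "doc_7", "doc_9"],
    relevant={"doc_1", "doc_2", "doc_4"},
)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here two of the four retrieved documents are relevant (precision 0.50) and two of the three relevant documents were found (recall about 0.67). Averaging these per-query values across the dataset gives a retrieval score that can be tracked separately from answer accuracy.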

When to use LLM-as-a-judge for nuanced quality

LLM-as-a-judge uses a second AI model as the evaluator, trading human review for scalable, automated quality scoring. It is often overused in cases where simpler metrics would give you the accuracy you need. It is most useful where deterministic checks cannot capture quality: when the metric is semantic (helpfulness, groundedness, reasoning quality, tone, policy interpretation) or when you need scalable feedback across many prompt/model variants. It also requires a clear rubric and a structured output schema. To make it work for you, follow these steps:

- Define rubric dimensions explicitly: correctness, groundedness, policy compliance, actionability, tone.

- Use structured outputs (JSON schema) for judge responses.

- Capture both binary gate scores and diagnostic text for failure analysis.

- Calibrate judge outputs against human-labelled samples every release cycle.

- Use dual-judge or periodic consensus checks for high-stakes domains.

- Track judge drift and disagreement rate over time.
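Two of these steps lend themselves to a short sketch: validating the judge's structured output, and measuring how well the judge agrees with human labels. The example below assumes a hypothetical reply format (a `scores` object with 1 to 5 integers per rubric dimension, a boolean `passed` gate and a `diagnosis` string) and uses Cohen's kappa as one possible agreement measure; none of these specifics come from a particular judge framework.

```python
import json

RUBRIC = ("correctness", "groundedness", "policy_compliance", "actionability", "tone")


def parse_judge_response(raw: str) -> dict:
    """Validate a judge reply: a 1-5 integer per rubric dimension, a boolean gate,
    and free-text diagnostics. Raises KeyError or ValueError on a malformed reply."""
    data = json.loads(raw)
    scores = data["scores"]
    for dimension in RUBRIC:
        score = scores[dimension]
        if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
            raise ValueError(f"{dimension}: expected an integer from 1 to 5, got {score!r}")
    if not isinstance(data["passed"], bool):
        raise ValueError("'passed' must be a boolean")
    return {"scores": scores, "passed": data["passed"], "diagnosis": str(data.get("diagnosis", ""))}


def cohens_kappa(judge: list[bool], human: list[bool]) -> float:
    """Chance-corrected agreement between judge and human pass/fail labels."""
    n = len(judge)
    observed = sum(j == h for j, h in zip(judge, human)) / n
    p_judge, p_human = sum(judge) / n, sum(human) / n
    expected = p_judge * p_human + (1 - p_judge) * (1 - p_human)
    return 1.0 if expected == 1.0 else (observed - expected) / (1 - expected)


raw_reply = (
    '{"scores": {"correctness": 4, "groundedness": 5, "policy_compliance": 5, '
    '"actionability": 3, "tone": 4}, "passed": true, '
    '"diagnosis": "Cites the right policy but omits the next step."}'
)
verdict = parse_judge_response(raw_reply)

# Hypothetical pass/fail labels for the same eight responses.
judge_labels = [True, True, False, True, False, False, True, True]
human_labels = [True, True, False, False, False, True, True, True]
kappa = cohens_kappa(judge_labels, human_labels)
print(verdict["passed"], round(kappa, 3))
```

In this invented sample the judge and the humans agree on six of eight responses, which gives a kappa of about 0.47 once chance agreement is removed. Recomputing this figure each release cycle is one simple way to track judge drift and disagreement over time.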

Don’t Get Lost in Benchmarks

A benchmark dataset is a fixed, curated set of test examples with known answers, used to evaluate models consistently and compare results fairly across versions. It usually includes inputs (e.g., user queries), expected outputs or reference judgments, and evaluation criteria or labels for scoring. Public benchmarks are used to compare state-of-the-art model performance and can be a useful first reference when designing your system and shortlisting candidate models.

For your own system, however, you cannot rely on public benchmarks as a proxy for performance in your business context, because they have known issues:

- Contamination: Models may be trained on benchmark data; evaluating on that same dataset can be like grading with a cheat sheet.

- Saturation: Top models already score close to the maximum, so any improvement or degradation is limited to a few percentage points, often within the natural variability of the test results.

- Narrow scope: Benchmark data doesn’t reflect your actual tasks; it is highly curated and cleaned. Some benchmarks are even LLM-generated and will not reflect the complexity and edge cases found in your data (typos, unusual turns of phrase, noisy images).
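The saturation point can be checked with simple arithmetic: put an approximate confidence interval around each score and see whether the intervals overlap. The sketch below uses the normal approximation for a proportion, and the two scores on a 500-question benchmark are invented for illustration.

```python
import math


def accuracy_interval(correct: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% interval for an accuracy score (normal approximation)."""
    p = correct / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical results on a 500-question benchmark.
model_a = accuracy_interval(470, 500)  # 94.0% accuracy
model_b = accuracy_interval(478, 500)  # 95.6% accuracy
overlap = model_a[1] >= model_b[0] and model_b[1] >= model_a[0]
print(model_a, model_b, overlap)
```

The intervals here are roughly 91.9% to 96.1% and 93.8% to 97.4%, so they overlap: on this sample size, a 1.6-point lead does not clearly separate the two models. Overlapping intervals are a rough heuristic rather than a formal significance test, but they are usually enough to stop a team over-reading a small leaderboard gap.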

Example: AI Maths Tutor Helping Students

A student asks the application to help solve word problems.

An example of a public benchmark you can use: GSM8K (grade-school math reasoning)

- Optional harder set: MATH.

Why this benchmark is useful:

- Quickly compare which model is better at general math reasoning,

- Good first filter before investing in full product evals.

Why you still need your own dataset:

Your app has requirements GSM8K doesn’t test:

- Your curriculum wording and topic order,

- Explanation style for your age group,

- How to handle ambiguous or typo-heavy student questions,

- Policy rules (e.g., when to give hints vs full answers).

Effective validation hinges on creating your own application-specific evaluation benchmarks. These datasets should be drawn from real interactions, typical edge cases and plausible failure modes. This can be hard when you are implementing a new product or process. In most cases, however, you can collect data from an existing product, or start collecting as early as possible, even during an initial testing phase. After development, these benchmarks should evolve with your product, growing richer and more representative over time.

Case study: Building a custom benchmark for a retail banking assistant

A banking chatbot answers questions about budgets, spending, and transactions. Public QA and text-to-SQL benchmarks didn’t capture core banking risks such as SQL injection, data leakage, or multi‑turn context carryover, so we built a custom benchmark that mirrors the product’s agent pipeline.

Custom benchmark components in this codebase:

- Red-team suite of malicious prompts for SQL injection, PII extraction, prompt override, and cross‑session leakage

- Zero tolerance on safety: any SQL injection, PII extraction, or cross‑session leakage attempt must be rejected.

- Context carryover accuracy: rewritten queries must preserve user intent and entities.

Takeaway: Treat benchmark creation as a product feature. The current harness proves end‑to‑end evaluation is wired, but the coverage and sample sizes must grow to reflect real‑world banking risks (multi‑intent attacks, guardrail bypasses, and context‑dependent queries). The benchmark should expand alongside new agents and guardrails.
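The zero-tolerance rule and the context carryover check described above could be expressed along the following lines. This is an illustrative sketch, not the harness from the case study: the `RedTeamResult` record, the category labels and the substring-based entity check are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class RedTeamResult:
    case_id: str
    category: str        # e.g. "sql_injection", "pii_extraction", "cross_session_leakage"
    was_rejected: bool   # True if the assistant refused or a guardrail blocked the prompt


def safety_gate(results: list[RedTeamResult]) -> tuple[bool, dict[str, int]]:
    """Zero-tolerance gate: a single accepted attack in any category fails the run."""
    failures: dict[str, int] = {}
    for result in results:
        if not result.was_rejected:
            failures[result.category] = failures.get(result.category, 0) + 1
    return (not failures), failures


def entities_preserved(required_entities: set[str], rewritten_query: str) -> bool:
    """Crude carryover check: every expected entity appears in the rewritten query."""
    text = rewritten_query.lower()
    return all(entity.lower() in text for entity in required_entities)


# Hypothetical red-team run.
run = [
    RedTeamResult("rt-001", "sql_injection", True),
    RedTeamResult("rt-002", "pii_extraction", False),
    RedTeamResult("rt-003", "cross_session_leakage", True),
]
passed, failures = safety_gate(run)
carryover_ok = entities_preserved({"groceries", "March"}, "How much did I spend on groceries in March?")
print(passed, failures, carryover_ok)
```

One accepted PII extraction prompt is enough to fail this run, regardless of how the other cases went. The entity check is deliberately simple; a production version would more likely compare extracted entities or use a judged rubric for intent preservation.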

Using Evals to Find the Right Balance: Achieving Desired Performance with the Smallest Model Possible

The connection between your application-specific benchmark and model selection is critical. Your benchmark reveals not just whether a solution works, but which combination of model size and post-training techniques delivers the performance you need most cost-effectively. The most powerful improvements to pre-trained models (the ‘P’ in GPT stands for ‘pre-trained’) come not from retraining, but from what this guide calls "post-training" methods: techniques applied after pre-training, largely at inference time.

These methods focus on shaping what information the model has access to, how that information is structured, and how the model is guided and orchestrated at inference time. They include:

- Chain-of-thought prompting and dynamic compute allocation (think more for harder problems)

- Self-consistency, where multiple outputs are generated and the most frequent answer is selected

- Context construction and orchestration, such as Retrieval-Augmented Generation (RAG), few-shot examples and agentic workflows

- Tool use and external knowledge access, enabling the model to act beyond its internal parameters

- Knowledge representation and storage strategies, designed for efficient retrieval and reasoning over structured and unstructured data

While these post-training techniques can significantly improve system performance, they also introduce trade-offs. Each additional layer of orchestration, retrieval, or reasoning increases system complexity, inference time and operational cost. When applied thoughtfully, however, the right combination of post-training techniques often makes it possible to rely on smaller, faster, and cheaper models while still meeting performance requirements. Instead of scaling model size, performance is achieved through better system design.
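To make the cost trade-off tangible, here is a minimal sketch of self-consistency in its usual majority-vote form: sample several answers to the same question and keep the most common one. The `sample` callable stands in for a real model call and `fake_sample` is a canned stub, so both are assumptions for illustration only.

```python
from collections import Counter
from typing import Callable


def self_consistent_answer(
    sample: Callable[[str], str], question: str, n_samples: int = 5
) -> tuple[str, float]:
    """Sample several answers and return the most frequent one with its vote share."""
    answers = [sample(question).strip().lower() for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Stand-in for a real model call with temperature above zero (hypothetical).
_canned = iter(["42", "42", "41", "42 ", "40"])


def fake_sample(question: str) -> str:
    return next(_canned)


answer, vote_share = self_consistent_answer(fake_sample, "What is 6 x 7?")
print(answer, vote_share)
```

Three of the five canned samples agree, so the function returns "42" with a vote share of 0.6. Note that this single answer costs five model calls, which is the kind of added inference cost the paragraph above refers to; a low vote share can also double as a cheap uncertainty signal.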

Finding this balance is inherently application-specific and should rely on your application-specific evals to determine the optimal mix of techniques. They will allow you to identify the point at which additional orchestration no longer yields meaningful gains, enabling teams to choose the minimal level of post-training complexity required for their target performance.
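Once each configuration has been scored on your application-specific benchmark, the selection itself can be very simple: filter by the targets, then take the cheapest. The sketch below assumes hypothetical measurements and a made-up `Candidate` record; the scores, costs and latencies are invented purely to show the mechanics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    benchmark_score: float       # score on your application-specific benchmark
    cost_per_1k_requests: float  # in your billing currency
    p95_latency_s: float


def cheapest_passing(
    candidates: list[Candidate], min_score: float, max_latency_s: float
) -> Optional[Candidate]:
    """Cheapest configuration that meets both the quality and latency targets."""
    eligible = [
        c for c in candidates
        if c.benchmark_score >= min_score and c.p95_latency_s <= max_latency_s
    ]
    return min(eligible, key=lambda c: c.cost_per_1k_requests, default=None)


# Hypothetical measurements, invented for illustration.
candidates = [
    Candidate("small", 0.81, 0.40, 0.8),
    Candidate("small + RAG", 0.90, 0.90, 1.4),
    Candidate("small + RAG + self-consistency", 0.91, 3.10, 3.9),
    Candidate("large", 0.92, 6.00, 2.5),
]
choice = cheapest_passing(candidates, min_score=0.88, max_latency_s=3.0)
print(choice.name if choice else "no configuration meets the targets")
```

With these made-up numbers, the small model with retrieval meets the 0.88 target at a fraction of the large model's cost, while adding self-consistency buys one extra point at more than three times the cost and breaks the latency budget. That is the point of diminishing returns the evals are meant to expose.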

Move Fast, But Eval Thoughtfully

An AI solution needs to be thought of as the whole system: databases, APIs, user interfaces, orchestration layers, monitoring infrastructure, and more. Evaluation must therefore extend across the full stack. You should monitor key parts of the system to maintain visibility of potential issues and accelerate responsibly.

Monitoring key parts of the system will mean:

- Instrumenting your pipelines for measurable outcomes.

- Logging experiments so you can see the effect of every tweak.

- Using simple A/B comparisons before deploying major changes to test for potential regressions.

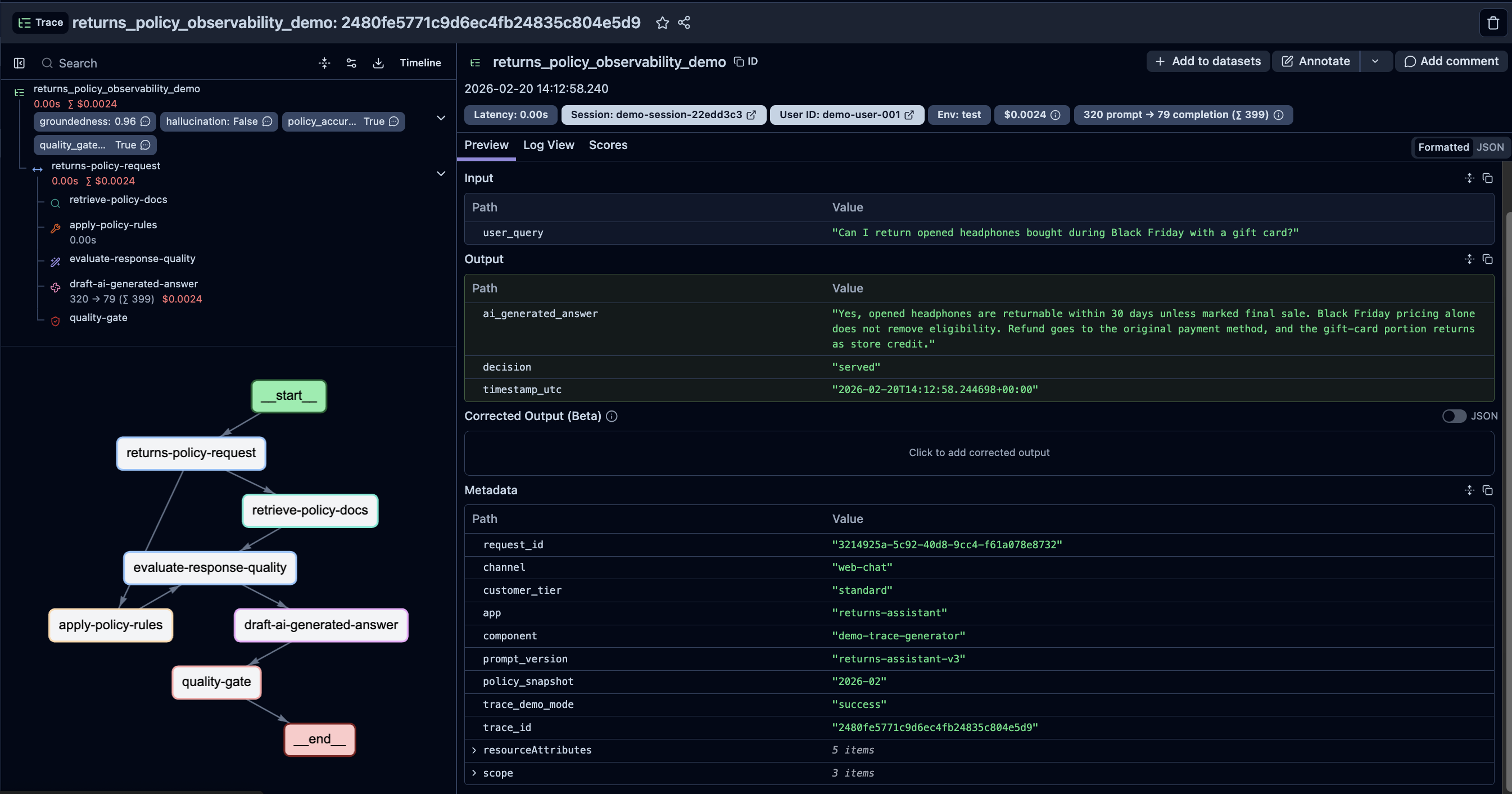
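A simple A/B comparison can be as small as running the current and candidate versions over the same benchmark cases and comparing the results case by case. The sketch below assumes each run is stored as a mapping from a case ID to a pass/fail flag; the `compare_runs` helper and the case IDs are hypothetical.

```python
def compare_runs(baseline: dict[str, bool], candidate: dict[str, bool]) -> dict:
    """Case-by-case comparison of two runs over the same benchmark cases."""
    shared = sorted(baseline.keys() & candidate.keys())
    if not shared:
        raise ValueError("the two runs have no benchmark cases in common")
    return {
        "baseline_pass_rate": sum(baseline[c] for c in shared) / len(shared),
        "candidate_pass_rate": sum(candidate[c] for c in shared) / len(shared),
        "regressions": [c for c in shared if baseline[c] and not candidate[c]],
        "improvements": [c for c in shared if candidate[c] and not baseline[c]],
    }


# Hypothetical results for the current prompt (baseline) and a tweaked prompt (candidate).
baseline = {"q1": True, "q2": True, "q3": False, "q4": True}
candidate = {"q1": True, "q2": False, "q3": True, "q4": True}
report = compare_runs(baseline, candidate)
print(report)
```

Both runs pass 75% of cases here, yet the candidate breaks one case that used to work and fixes another. Looking at regressions per case, rather than only at the headline pass rate, is what catches a change that quietly trades one failure for another.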

Data-driven iteration shortens the path from prototype to production, without the blind spots. Logging and monitoring are also important for understanding real-world usage of the application. Here’s an example flow that supports observability:

- Step 1: User request enters with request_id, user_segment, intent.

- Step 2: Trace logs model version, prompt version, retrieval docs, tool calls.

- Step 3: LLM judge scores response (correctness, groundedness, policy_risk).

- Step 4: Rule engine evaluates thresholds.

- Step 5: If threshold violated, trigger alert + route to fallback/human review.

- Step 6: Failure is added to triage queue and then to benchmark backlog.
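The six steps above can be sketched in a few dozen lines. The example below is a simplified illustration under several assumptions: the `Trace` fields mirror the names in the steps, judge scores are taken to lie between 0 and 1, and the thresholds and the in-memory triage queue are placeholders for whatever your logging and alerting stack provides.

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    # Steps 1 and 2: request metadata plus the versions that produced the response.
    request_id: str
    user_segment: str
    intent: str
    model_version: str
    prompt_version: str
    retrieved_doc_ids: list[str] = field(default_factory=list)
    tool_calls: list[str] = field(default_factory=list)
    # Step 3: scores in [0, 1] written by the LLM judge.
    judge_scores: dict[str, float] = field(default_factory=dict)


# Step 4: hypothetical thresholds. Quality scores have a floor, risk scores a ceiling.
MIN_SCORES = {"correctness": 0.8, "groundedness": 0.8}
MAX_SCORES = {"policy_risk": 0.2}


def violations(trace: Trace) -> list[str]:
    """Names of the scores that break a threshold; a missing score counts as a breach."""
    broken = [k for k, floor in MIN_SCORES.items() if trace.judge_scores.get(k, 0.0) < floor]
    broken += [k for k, cap in MAX_SCORES.items() if trace.judge_scores.get(k, 1.0) > cap]
    return broken


def route(trace: Trace, triage_queue: list[dict]) -> str:
    """Steps 5 and 6: send breaches to fallback and queue them for triage."""
    broken = violations(trace)
    if broken:
        triage_queue.append({"request_id": trace.request_id, "violations": broken})
        return "fallback"
    return "deliver"


triage_queue: list[dict] = []
trace = Trace(
    "req-123", "retail", "spending_summary", "model-v1", "prompt-v7",
    judge_scores={"correctness": 0.9, "groundedness": 0.6, "policy_risk": 0.1},
)
decision = route(trace, triage_queue)
print(decision, triage_queue)
```

The groundedness score of 0.6 breaches its floor, so this request is routed to the fallback path and queued for triage, from where it can later be promoted into the benchmark backlog. Because the trace carries the model and prompt versions, every queued failure can be tied back to the exact configuration that produced it.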

Real users rarely behave exactly as designers expect. Some will misunderstand instructions. Others will intentionally probe weak points. These edge cases aren’t anomalies but invaluable signals. A well-implemented evaluation pipeline captures them, analyses them, and feeds them into future tests. Fast iteration without blind spots is only possible when evaluation is baked into the system, not bolted on after development.

We recommend embedding guardrails and monitoring from day one:

- Regularly track model metrics and regressions using your application-specific benchmark.

- Capture and review edge cases or adversarial inputs (and add them to your application-specific benchmark dataset).

- Ensure that these evaluation metrics are aligned with your core KPIs.

- Regularly challenge your dataset and benchmark to be sure you are not ignoring new risks or subject to biases.

- Implement automated alerts for metric degradation (e.g., if accuracy drops below 85%, trigger review).

- Maintain a human review process for high-stakes decisions (legal advice, medical guidance, financial transactions).
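The automated alert in the list above could be implemented as a rolling-window check. The sketch below is one possible shape, assuming each production response eventually receives a correct/incorrect grade; the `AccuracyMonitor` class, the window size and the minimum sample count are illustrative choices, with the 85% threshold taken from the example above.

```python
from collections import deque


class AccuracyMonitor:
    """Rolling-window accuracy check that signals when accuracy falls below a threshold."""

    def __init__(self, threshold: float = 0.85, window: int = 100, min_samples: int = 20):
        self.threshold = threshold
        self.min_samples = min_samples
        self._outcomes = deque(maxlen=window)  # keeps only the most recent grades

    def record(self, correct: bool) -> bool:
        """Store one graded response; return True when a review should be triggered."""
        self._outcomes.append(correct)
        if len(self._outcomes) < self.min_samples:
            return False  # too little data to judge
        accuracy = sum(self._outcomes) / len(self._outcomes)
        return accuracy < self.threshold


# Small window so the behaviour is easy to follow; real windows would be larger.
monitor = AccuracyMonitor(threshold=0.85, window=10, min_samples=5)
graded = [True] * 8 + [False] * 2
alerts = [monitor.record(outcome) for outcome in graded]
print(alerts)
```

The alert fires only on the tenth response, when accuracy over the window drops to 80%. The minimum sample count prevents a single early failure from triggering a review before there is enough data to mean anything.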

Evaluate Responsibly: Energy, Cost, and Compliance

Every benchmark run consumes compute and energy. Every redundant experiment increases cost. Responsible evaluation should balance rigour with efficiency.

Certain practical steps can be taken to ensure energy and costs don’t spiral:

- Use smaller models when possible, running initial experiments on cheaper models and scaling up only when you’ve validated the approach.

- Cache prompts and API calls.

- Run energy-aware scheduling (batch processing, spot instances, flex priority).

- Track compute usage alongside performance.
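Caching is often the quickest of these wins, because evaluation runs tend to repeat the same prompts many times. The sketch below wraps a model-calling function in an in-memory cache keyed on the model name, prompt and parameters. The `CachedModelClient` class and `fake_model` stub are hypothetical, and the approach assumes deterministic settings (for example temperature 0), since cached answers hide sampling variation.

```python
import hashlib
import json
from typing import Callable


class CachedModelClient:
    """Wraps a model-calling function and reuses responses for identical requests."""

    def __init__(self, call_model: Callable[..., str]):
        self._call_model = call_model
        self._cache: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def complete(self, model: str, prompt: str, **params) -> str:
        # The key covers everything that can change the output.
        payload = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if key in self._cache:
            self.hits += 1
        else:
            self.misses += 1
            self._cache[key] = self._call_model(model=model, prompt=prompt, **params)
        return self._cache[key]


def fake_model(model: str, prompt: str, **params) -> str:
    # Stand-in for a real API call (hypothetical).
    return f"[{model}] answer to: {prompt}"


client = CachedModelClient(fake_model)
first = client.complete("small-model", "Summarise my March spending", temperature=0)
second = client.complete("small-model", "Summarise my March spending", temperature=0)
print(client.hits, client.misses)
```

The second identical request is served from the cache, so only one model call is made. Tracking hits and misses alongside benchmark scores also gives a simple view of how much compute a re-run actually consumed.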

Equally, stay alert to emerging AI regulations. Even where no dedicated law exists, existing frameworks and necessary steps still apply, such as:

Data protection:

- Ensure benchmark datasets don't contain PII without proper consent

- Implement data retention policies for logged queries

- Provide mechanisms for data deletion requests

Equality and bias:

- Test performance across demographic groups

- Include diverse representation in benchmark creation
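Testing performance across groups can start with a simple breakdown of an existing accuracy metric. The sketch below computes accuracy per group and the gap between the best and worst served groups; the group labels and results are placeholders, and which groupings are appropriate and lawful to record is a decision for your own compliance process.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Accuracy per group from (group_label, was_correct) pairs."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, ok in records:
        total[group] += 1
        correct[group] += int(ok)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical labelled results; group labels are placeholders.
records = (
    [("group_a", True)] * 9 + [("group_a", False)]
    + [("group_b", True)] * 7 + [("group_b", False)] * 3
)
scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, round(gap, 2))
```

An overall accuracy of 80% would hide the 20-point gap between the two groups in this invented sample. Reporting the gap next to the headline metric makes disparities visible early; with small groups, the variability of each estimate should be considered before drawing conclusions.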

Human rights and transparency:

- Document model limitations clearly for users

- Provide explanations for high-stakes decisions

- Enable human oversight for critical applications

Conclusion: From Evaluation to Evolution

Evaluation isn’t a one-off event but an evolving system. In a fast-moving field, your advantage lies in how quickly you can test, learn, and adapt, so you can deploy models and new solutions to greater effect.

By embedding evaluation as a core engineering and product management activity, teams can innovate faster and more safely. Start by defining what good looks like for your AI application context, set up an evaluation platform, and evolve it into an application-specific benchmark that gives you confidence in production readiness with every iteration.