Introduction

This blog explores our experience, and lessons learned, of building retrieval augmented generation (RAG) based large language model (LLM) applications at scale.

Traditional RAG based LLM applications typically employ some form of cognitive search during the retrieval phase of the execution. When retrieving from a corpus of unstructured data, the most standard approach taken is to employ vector search.

While this approach works well at the prototype scale; there are several issues that often crop up when scaling the application. Below, we discuss the use of graphs to curate unstructured data, in tandem with LLMs, and overcome these challenges.

This is part 2, where we demonstrate a worked example of the approach. See <part 1 for the overall approach.

Approach to a real example

The following sections demonstrate a more involved example that employs this approach to build a graph from unstructured data and retrieves specific context for a given query.

Dataset and tools used

We will use Neo4J database to build our stack and use cypher query language to perform retrievals. We will use the unstructured text from the plot of episodes from the popular TV series Breaking Bad. The unstructured text is scrapped from Wikipedia in public domain.

This text is a good demonstrator of this approach because of the large number of entities, relationships and complex events that happen through the show.

For our demonstration, we will limit our graph scope to the first 5 episodes of the show; but the approach can be extended to a larger corpus of data.

Building knowledge graph

Creating modelling agent

Traditionally, creating a schema for knowledge graph is done manually. This involves data domain experts making decisions and choosing a modelling paradigm. One of the innovations we are driving at Tomoro AI is the concept of creating agents with domain knowledge and skills to make these decisions on behalf of human data modellers.

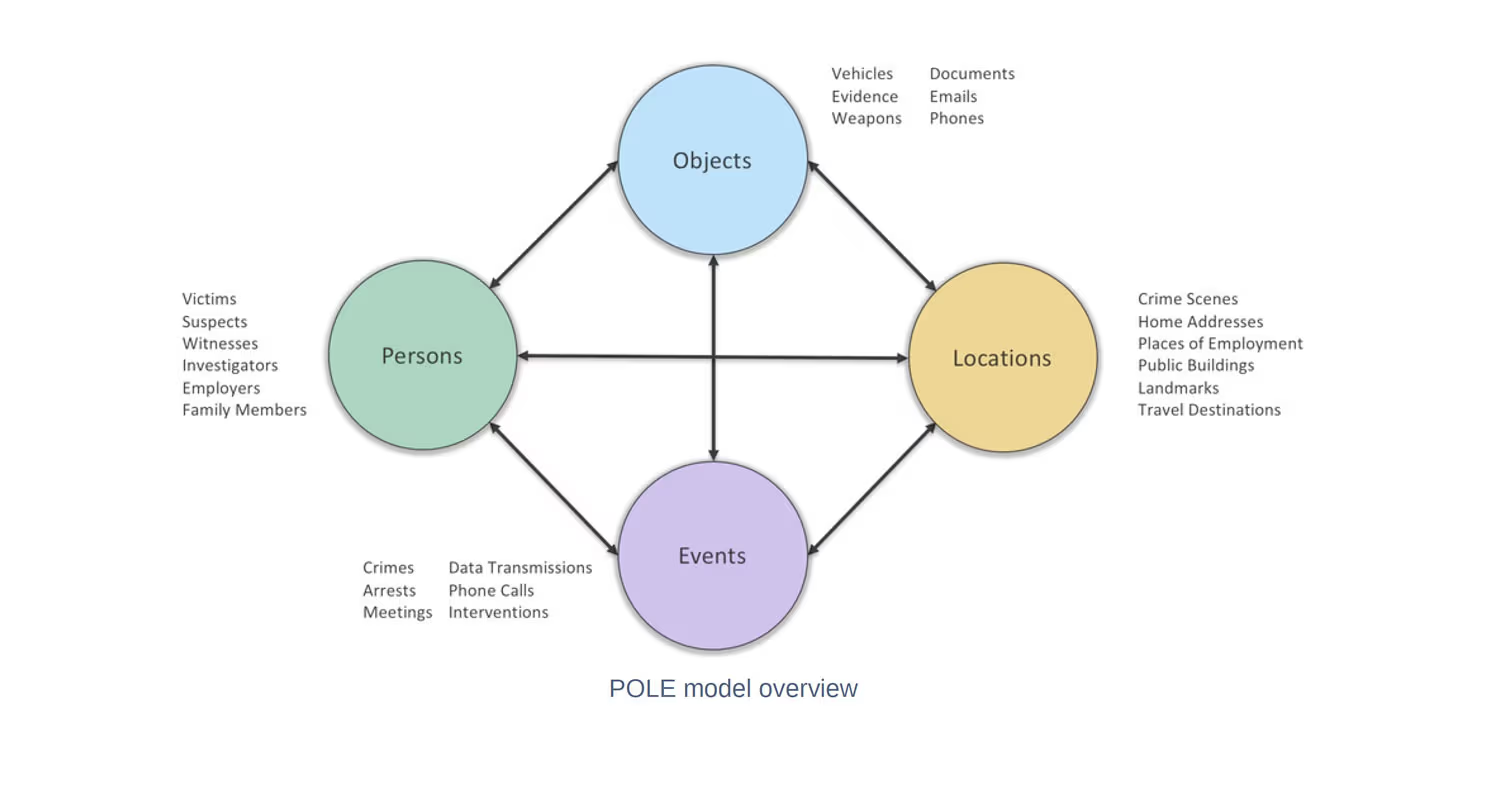

In this example, we use an agent pre-trained on using POLE modelling technique (commonly used in crime prevention and law enforcement) to model the data. It uses People, Objects, Locations and Events as named entities and documents relationships between them.

Tomoro AI agents are a sophisticated collection of prompts, orchestration, long term and short term memory components. They are compiled as independent applications and work with other tools, humans and agents through pre-defined interfaces.

The example here imports a pre-trained agent to model unstructured data. The creation of these agents are out of scope for this document and will be covered in Agent Design Studio proposition.

The code initialises a new instance of graph_modeller_agent with Cypher and POLE modelling skills pre-trained and provides specific instructions for modelling and unstructured text of the episodes.

System prompt: {import pole_graph_modeller.agent()}

Instructions:

Only using the provided unstructured text, please can you create a neo4j database using cypher queries while following these instructions:

Extract PERSONS and OBJECTS from the text as graph nodes.

Extract LOCATIONS and EVENT as relationships between PERSONS and OBJECTS.

Model the personal or professional relationships between PERSONS as graph relationships.

The unstrutured text is as follows:

{bb_episode_text.txt}

The agent uses a combination of NER (named entity recognition) and LLM calls to generate cypher queries that can be streamed into a neo4j instance.

Also note that the request to model the data using cypher query is provided as an instruction. The modeller can output model suitable for Mongo and BigQuery if required.

Building graph database

The agent output in cypher looks something like this:

// Episode 1

CREATE (Walt:Person {name: "Walter White"})

CREATE (Skyler:Person {name: "Skyler White"})

CREATE (WalterJr:Person {name: "Walter Jr."})

CREATE (Hank:Person {name: "Hank Schrader"})

CREATE (Marie:Person {name: "Marie Schrader"})

CREATE (Jesse:Person {name: "Jesse Pinkman"})

CREATE (Bogdan:Person {name: "Bogdan Wolynetz"})

CREATE (Domingo:Person {name: "Domingo Molina"})

CREATE (Emilio:Person {name: "Emilio Koyama"})

CREATE (Krazy8:Person {name: "Krazy-8"})

CREATE (RV:Object {name: "RV"})

CREATE (methLab:Object {name: "meth lab"})

CREATE (chemistrySupplies:Object {name: "chemistry supplies"})

CREATE (Albuquerque:Location {name: "Albuquerque, New Mexico"})

CREATE (highSchool:Location {name: "high school"})

CREATE (carWash:Location {name: "car wash"})

CREATE (hospital:Location {name: "hospital"})

CREATE (methLabLocation:Location {name: "desert"})

CREATE (JesseHome:Location {name: "Jesse's home"})

CREATE (WaltHome:Location {name: "Walt's home"})

CREATE (Walt)-[:AT]->(Albuquerque)

CREATE (Walt)-[:AT]->(carWash)

CREATE (Walt)-[:AT]->(WaltHome)

CREATE (Skyler)-[:AT]->(Albuquerque)

CREATE (WalterJr)-[:AT]->(Albuquerque)

CREATE (Hank)-[:AT]->(Albuquerque)

CREATE (Marie)-[:AT]->(Albuquerque)

CREATE (Jesse)-[:AT]->(JesseHome)

CREATE (Bogdan)-[:AT]->(carWash)

CREATE (Domingo)-[:AT]->(methLabLocation)

CREATE (Emilio)-[:AT]->(methLabLocation)

CREATE (Krazy8)-[:AT]->(JesseHome)

CREATE (Walt)-[:HAS_WIFE]->(Skyler)

CREATE (Walt)-[:HAS_SON]->(WalterJr)

CREATE (Skyler)-[:HAS_SISTER]->(Marie)

CREATE (Hank)-[:IS_BROTHER_IN_LAW_OF]->(Walt)

CREATE (Hank)-[:IS_MARRIED_TO]->(Marie)

CREATE (Jesse)-[:WAS_STUDENT_OF]->(Walt)

CREATE (WaltBirthdayParty:Event {name: "Walt's 50th birthday party"})

CREATE (WaltCollapse:Event {name: "Walt collapses at the car wash"})

CREATE (DEARaid:Event {name: "DEA raid on meth lab"})

CREATE (ChemistrySuppliesTheft:Event {name: "Chemistry supplies theft"})

CREATE (RVDrive:Event {name: "RV drive into the desert"})

CREATE (BrushFire:Event {name: "Brush fire"})

CREATE (PhosphineGas:Event {name: "Phosphine gas synthesis"})

CREATE (ShootingAttempt:Event {name: "Shooting attempt"})

CREATE (SkylerQuestions:Event {name: "Skyler's questions"})

CREATE (Walt)-[:ATTENDED]->(WaltBirthdayParty)

CREATE (Walt)-[:EXPERIENCED]->(WaltCollapse)

CREATE (Walt)-[:WENT_ON_RIDE_ALONG]->(DEARaid)

CREATE (Jesse)-[:WENT_ON_RIDE_ALONG]->(DEARaid)

CREATE (Skyler)-[:TALKED_TO]->(SkylerQuestions)

CREATE (Walt)-[:EXPERIENCED]->(ShootingAttempt) 61 CREATE (Walt)-[:EXPERIENCED]->(DEARaid)

CREATE (Jesse)-[:WITNESSED]->(DEARaid)

CREATE (Emilio)-[:EXPERIENCED]->(PhosphineGas) 64 CREATE (Krazy8)-[:EXPERIENCED]->(PhosphineGas) 65 CREATE (Walt)-[:EXPERIENCED]->(RVDrive)

CREATE (Skyler)-[:ASKED]->(SkylerQuestions)

...Clipped...

Notice how relationship names have been kept standard across the graph, as shown above, like VISITED , EXPERIENCED , MARRIED_TO , ATTENDED , etc.

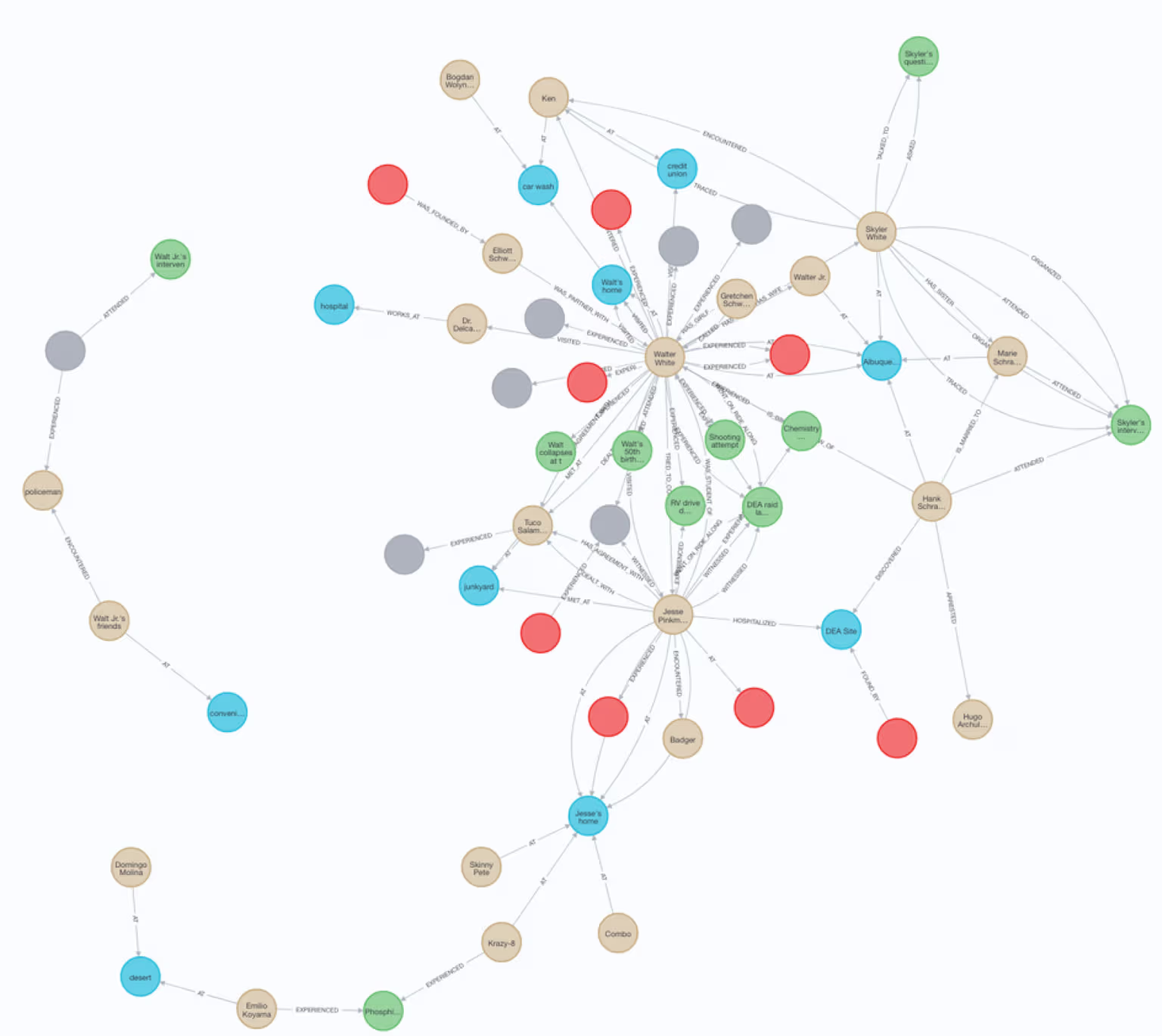

The resulting graph is a dense collection of these concepts:

Graph of 5 five episodes of breaking bad

Querying knowledge graph

Building analyst agent

Similarly to the modelling agent, we use our internal agent composer to import pre-trained analyst agent that has cypher analysis skills.

System prompt: {import cypher_analyst.agent}

Instructions:

Please can you write cypher queries to respond to the following questions

Running queries

The agent is able to explore the graph using cypher queries to discover the node and relationship types using CALL db.labels(); and CALL db.relationshipTypes(); commands since it is pretrained to analyse graph databases. It then applies a selection inference pattern to understand encoded relationship types to create a subset of object required and generates cypher query to target relevant nodes and relationships.

1. Who were involved in the Skyler's intervention event?

MATCH (p:Person)-[:ATTENDED]->(e:Event)

WHERE e.name = 'Skyler\'s intervention' OR e.type = 'Skyler\'s intervention'

RETURN p.name;

Answer:

╒════════════════╕

│p.name │

╞════════════════╡

│"Marie Schrader"│

├────────────────┤

│"Hank Schrader" │

├────────────────┤

│"Skyler White" │

└────────────────┘

2. Who are Walter White's human relationships?

MATCH (w:Person {name: 'Walter White'})-[r]- (p:Person)

RETURN type(r) as Relationship_Type, p.name as Person_Name

Answer:

╒════════════════════════╤═══════════════════╕

│Relationship_Type │Person_Name │

╞════════════════════════╪═══════════════════╡

│"DEALT_WITH" │"Tuco Salamanca" │

├────────────────────────┼───────────────────┤

│"ENCOUNTERED" │"Ken" │

├────────────────────────┼───────────────────┤

│"WAS_GIRLFRIEND_OF" │"Gretchen Schwartz"│

├────────────────────────┼───────────────────┤

│"CALLED" │"Gretchen Schwartz"│

├────────────────────────┼───────────────────┤

│"WAS_PARTNER_WITH" │"Elliott Schwartz" │

├────────────────────────┼───────────────────┤

│"TRIED_TO_CONTACT" │"Jesse Pinkman" │

├────────────────────────┼───────────────────┤

│"VISITED" │"Dr. Delcavoli" │

├────────────────────────┼───────────────────┤

│"VISITED" │"Jesse Pinkman" │

├────────────────────────┼───────────────────┤

│"HAS_AGREEMENT_WITH" │"Tuco Salamanca" │

├────────────────────────┼───────────────────┤

│"WAS_STUDENT_OF" │"Jesse Pinkman" │

├────────────────────────┼───────────────────┤

│"IS_BROTHER_IN_LAW_OF" │"Hank Schrader" │

├────────────────────────┼───────────────────┤

│"HAS_SON" │"Walter Jr." │

├────────────────────────┼───────────────────┤

│"HAS_WIFE" │"Skyler White" │

└────────────────────────┴───────────────────┘

3. Who was arrested by Hank Schrader? 57

MATCH (hank:Person {name: 'Hank Schrader'})-[r:ARRESTED]->(arrestedPerson:Person)

RETURN arrestedPerson.name

Answer:

╒═══════════════════╕

│arrestedPerson.name│

╞═══════════════════╡

│"Hugo Archuleta" │

└───────────────────┘

Notice that some of these questions involve being able to connect relationships between Persons that may not have even occurred in the same episode (Walter White’s relationships). In the unstructured text, they will appear is completely different text.

Also notice how, due to agent’s native understanding of the graph structure, makes it possible to reduce the number of tokens needed to send to LLM to generate cypher queries. Only the most relevant nodes, relationship types relevant to the query are sent to generation step.

Observations and an insight into our further research

In this blog, we demonstrated an alternative approach to using vector based embeddings to perform context retrieval. We observed that using graph databases to model unstructured data can help with:

- Curating relevant concepts and relationships in meaningful ways even if the information is spread out in the original unstructured text.

- We can optimise the number of context tokens required to send to generation when implementing RAG patern by using a local graph databases.

- By not sending large contexts or repeated contexts; we reduce noise and cost of execution per cycle.

- By removing the need to generate embeddings for all data; and by applying a model structure to actual dataset; we also reduce system complexity from implementation point of view.

- By using agents to independently perform modelling and analysis activities; the graph can grow and change without the need of in-band synchronisation between agents.

We are actively pursuing further research on:

- Can the embeddings be added to the graph model to facilitate more robust relationship discovery?

- Can we infer new knowledge from data in knowledge graph that hasn’t been explicitly mentioned in original text?

- Comparative analysis of this approach at scale with a range of vector database to quantitatively measure performance difference and cost to answer / query.

Sounds interesting? Get in touch.