Purpose of This Blog

This blog introduces System Prompt Learning (SPL), examines when to implement it in your AI system, and highlights potential benefits for businesses building or improving AI solutions, while also addressing current challenges.

Introduction

Have you ever been in a situation where a particular prompt does the trick, and then all of sudden it doesn’t?

Have you ever been stuck in the doom loop of constantly patching your system prompt looking to move the needle, and it hasn’t worked out?

Well, System Prompt Learning may just be the thing you need.

System Prompt Learning (SPL) is an emerging area of interest for the AI community and was widely popularised by Andrej Karpathy on X back in May of this year.

System Prompt Learning addresses issues in inflexible and brittle AI systems that rely on static system prompts or unwieldy fine-tuning setups. It represents another approach we can employ to embrace continuous learning within our AI systems.

Before diving in, let's briefly review some prompting fundamentals.

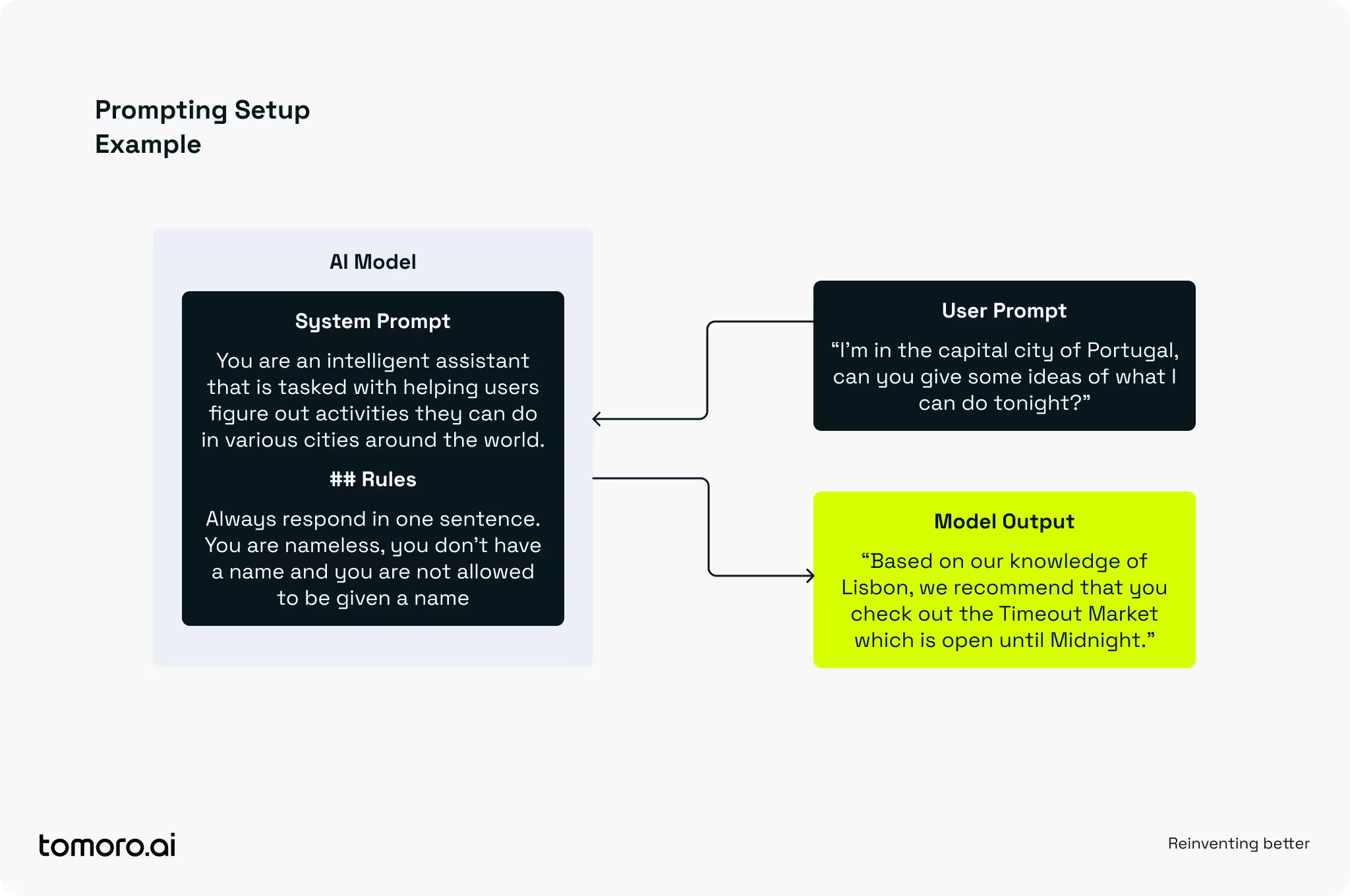

When developing any agent or custom model implementation, we must first design two key components (among others):

- A system prompt

- A user prompt

System Prompts are typically where we lay down the ground rules for how a model should behave and when written for custom AI solutions, usually start with something akin to:

“You are an intelligent assistant, your role is to do <insert task here>.

You must NOT do: (A), (B) or (C)”

This is opposed to User Prompts which typically contain a user’s query, along with other specific metadata related to the user (timezone, preferences etc.) Which could be something similar to:

“I’m in the capital city of Portugal, can you give me some ideas of what I can do tonight?"

System prompt leaks have become commonplace following new model releases from the major AI labs, where chatbot deployments are essentially jailbroken to reveal their underlying given instructions. A popular GitHub repository now compiles many of these system prompts in one place for you to peruse at your leisure. These prompts reveal valuable insights into the "secret sauce" that AI labs have developed over time, to ensure appropriate model behaviour. For example, the recently leaked GPT-5 system prompt (exposed within ChatGPT) contains approximately ~6000 words, demonstrating the huge amount of knowledge and instructions one has to encode to get the system to behave appropriately.

These comprehensive system prompts typically cover several key areas, such as:

- Search instructions

- Tool definitions

- User preferences

- Citation instructions

- Quick patches for known problems

In practice, developers of custom AI systems iteratively patch system prompts manually as they test and refine their applications, primarily using evals to guide this improvement process.

There are also other ways to teach a model how to behave, namely:

- Prompt engineering (including RAG; controlling the content that is provided to the model at any given time)

- Fine-tuning (directly changing the underlying weights of the model)

What if there was another way we could influence model behaviour? One where we enable a system to dynamically learn and refine its own system prompt, using thoughts, plans, and strategies it has previously generated, leveraging both user feedback and LLM-as-a-judge evaluations to rate outputs from a given system.

What is System Prompt Learning?

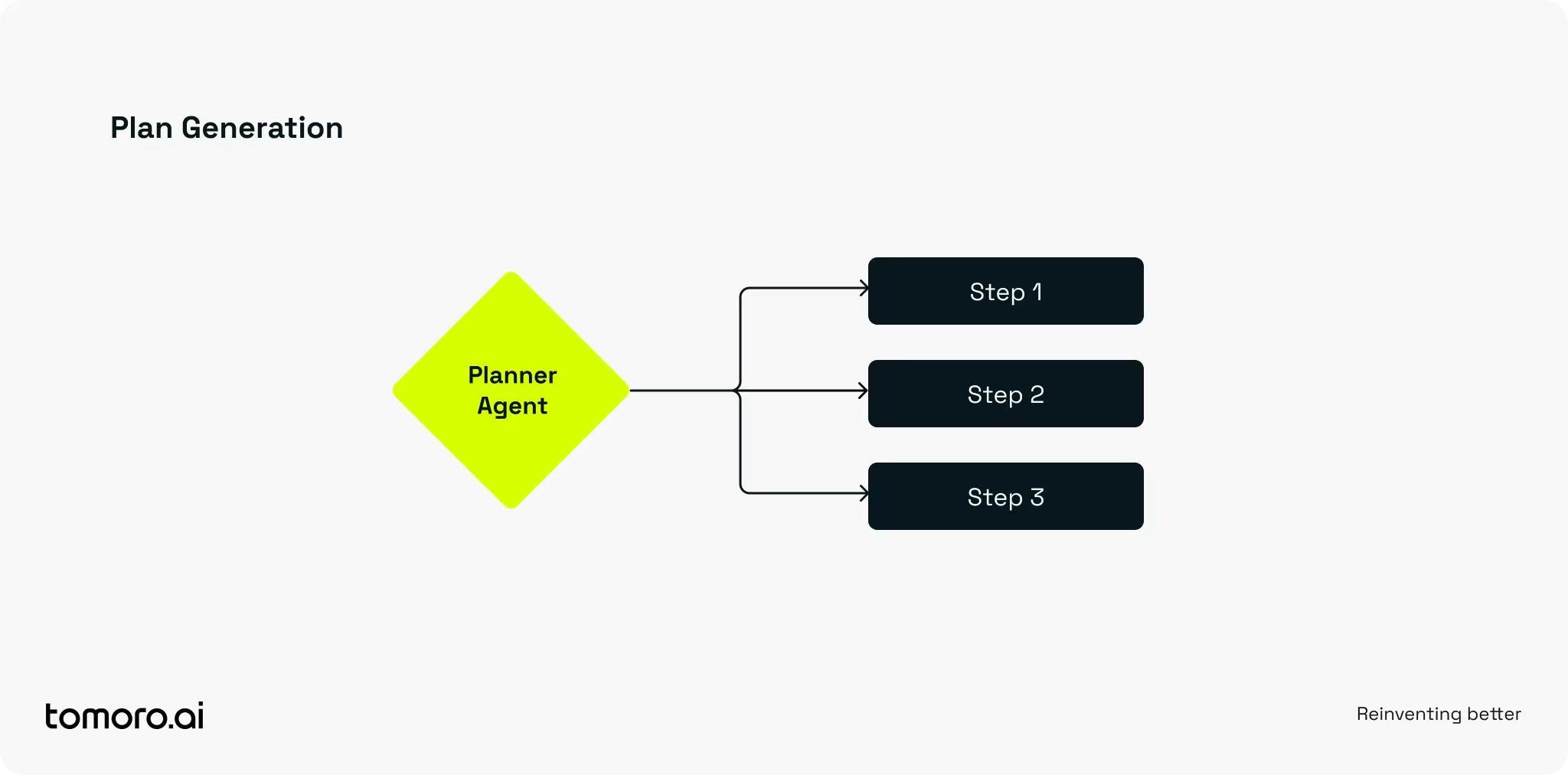

Consider a persistent business challenge you want to automate using an agentic system. Effective solutions require reasoning capabilities beyond basic workflow automation. In such cases, incorporating a plan generation component within your AI system becomes essential. This allows you to access multiple agents in different ways depending on the specific task. Individual steps may include instructions to access other agents to complete subtasks or accessing tools.

Note: An agent tool is any external function, API, or resource an AI agent can call to go beyond text and take real actions.

You might choose to "seed" the plan within the system prompt of the model itself with logical steps a human would follow, though LLMs typically need more specific guidance for things such as tool usage, output styling, and so on. Sometimes you'll face uncertainty about the optimal strategy, or you might be addressing a problem that hasn't been reassessed in a while because it was previously deemed resolved. This is where System Prompt Learning (SPL) comes in.

SPL is the process of iteratively improving your system prompt dynamically by augmenting previously generated strategies to your existing system prompt, and overtime gradually collecting more knowledge as new problems arise, thus making the system more robust. You can view this as building a handbook for solving problems in your domain over time.

SPL offers the ability to gradually encode insights within the system prompt based on user feedback. As your system matures, you'll often discover an array of small issues that can be distilled into more generalised, higher-level principles.

A Step-by-Step Guide

Lets now dive deeper and explain how it works step-by-step:

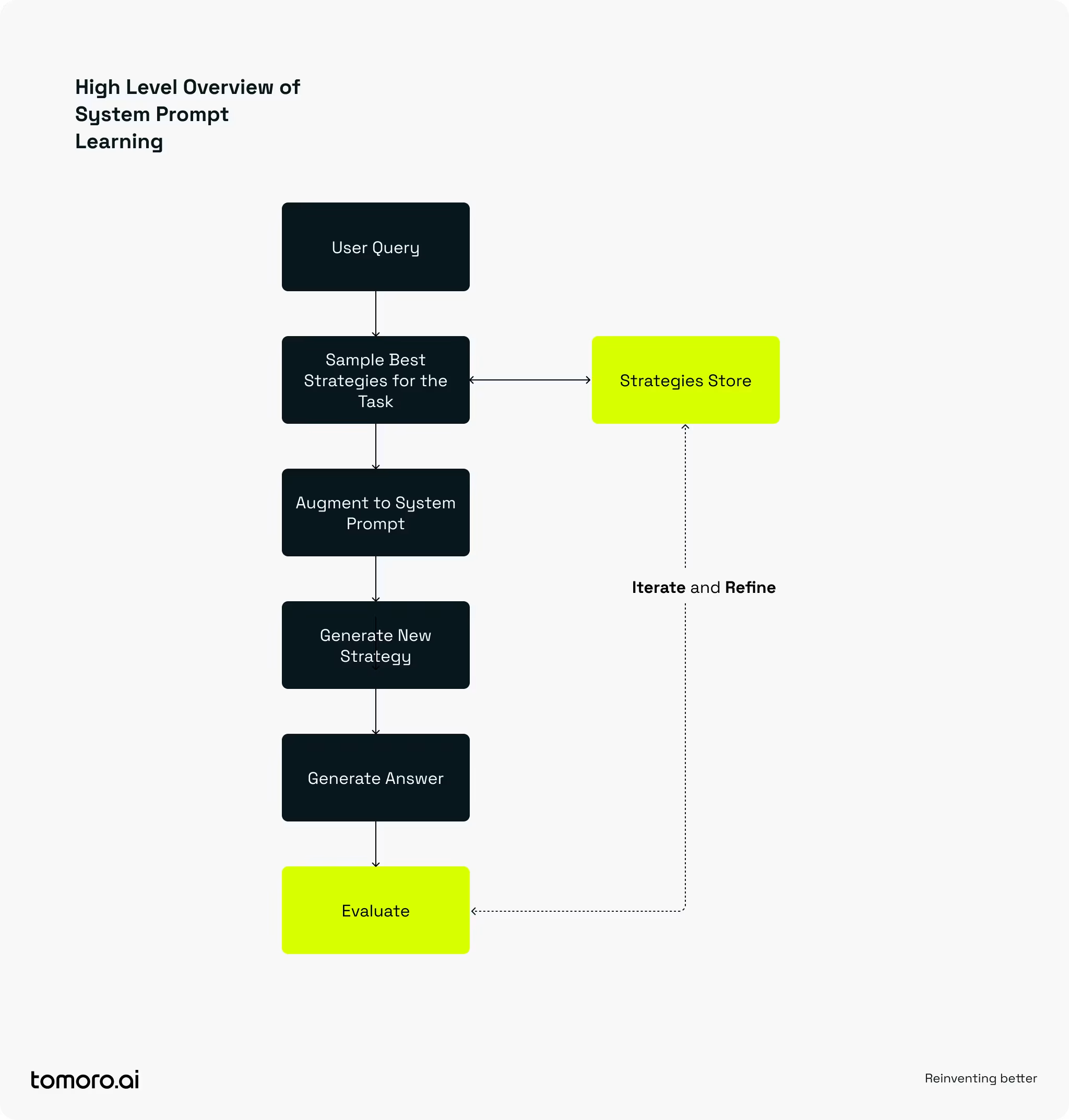

- Begin with the user query, asking the system to perform a specific task.

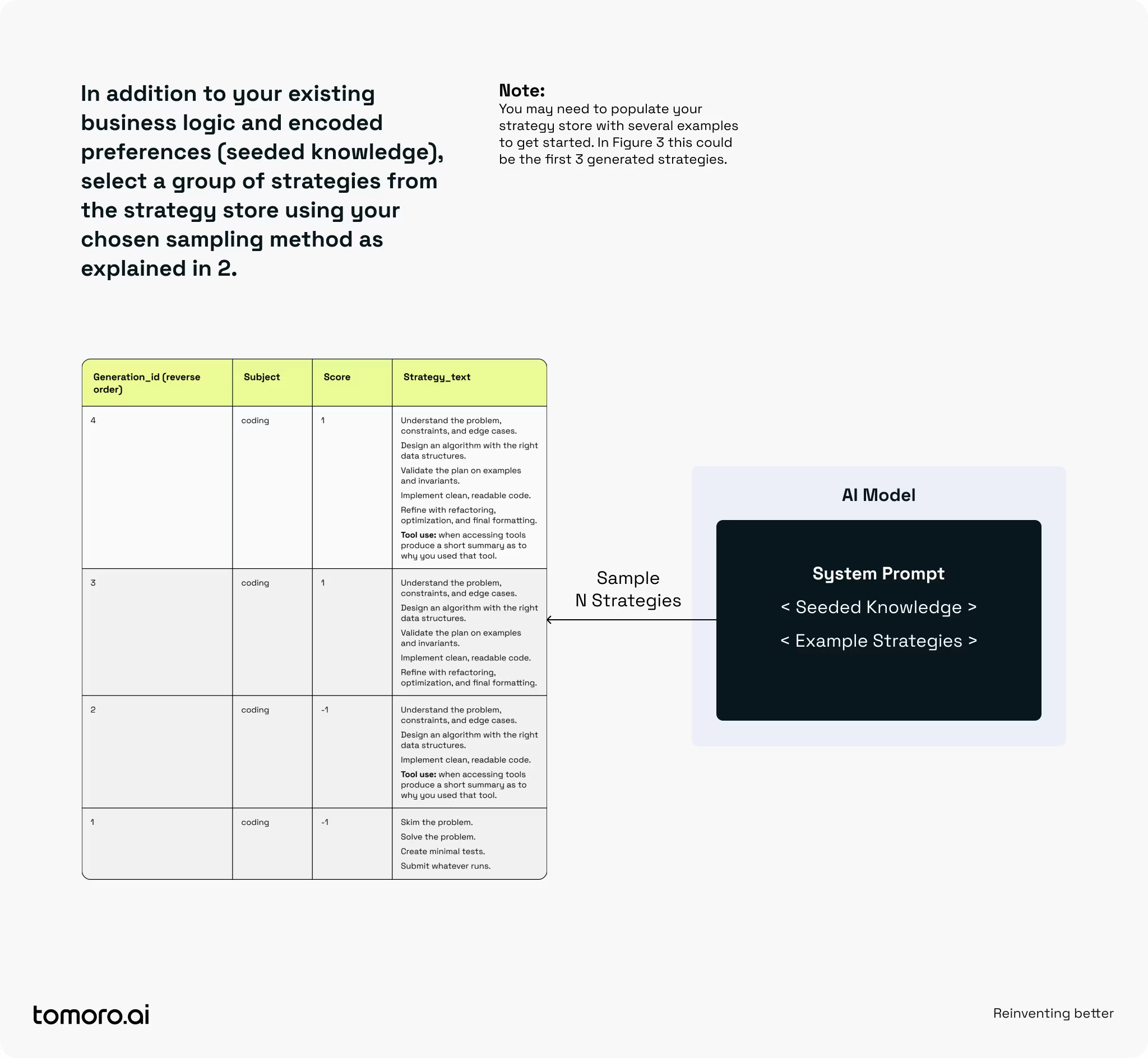

- Sample strategies based on your system's problem-solving scope. For instance, ChatGPT handles diverse problems across coding, math, and other domains, while your system may have a more focused purpose.

- If your system addresses only one specific problem, you might employ a "greedy" approach by selecting the highest-scoring strategies from previous runs, or introduce more exploration by using a probability distribution including mostly highly upvoted strategies with some downvoted ones. This is particularly useful when you're just beginning to collect strategies.

- For systems designed to handle diverse problem sets, consider adding a classification layer or using embeddings and cosine similarity (the same process you would typically employ in RAG) to identify relevant approaches. This ensures you select strategies appropriate to the specific problem for example strategies tailored for coding problems.

Note: Embeddings used with cosine similarity let us measure how closely two pieces of information, making it easy to match related documents, queries, or ideas even if the exact words don’t align.

Example starting point for a simplified strategy store for tackling coding problems.

Note: The "seed strategies" shown here are illustrative; in real coding scenarios we'd refine them further, and for niche business problems many more learnings would need to be collected over time.

| Generation_id (reverse order) | Subject | Score | Strategy_text | Explanation |

|---|---|---|---|---|

| 4 | coding | 1 | Understand the problem,constraints, and edge cases. Design an algorithm with the right data structures. Validate the plan on examples and invariants. Implement clean, readable code. Refine with refactoring, optimization, and final formatting. Tool use: when accessing tools produce a short summary as to why you used that tool. | Incorporates all of the good points from below having sampled the 3 below points, blending them together. |

| 3 | coding | 1 | Understand the problem, constraints, and edge cases. Design an algorithm with the right data structures. Validate the plan on examples and invariants. Implement clean, readable code. Refine with refactoring, optimization, and final formatting. | More well rounded strategy but includes nothing about how to use tools. |

| 2 | coding | -1 | Understand the problem, constraints, and edge cases. Design an algorithm with the right data. Implement clean, readable code. Tool use: when accessing tools produce a short summary as to why you used that tool. | Better strategy, could improve, mentions tool use. |

| 1 | coding | -1 | Skim the problem. Solve the problem. Create minimal tests. Submit whatever runs. | Mentions tests, but overall a bad strategy. |

3. After sampling a number of strategies N, incorporate them into the system prompt. This provides essential grounding for plan generation, rather than having the model create plans with minimal expert feedback. Include instructions that encourage your model to "think outside the box" and add steps when necessary, instead of simply copying sample strategies verbatim.

4. With your dynamically created system prompt, generate a new strategy to address the user's request. This process should yield additional tasks that enhance your final output. The goal is creativity, blending the best elements from prior strategies, consolidating overlapping steps, and adding helpful new steps where needed.

Note: Remember that temperature is a parameter than can be modified to skew the output distribution of a language model such that it produces more diverse outputs that are less deterministic, which is desirable when we are looking for creativity. When using reasoning models they are restricted to a non-zero temperature so every time you generate a plan, you will likely get a different result.

5. After receiving the model's output, evaluate it using either a human rater or LLM judge based on specific criteria that define a good solution to your problem. For the Portugal activities example mentioned earlier, evaluation criteria might include:

- conciseness (answer limited to one sentence)

- relevance of the activity suggestion

- location accuracy.

6. Based on this evaluation, use another model to refine the strategy with an optional feedback loop that incorporates human input, allowing collaborative design of better strategies for future use. Store the refined strategy in your database with appropriate metadata to track versions and modifications.

So why go through all of this trouble? One could simply review outputs manually and adjust the system prompt accordingly. However, leveraging powerful reasoning models to refine strategies offers significant advantages, incorporating both output context and human feedback. While humans easily spot flaws in simple approaches, this becomes challenging and tedious when dealing with complex systems addressing broader problem sets.

As mentioned previously, LLMs often require more detailed instructions and additional steps to gather the contextual knowledge that humans naturally possess when solving problems, and this list of tasks can grow exponentially as the scope of a system expands to wider problem sets. An example of one of these additional steps would be when tackling coding problems, humans typically understand the wider codebase context intuitively, while an LLM must first "read" through multiple code files to comprehend the problem adequately.

The Impact of Implementing SPL in your AI Solutions

Discovering New Ways to Solve Problems

- When it helps: Imagine you run a customer support team and an AI agent is handling ticket triage. Over time, SPL might uncover a novel categorisation method that reduces escalation rates, a process your team hadn’t considered before.

- When it doesn’t: If your workflows are already rigidly defined by compliance or regulation (e.g., financial reporting), SPL may offer little value since creativity is a liability, not an asset.

Enhancing Human-AI Collaboration

- When it helps: In research-intensive roles (such as market intelligence or product strategy), you can collaborate with the AI by refining its plans, enriching it’s output, and incorporating these improvements for future use. Each interaction enhances the system's effectiveness.

- When it doesn’t: If your team mostly uses AI for straightforward workflows where human input is minimal (e.g., invoice processing), collaboration overhead may outweigh the benefit.

Adaptation to New Problems

- When it helps: Suppose you expand into a new region and the AI must suddenly handle local tax inquiries. SPL allows you to quickly encode new rules and heuristics as they emerge, preventing repeated errors.

- When it doesn't: If your environment is static (such as converting meeting transcripts into standardised summaries), constant adaptation offers minimal benefits.

Challenges and Risk Factors

Now in theory all of this sounds great but there are some real challenges when trying to implement SPL. We discuss some of the main ones below:

Lack of Convergence

In the early phases of strategy generation, it’s common to stall, new outputs fail to build on past ones, and momentum slows. Two main issues usually drive this:

- Shallow seed knowledge: If your initial system prompt is too simplistic or missing key business logic, the model has no strong foundation to build from.

- Solution: Encode all available business knowledge up front so the system has depth to draw on.

- Weak evaluation signals: A basic upvote/downvote rarely captures what makes an output useful. Without richer feedback, strategies blur together.

- Solution: Design a nuanced rubric that scores multiple aspects of an answer (e.g., accuracy, clarity, relevance) and adjust your sampling to reflect these signals.

Strategy Explosion

If your system generates hundreds of strategies but receives little feedback to distinguish good from bad, sampling quickly becomes unwieldy. The answer is pruning.

When refining your strategy store, consider:

- Lifespan: Retire strategies once they’ve aged beyond a set timeframe or number of generations.

- Score: Use your evaluation rubric to filter out consistently low-performing strategies. Combining this with lifespan ensures you’re only keeping approaches that prove their value over time.

- LLM Judging: Periodically assess strategies to identify those that no longer add unique insights, since their useful elements are likely already absorbed into newer versions.

Solution: Treat your strategy database as a living system, regularly prune it so only relevant, high-value knowledge persists.

Conclusion

System Prompt Learning is still in its infancy, but its potential is enormous. Businesses that continue to rely solely on static prompts or endless fine-tuning will encounter the same brittle limitations: fragility, mounting costs, and wasted effort. SPL offers a path out of that loop, a way to build systems that genuinely become smarter over time by encoding higher-level principles rather than just patch-like instructions.

SPL is still emerging, but its trajectory is clear: systems that can learn from themselves will outpace those that can’t. Now is the time to experiment, start small, capture lessons, and build the foundation for AI systems that improve with every interaction.